Proceedings of Machine Learning Research 182:1–24, 2022 Machine Learning for Healthcare

Contrastive Learning of Medical Visual Representations from Paired Images and Text

Yuhao Zhang∗

Biomedical Informatics Training Program, Stanford University

Hang Jiang∗ hjian42@stanford.edu

Symbolic Systems Program, Stanford University

Yasuhide Miura† ysmiura@stanford.edu

Computer Science Department, Stanford University

Christopher D. Manning manning@stanford.edu

Computer Science and Linguistics Departments, Stanford University

Curtis P. Langlotz langlotz@stanford.edu

Department of Radiology, Stanford University

Abstract

Learning visual representations of medical images (e.g., X-rays) is core to medical image understanding, but its progress has been held back by the scarcity of human annotations. Existing work commonly relies on fine-tuning weights transferred from ImageNet pretraining, which is suboptimal due to drastically different image characteristics, or on rule-based label extraction from the textual report data paired with medical images, which is inaccurate and hard to generalize. Meanwhile, several recent studies show exciting results from unsupervised contrastive learning from natural images, but we find these methods help little on medical images because of their high inter-class similarity. We propose ConVIRT, an alternative unsupervised strategy to learn medical visual representations by exploiting naturally occurring paired descriptive text. Our new method of pretraining medical image encoders with the paired text data via a bidirectional contrastive objective between the two modalities is domain-agnostic and requires no additional expert input. We test ConVIRT by transferring our pretrained weights to 4 medical image classification tasks and 2 zero-shot retrieval tasks, and show that it leads to image representations that considerably outperform strong baselines in most settings. Notably, in all 4 classification tasks, our method requires only 10% as much labeled training data as an ImageNet-initialized counterpart to achieve better or comparable performance, demonstrating superior data efficiency.

∗ The first two authors contributed equally. YZ is now affiliated with AWS AI Labs; the work was done before his current affiliation. HJ is now affiliated with Massachusetts Institute of Technology.

† YM is now affiliated with FUJIFILM Corporation.

© 2022 Y. Zhang, H. Jiang, Y. Miura, C.D. Manning & C.P. Langlotz.


1. Introduction

Medical image understanding has the potential to transform healthcare and has seen rapid progress with deep learning (Gulshan et al., 2016; Esteva et al., 2017; De Fauw et al., 2018; Rajpurkar et al., 2018b). Yet, with expert-level performance achieved only in some specialties and under some circumstances, medical image understanding remains a difficult task, with classifications dependent on subtle visual distinctions in otherwise similar images. This difficulty is further exacerbated by the extreme scarcity of annotated data.

Figure 1: Two example chest X-ray images with different abnormality categories, along with sentences from their paired textual report and example views indicative of their characteristics. [Figure: example report sentences "Severe cardiomegaly is noted in the image with enlarged…" and "Radiograph shows pleural effusion in the right…"]

Existing work has followed two general approaches to obtain annotations for medical imaging tasks. The first approach has been to use high-quality annotations created by medical experts (Abràmoff et al., 2016; Gulshan et al., 2016; Shih et al., 2019; Wang and Wong, 2020). However, the high cost of this approach has resulted in datasets that are mostly orders of magnitude smaller than natural image datasets such as ImageNet (Russakovsky et al., 2015). To remedy this, existing work has relied heavily on transferring model weights from ImageNet pretraining (Wang et al., 2017; Esteva et al., 2017; Irvin et al., 2019). This approach is suboptimal because, as shown in Figure 1, medical image understanding often requires representations of very fine-grained visual features that are drastically different from those required for identifying objects in natural images. As a result, Raghu et al. (2019) found that ImageNet pretraining often provides little to no benefit compared to simple random initialization.

A second popular approach is to use expert-crafted rules to extract labels from the textual reports accompanying the images. This approach has led to datasets of larger scale, since the text data paired with medical images are often produced naturally by medical experts in their routine workflow and are abundant in a typical hospital's IT systems. Nevertheless, this rule-based label extraction approach has two key limitations: 1) the rules are often inaccurate and limited to a few categories (Wang et al., 2017), leading to very inefficient use of the textual report data; 2) these rules are often domain-specific and sensitive to the style of the text, making cross-domain and cross-institution generalization difficult (Irvin et al., 2019).


In an effort to make more efficient use of unlabeled image data, several recent studies have shown promising results from contrastive representation learning on natural images (Chen et al., 2020a; He et al., 2020; Grill et al., 2020). However, as we will show, applying these image view-based contrastive methods to medical images provides only marginal benefits compared to ImageNet pretraining, a result mostly due to the high inter-class similarity of medical images, as illustrated in Figure 1.

In this work, we introduce a new method to improve visual representation learning on medical images by combining the benefits of both learning from abundant textual data and unsupervised statistical approaches. We present Contrastive VIsual Representation Learning from Text (ConVIRT), a framework for learning visual representations by exploiting the naturally occurring pairing of images and textual data. ConVIRT improves visual representations by maximizing the agreement between true image-text pairs versus random pairs via a bidirectional contrastive objective between the image and text modalities. We apply ConVIRT to the pretraining of medical image encoders, and show that it leads to higher-quality in-domain image representations that capture the subtlety of visual features required for medical image understanding tasks.

Compared to existing methods, ConVIRT has the advantages of utilizing the paired text data in a way agnostic to the medical specialty and requiring no additional expert input. This allows us to evaluate ConVIRT by transferring our pretrained encoder weights to 4 different medical image classification tasks covering 2 medical specialties. We find that the resulting models outperform all baseline initialization approaches, including the widely used ImageNet pretraining and strong baselines that also utilize the paired text data. ConVIRT further improves upon popular image-only unsupervised learning methods such as SimCLR (Chen et al., 2020a) and MoCo v2 (Chen et al., 2020b). Most notably, in all 4 classification tasks, ConVIRT requires only 10% as much labeled training data as an ImageNet-initialized counterpart to achieve better or comparable performance. We further evaluate ConVIRT on two new zero-shot retrieval tasks, an image-image and a text-image retrieval task, and also find it superior to all baselines.

Since its original release in 2020, ConVIRT has directly inspired subsequent studies such as the CLIP framework (Radford et al., 2021) and the ALIGN model (Jia et al., 2021), which showed that direct adaptations of ConVIRT-style pretraining at much larger scales lead to state-of-the-art general visual recognition capabilities. To facilitate future research, we make our model and the collected retrieval datasets¹ publicly available.

1.1. Generalizable Insights about Machine Learning in the Context of Healthcare

Healthcare data is usually scarce and costly to annotate compared to data in the general domain. As a result, machine learning models built with a single modality of healthcare data often struggle to generalize because of the small size of their training data. Meanwhile, healthcare data is often naturally paired with multimodal clinical features, including text descriptions or patient metadata, which can be exploited to reduce the cost of building reliable machine learning models. Our method, ConVIRT, demonstrates an application of this idea to learning robust medical image encoders by reusing descriptive text naturally produced by experts via a cross-modality learning framework. We show that this simple method can greatly benefit downstream predictive tasks with reduced annotation cost. Since the release of our work, similar image-text pretraining strategies have been used to improve more downstream healthcare tasks, including image regeneration (Wang et al., 2021), medical visual question answering (Eslami et al., 2021) and clinical risk prediction (Zang and Wang, 2021). Moreover, a similar idea can be extended to include other modalities of healthcare data, such as multiomics data (Han et al., 2021) or patient metadata (Vu et al., 2021), for more robust and cost-effective machine learning applications in the healthcare domain.

¹ https://github.com/yuhaozhang/convirt

2. Related Work

Our work is most relevant to work on medical image classification, which we have discussed in Section 1, and textual report generation from medical images (Wang et al., 2018; Jing et al., 2018; Liu et al., 2019; Miura et al., 2021). A dominant approach for initializing medical image encoders in relevant studies has been using encoder weights pretrained on ImageNet, despite the drastic difference in image characteristics (Raghu et al., 2019). Instead, we propose an alternative in-domain pretraining strategy for medical imaging and compare different pretraining approaches that also use the paired medical reports. Our work is inspired by the recent line of work on image view-based contrastive learning (Hénaff et al., 2020; Chen et al., 2020a; He et al., 2020; Grill et al., 2020; Sowrirajan et al., 2021; Azizi et al., 2021), but fundamentally differs from existing studies by applying contrastive learning across the image and text modalities. As we show in Section 6, the added semantics from the text data make contrastive learning more effective in learning high-quality representations of medical images. To our knowledge, our work represents the first systematic attempt in this direction.

Another line of work related to ours is visual-linguistic representation learning (Lu et al., 2019; Tan and Bansal, 2019; Su et al., 2020). Among existing studies, Ilharco et al. (2021) and Gupta et al. (2020) explored cross-modality contrastive objectives related to ours, but for the purpose of probing visual-linguistic models and learning phrase grounding, respectively. Our work differs from most work in visual-linguistic pretraining in several crucial ways: 1) existing work in visual-linguistic learning focused on learning visual representations from paired text via a binary contrastive prediction task, whereas we contribute by showing the superior performance of the new cross-modality NCE objectives in improving visual representations; 2) existing work has primarily relied on object representations extracted from image segmentation models in their preprocessing steps, making them less applicable to medical image understanding tasks where anatomical segmentations are extremely hard to obtain; 3) while existing work has run evaluation primarily on visual-linguistic tasks such as visual question answering, we instead focus on evaluation with classification and retrieval tasks, which are at the center of medical image understanding research.

Several concurrent papers have studied the problem of learning visual representations from text data (Sariyildiz et al., 2020; Desai and Johnson, 2021) on general-domain image problems. Most notably, since the original release of our work, ConVIRT has been applied at larger scales in several general visual recognition studies, including the CLIP model (Radford et al., 2021), which uses a simplified version of the ConVIRT approach, and the ALIGN model by Jia et al. (2021). These successful applications have confirmed that ConVIRT is a promising strategy for learning visual representations from human-written descriptive text, and that it has the potential to further advance the state of the art for visual recognition tasks.

There are also subsequent studies that focus mainly on medical-domain image problems. To the best of our knowledge, ConVIRT was the first work to leverage a text-image contrastive loss for pretraining medical visual representations, and it has been followed by numerous papers (Heiliger et al., 2022) that apply multimodal contrastive learning to the medical imaging domain. Wang et al. (2021) demonstrated the feasibility of such a pretraining strategy across mixed data inputs (image-only, text-only, image-text pairs) in three chest X-ray applications (i.e., classification, retrieval, and image regeneration). Müller et al. (2021) proposed a similar method, LoVT, for localized medical imaging tasks. Huang et al. (2021) adapted our method and further proposed GLoRIA to contrast image sub-regions and words in the paired report. Liao et al. (2021) trained image and text encoders by encouraging the resulting representations to exhibit high local mutual information. Eslami et al. (2021) proposed PubMedCLIP to better adapt CLIP to the Medical Visual Question Answering (MedVQA) task. Zang and Wang (2021) applied a similar contrastive learning framework to clinical risk prediction based on longitudinal electronic health records. Han et al. (2021) extended ConVIRT to use radiomics features and contrastive learning for pneumonia detection, and Vu et al. (2021) selected positive pairs coming from views of possibly different images through the use of patient metadata.

3. Methods

3.1. Task Definition

We start by defining our representation learning setting. We assume paired input $(x_v, x_u)$, where $x_v$ represents one or a group of images, and $x_u$ represents a text sequence which describes the imaging information in $x_v$. Our goal is to learn a parameterized image encoder function $f_v$, which maps an image to a fixed-dimensional vector. We are then interested in transferring the learned image encoder function $f_v$ into downstream tasks, such as classification or image retrieval. In this work, we model the encoder function $f_v$ as a convolutional neural network (CNN).

We note that paired image-text data $(x_v, x_u)$ naturally exists for many medical domains. Medical experts such as radiologists produce textual descriptions of images as part of their routine workflow, some of which are also made publicly available (Demner-Fushman et al., 2016; Johnson et al., 2019).

3.2. Contrastive Visual Representation Learning from Text

An overview of our method, ConVIRT, for learning $f_v$ is shown in Figure 2. At a high level, our method converts each input image $x_v$ and text $x_u$ into $d$-dimensional vector representations $v$ and $u$, respectively, following a similar processing pipeline. For each input image $x_v$, our method starts by drawing a random view $\tilde{x}_v$ from $x_v$ with a sampled transformation function $t_v \sim \mathcal{T}$, where $\mathcal{T}$ represents a family of stochastic image transformation functions described later. Next, the encoder function $f_v$ transforms $\tilde{x}_v$ into a fixed-dimensional vector $h_v$, followed by a non-linear projection $g_v$ which further transforms $h_v$ into vector $v$:

$$v = g_v(f_v(\tilde{x}_v)), \tag{1}$$

where $v \in \mathbb{R}^d$. Similarly, for each text input $x_u$, we obtain a span $\tilde{x}_u$ from it following a sampling function $t_u$, and then a text representation $u$ with $u = g_u(f_u(\tilde{x}_u))$, where $f_u$ is a text encoder, $g_u$ a projection, and $u \in \mathbb{R}^d$. The projection functions $g_v$ and $g_u$ project representations for both modalities from their encoder space to the same $d$-dimensional space for contrastive learning.

Figure 2: Overview of our ConVIRT framework. The blue and green shades represent the image and text encoding pipelines, respectively. Our method relies on maximizing the agreement between the true image-text representation pairs with bidirectional losses $\ell^{(v\to u)}$ and $\ell^{(u\to v)}$. [Figure: image pipeline $x_v \xrightarrow{t_v} \tilde{x}_v \xrightarrow{f_v} h_v \xrightarrow{g_v} v$ (Image Encoder); text pipeline $x_u \xrightarrow{t_u} \tilde{x}_u \xrightarrow{f_u} h_u \xrightarrow{g_u} u$ (Text Encoder); example report sentences: "Heart size is enlarged…", "No abnormality seen …", "Clear consolidation at…".]

At training time, we sample a minibatch of $N$ input pairs $(x_v, x_u)$ from training data and calculate their representation pairs $(v, u)$. We use $(v_i, u_i)$ to denote the $i$-th pair. The training objective of ConVIRT involves two loss functions. The first loss function is an image-to-text contrastive loss for the $i$-th pair:

$$\ell_i^{(v\to u)} = -\log \frac{\exp(\langle v_i, u_i\rangle/\tau)}{\sum_{k=1}^{N}\exp(\langle v_i, u_k\rangle/\tau)}, \tag{2}$$

where $\langle v_i, u_i\rangle$ represents the cosine similarity, i.e., $\langle v, u\rangle = v^\top u / (\|v\|\|u\|)$, and $\tau \in \mathbb{R}^+$ represents a temperature parameter. This loss takes the same form as the InfoNCE loss (Oord et al., 2018), and minimizing it maximizes a lower bound on the mutual information between the true pairs under the representation functions. Intuitively, it is the log loss of an $N$-way classifier that tries to predict $(v_i, u_i)$ as the true pair. Note that unlike previous work, which uses a contrastive loss between inputs of the same modality (Chen et al., 2020a; He et al., 2020), our image-to-text contrastive loss is asymmetric with respect to the two input modalities. We therefore define a similar text-to-image contrastive loss as:

$$\ell_i^{(u\to v)} = -\log \frac{\exp(\langle u_i, v_i\rangle/\tau)}{\sum_{k=1}^{N}\exp(\langle u_i, v_k\rangle/\tau)}. \tag{3}$$
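To make Eqs. (2) and (3) concrete, here is a minimal, dependency-free Python sketch of the per-pair losses for one minibatch. It is our illustration under simplifying assumptions, not the authors' released implementation: the function names are hypothetical, vectors are plain lists, and a practical version would operate on batched tensors. Because the two losses differ only in which modality serves as the anchor, a single function computes both by swapping its arguments.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity <a, b> = a^T b / (||a|| ||b||); inputs must be non-zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def contrastive_losses(anchors: Sequence[Vector], targets: Sequence[Vector],
                       tau: float) -> List[float]:
    """Per-pair InfoNCE losses: anchor i must single out targets[i] among all N targets.

    contrastive_losses(v, u, tau) gives l_i^(v->u) as in Eq. (2);
    contrastive_losses(u, v, tau) gives l_i^(u->v) as in Eq. (3).
    """
    losses = []
    for i, anchor in enumerate(anchors):
        # <anchor, target_k> / tau for every k = 1..N in the minibatch
        logits = [cosine(anchor, target) / tau for target in targets]
        # -log softmax(logits)[i], via a max-shifted log-sum-exp for stability
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
        losses.append(log_denominator - logits[i])
    return losses
```

The max shift inside the log-sum-exp keeps `math.exp` from overflowing when $\tau$ is small and the logits are correspondingly large.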


Our final training loss is then computed as a weighted combination of the two losses averaged over all positive image-text pairs in each minibatch:

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N}\left(\lambda\,\ell_i^{(v\to u)} + (1-\lambda)\,\ell_i^{(u\to v)}\right), \tag{4}$$

where $\lambda \in [0, 1]$ is a scalar weight.
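Given the per-pair losses $\ell_i^{(v\to u)}$ and $\ell_i^{(u\to v)}$ from Eqs. (2) and (3) as two lists, Eq. (4) reduces to a weighted mean. The sketch below is a hypothetical helper written for illustration in plain Python; the argument names are our own.

```python
from typing import Sequence


def convirt_objective(loss_v2u: Sequence[float], loss_u2v: Sequence[float],
                      lam: float) -> float:
    """Eq. (4): mean over the N pairs of lam * l_i^(v->u) + (1 - lam) * l_i^(u->v)."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    if len(loss_v2u) != len(loss_u2v) or len(loss_v2u) == 0:
        raise ValueError("expected the same, non-zero number of losses per direction")
    total = sum(lam * a + (1.0 - lam) * b for a, b in zip(loss_v2u, loss_u2v))
    return total / len(loss_v2u)
```

Setting `lam=1.0` or `lam=0.0` recovers a purely image-to-text or purely text-to-image objective, so $\lambda$ simply trades off the two directions.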

3.3. Realization

We note that our ConVIRT framework defined above is agnostic to the specific choice of image and text encoders, transformations and projection functions. Following previous work (Chen et al., 2020a), we model $g_v$ and $g_u$ as separate learnable single-hidden-layer neural networks, i.e., $g_v(\cdot) = W^{(2)}\sigma(W^{(1)}(\cdot))$, where $\sigma$ is a ReLU non-linearity, and similarly for $g_u$.
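The projection head $g(\cdot) = W^{(2)}\sigma(W^{(1)}(\cdot))$ can be sketched in plain Python as below. The weights in any example are toy values; the layer sizes, the absence of bias terms (mirroring the formula as written) and the function names are our assumptions. $g_v$ and $g_u$ would each hold their own $W^{(1)}$ and $W^{(2)}$, sharing only the output dimension $d$ so that $v$ and $u$ live in the same space.

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def matvec(w: Matrix, x: Sequence[float]) -> List[float]:
    """Matrix-vector product W x, with W given as a list of rows."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]


def relu(x: Sequence[float]) -> List[float]:
    """Element-wise ReLU non-linearity sigma."""
    return [max(0.0, x_i) for x_i in x]


def projection_head(w1: Matrix, w2: Matrix, h: Sequence[float]) -> List[float]:
    """g(h) = W2 relu(W1 h): one hidden layer, no bias terms, following the formula."""
    return matvec(w2, relu(matvec(w1, h)))
```

For instance, with a 2-dimensional encoder output $h$, a 3-unit hidden layer and $d = 2$, `w1` has shape 3×2 and `w2` has shape 2×3.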

For the image encoder $f_v$, we use the ResNet50 architecture (He et al., 2016) for all experiments, as it is the architecture of choice for much medical imaging work and has been shown to achieve competitive performance. For the text encoder $f_u$, we use a BERT encoder (Devlin et al., 2019) followed by a max-pooling layer over all output vectors. We initialize this encoder with the ClinicalBERT weights (Alsentzer et al., 2019) pretrained on the MIMIC clinical notes, which achieved state-of-the-art performance on a suite of clinical NLP tasks. At training time, we allow the encoder to adapt to our contrastive task by freezing the embeddings and the first 6 transformer layers of this BERT encoder and fine-tuning the last 6 layers.
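The text-encoder details above can be illustrated with two small helpers: element-wise max-pooling over BERT's output token vectors to obtain a single vector $h_u$, and a predicate deciding which parameters stay trainable when the embeddings and the first 6 transformer layers are frozen. The parameter-name patterns (`embeddings.*`, `encoder.layer.<i>.*`) are an assumption modeled on Hugging Face's `BertModel` naming; the names of the actual checkpoint should be checked before relying on them.

```python
import re
from typing import List, Sequence


def max_pool(token_vectors: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise max over all output token vectors, giving one text vector h_u.

    In a padded batch, padding positions should be masked out first (not shown).
    """
    return [max(column) for column in zip(*token_vectors)]


# Assumed naming scheme: "encoder.layer.<index>.<rest of the parameter name>"
_LAYER = re.compile(r"^encoder\.layer\.(\d+)\.")


def is_trainable(param_name: str, num_frozen_layers: int = 6) -> bool:
    """False for embedding parameters and for transformer layers 0..num_frozen_layers-1."""
    if param_name.startswith("embeddings."):
        return False
    match = _LAYER.match(param_name)
    if match is not None:
        return int(match.group(1)) >= num_frozen_layers
    return True
```

In PyTorch one might then loop over `model.named_parameters()` and set `param.requires_grad = is_trainable(name)`; this is a sketch of the freezing rule, not the authors' training code.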

For the image transformation family $\mathcal{T}$ from which $t_v$ is sampled, we use sequential applications of five random transformations: cropping, horizontal flipping, affine transformation, color jittering and Gaussian blur. Different from recent work on contrastive visual learning (Chen et al., 2020a,b), we apply only brightness and contrast adjustments in color jittering, due to the monochrome nature of the medical images. For the text transformation function $t_u$, we apply a simple uniform sampling of a sentence from the input document $x_u$ (i.e., $\tilde{x}_u$ is a randomly sampled sentence from $x_u$ for each minibatch). We did not use a more aggressive transformation mainly because sampling at the sentence level helps preserve the semantic meaning of the sampled spans.
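The text transformation $t_u$ amounts to choosing one sentence of the report uniformly at random each time a pair is drawn. The sketch below uses a deliberately naive, hypothetical regex splitter (break after `.`, `!` or `?` followed by whitespace); real clinical reports with abbreviations or numbered findings would need a proper sentence segmenter.

```python
import random
import re
from typing import List


def split_sentences(report: str) -> List[str]:
    """Naive splitter (an assumption): break after '.', '!' or '?' plus whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", report.strip())
    return [p for p in parts if p]


def sample_sentence(report: str, rng: random.Random) -> str:
    """t_u: draw one sentence uniformly at random as the text view x~_u."""
    sentences = split_sentences(report)
    if not sentences:
        raise ValueError("report contains no sentences")
    return rng.choice(sentences)
```

Passing an explicit `random.Random` instance keeps the sampling reproducible across runs.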

An alternative to using the sampled view $\tilde{x}_v$ from $x_v$ as input to the encoder is to directly use $x_v$, or to fuse all images for each study when multiple $x_v$ instances are available (e.g., images from multiple angles). We empirically found in our preliminary experiments that using the sampled view $\tilde{x}_v$ leads to better pretraining results. We conjecture that the use of $\tilde{x}_v$ acts as a form of data augmentation for the visual modality, which increases the effective number of unique image-text pairs that the model sees at pretraining time and thus leads to better performance.

4. Experiments

We now introduce the paired datasets that we used for contrastive pretraining, the down-

stream tasks and datasets for evaluation, and the baseline methods that we compare against.


4.1. Data for Pretraining

We evaluate ConVIRT by pretraining two separate image encoders using two separate

image-text datasets (see Appendix A for full pretraining details):

• Chest image encoder: We use version 2 of the public MIMIC-CXR database (Johnson et al., 2019), which is a collection of chest radiograph images paired with their textual reports and has since become a standard resource for studying multi-modal modeling of medical images. After preprocessing, this dataset contains a total of about 217k image-text pairs, with each pair containing an average of 1.7 images and 6.0 sentences.

• Bone image encoder: We obtain a collection of musculoskeletal (i.e., bone) image-text pairs from the Rhode Island Hospital system. After chest radiographs, musculoskeletal images constitute the second most common type of radiograph images in a typical hospital. This dataset contains a total of 48k image-text pairs, with each pair containing an average of 2.5 images and 8.0 sentences.

4.2. Evaluation Tasks & Data

We evaluate our pretrained image encoders on three medical imaging tasks: image classification, zero-shot image-image retrieval and zero-shot text-image retrieval.

Image Classification. We evaluate our pretrained image encoders on four representative medical image classification tasks: 1) RSNA Pneumonia Detection (Wang et al., 2017; Shih et al., 2019), which involves binary classification of a chest radiograph image into either a pneumonia or a normal category; 2) CheXpert image classification (Irvin et al., 2019), which involves multi-label binary classification of a chest image for five individual labels, i.e., atelectasis, cardiomegaly, consolidation, edema and pleural effusion; 3) COVIDx (Wang and Wong, 2020), which involves multi-class chest image classification into three categories (COVID19, non-COVID pneumonia or normal); and 4) MURA bony abnormality detection (Rajpurkar et al., 2018a), which involves binary classification of a musculoskeletal image into abnormal or normal. We report test accuracy for COVIDx given its balanced test set, and report the standard area under the receiver operating characteristic curve (AUC) metric for other tasks.
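As a reminder of what the reported numbers measure, the sketch below computes ROC AUC through its rank interpretation (the probability that a randomly chosen positive image scores higher than a randomly chosen negative one, with ties counting one half), averages per-label AUCs for a multi-label task such as CheXpert (consistent with the "average AUC" reported for CheXpert later in this section), and computes plain accuracy for COVIDx. It is a quadratic-time pure-Python illustration with hypothetical function names, not the evaluation code used for these experiments.

```python
from typing import Sequence


def binary_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC = P(random positive outscores random negative); ties count 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mean_label_auc(label_rows: Sequence[Sequence[int]], score_rows: Sequence[Sequence[float]]) -> float:
    """Multi-label case (e.g. five CheXpert labels): AUC per label column, then the mean."""
    n_labels = len(label_rows[0])
    aucs = [
        binary_auc([row[j] for row in label_rows], [row[j] for row in score_rows])
        for j in range(n_labels)
    ]
    return sum(aucs) / n_labels


def accuracy(labels: Sequence[int], predictions: Sequence[int]) -> float:
    """Multi-class accuracy, as reported for the balanced COVIDx test set."""
    return sum(int(y == p) for y, p in zip(labels, predictions)) / len(labels)
```

On real test sets, a library routine such as scikit-learn's `roc_auc_score` computes the same quantity far more efficiently.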

Following previous work (Hénaff et al., 2020; Chen et al., 2020a; He et al., 2020), for all tasks, we evaluate each pretrained image encoder under two settings: a linear classification setting, where the pretrained CNN weights are frozen and only a linear classification head is trained for the task; and a fine-tuning setting, where both the CNN weights and the linear head are fine-tuned. The two settings complement each other for evaluation purposes: while the linear setting directly evaluates the quality of the extracted image features with the pretrained CNN, the fine-tuning setting more closely resembles how the pretrained CNN weights are used in practical applications.
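The two settings differ in which parameters receive updates. In the linear setting the encoder is frozen, so its features can be computed once and only a linear head is fit. The pure-Python sketch below shows that case for a binary task, using a logistic-regression head trained by full-batch gradient descent; `frozen_encoder` is a hypothetical stand-in for the pretrained CNN, and the learning rate and epoch count are arbitrary illustrative values, not the settings used in the experiments.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def train_linear_probe(
    frozen_encoder: Callable[[object], Vector],
    images: Sequence[object],
    labels: Sequence[int],
    lr: float = 0.5,
    epochs: int = 200,
) -> Tuple[Vector, float]:
    """Fit only a logistic-regression head on features from a frozen encoder."""
    # The encoder is never updated, so its features are extracted once up front.
    feats = [frozen_encoder(x) for x in images]
    dim, n = len(feats[0]), len(feats)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for f, y in zip(feats, labels):
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            err = 1.0 / (1.0 + math.exp(-z)) - y  # d(binary cross-entropy)/dz
            grad_w = [g + err * fi for g, fi in zip(grad_w, f)]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w: Vector, b: float, feature: Vector) -> int:
    return int(sum(wi * fi for wi, fi in zip(w, feature)) + b > 0.0)


# Toy usage: a one-dimensional "encoder" that returns its input as the feature.
toy_images = [-2.0, -1.0, 1.0, 2.0]
toy_labels = [0, 0, 1, 1]
w, b = train_linear_probe(lambda x: [x], toy_images, toy_labels)
```

In the fine-tuning setting, gradients would also flow into the encoder, so features could no longer be cached and an autodiff framework would be needed; that case is not shown here.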

To further compare the data efficiency of different pretraining methods, for each setting we evaluate the image encoders with 1%, 10% and all training data, respectively (except for the COVIDx task, where we omit the 1% setting due to the scarcity of training data). To control the variance in results, for all settings and models, we report average results over 5 independent training runs. We include further dataset and training details in Appendix B.
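A minimal sketch of this label-budget protocol: draw a 1% or 10% subset of the training set, train and evaluate, and average the metric over five seeds. `train_and_evaluate` is a hypothetical callback standing in for a full training run. The text above does not say whether the same subset is reused across the five runs; this sketch draws a fresh subset per seed, which is an assumption.

```python
import random
from statistics import mean
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def subsample(train_set: Sequence[T], fraction: float, seed: int) -> List[T]:
    """Draw a label-budget subset (e.g. 1% or 10%) without replacement."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    k = max(1, round(len(train_set) * fraction))
    return random.Random(seed).sample(list(train_set), k)


def average_over_runs(
    train_and_evaluate: Callable[[List[T], int], float],
    train_set: Sequence[T],
    fraction: float,
    n_runs: int = 5,
) -> float:
    """Mean test metric over independent runs, each with its own seed."""
    scores = [
        train_and_evaluate(subsample(train_set, fraction, seed), seed)
        for seed in range(n_runs)
    ]
    return mean(scores)
```

Seeding each run explicitly keeps the subsets reproducible, so every pretraining method can be evaluated on identical label budgets.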

Zero-shot Image-image Retrieval. This evaluation is similar to the conventional content-based image retrieval setting in which we search for images of a particular category using a representative query image. For evaluation, a group of query images and a larger collection of candidate images, each with a categorical label, are given to a pretrained CNN encoder. We encode each query and candidate image with this encoder, and then for each query, rank all candidates by their cosine similarities to the query in descending order. Since a widely-used annotated benchmark for this setting is not available, we create our own dataset by re-using existing annotations in the CheXpert dataset (Irvin et al., 2019) and additional expert annotations from a board-certified radiologist. The resulting dataset covers 8 different chest abnormality categories, each with 10 expert-annotated query images and 200 candidate images. We include the detailed collection and annotation procedure in Appendix C, and refer to this dataset as the CheXpert 8×200 Retrieval Dataset. We focus our evaluation on retrieval precision, and evaluate our models with Precision@k metrics (the fraction of the top-k ranked candidates that share the query's category) where k = 5, 10, 50.
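The ranking and scoring described above can be sketched in a few lines of pure Python. The embeddings are assumed to be precomputed by the pretrained encoder; Precision@k is computed per query and then averaged over queries. Function names are illustrative.

```python
import math
from typing import Hashable, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def precision_at_k(
    query: Vector,
    query_label: Hashable,
    candidates: Sequence[Vector],
    candidate_labels: Sequence[Hashable],
    k: int,
) -> float:
    """Rank candidates by cosine similarity (descending) and score the top k."""
    ranked = sorted(range(len(candidates)), key=lambda i: cosine(query, candidates[i]), reverse=True)
    hits = sum(1 for i in ranked[:k] if candidate_labels[i] == query_label)
    return hits / k


def mean_precision_at_k(queries, query_labels, candidates, candidate_labels, k: int) -> float:
    scores = [precision_at_k(q, y, candidates, candidate_labels, k) for q, y in zip(queries, query_labels)]
    return sum(scores) / len(scores)


# Toy embeddings: two categories "a" and "b".
candidates = [[1.0, 0.1], [0.0, 1.0], [0.9, 0.2], [-1.0, 0.0]]
candidate_labels = ["a", "b", "a", "b"]
p_at_2 = precision_at_k([1.0, 0.0], "a", candidates, candidate_labels, k=2)
```

The text-image setting can reuse `precision_at_k` unchanged, with the query embedding produced by the text encoder instead of the image encoder. For 1,600 candidates one would normally L2-normalize all embeddings once and use a single matrix product rather than recomputing norms per comparison.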

Zero-shot Text-image Retrieval. This setting is similar to the image-image retrieval setting, but instead of using query images, we retrieve images of a particular category with textual queries. For this purpose, we ask a radiologist to write 5 diverse and representative textual descriptions for each of the 8 abnormality categories for the same CheXpert 8×200 candidate images (see Appendix D for details). At test time, for each query we encode its text with the learned text encoder $f_u$ and then retrieve from the candidate images in a similar way. This setting evaluates not only the quality of the learned image representations, but also the alignment between the text representations and the image representations. We again use Precision@k metrics where k = 5, 10, 50.

4.3. Baseline Methods

We compare ConVIRT against the following standard or competitive initialization methods:

• Random Init.: For all tasks we initialize the ResNet50 with its default random initialization.

• ImageNet Init.: We use CNN weights pretrained on ImageNet (Russakovsky et al., 2015), which remains a dominant initialization approach for medical imaging work (Raghu et al., 2019).

• Caption-LSTM: We further pretrain the ImageNet-initialized CNN weights with an image captioning task using the standard CNN-LSTM with attention model (Xu et al., 2015). We train the model to decode the paired medical report text from the encoded image representations. Compared to the random or ImageNet initializations, this is an “in-domain” initialization baseline which uses the paired text data for representation learning.

• Caption-Transformer: We use a CNN-Transformer-based captioning model (Cornia et al., 2020) for caption-based pretraining, which recently achieved state-of-the-art results on the COCO image captioning benchmark (Lin et al., 2014).

• Contrastive-Binary-Loss: This baseline differs from ConVIRT by contrasting the paired image and text representations with a binary classification head, as is widely done in visual-linguistic pretraining work (Tan and Bansal, 2019; Su et al., 2020). For each input pair, we first project the encoder outputs $h_v$ and $h_u$ into the same dimension with linear layers, concatenate them, and use an MLP network to predict a binary probability of whether the input is a real or a “fake” pair, which we train with a binary cross-entropy loss. During training, for each $(x_v, x_u)$ pair in the training set, we construct a “fake” pair by replacing $x_u$ with a randomly sampled one from the dataset. We expect that the binary classification task requires the encoder to learn reasonable representations of the input images, and therefore is a stronger in-domain initialization baseline.
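The fake-pair construction and training signal of the Contrastive-Binary-Loss baseline can be sketched as follows. The encoders, projection layers and MLP are omitted, and `build_binary_examples` and `binary_cross_entropy` are hypothetical helpers written for this illustration.

```python
import math
import random
from typing import List, Sequence, Tuple, TypeVar

ImageT = TypeVar("ImageT")
TextT = TypeVar("TextT")


def build_binary_examples(
    pairs: Sequence[Tuple[ImageT, TextT]], rng: random.Random
) -> List[Tuple[ImageT, TextT, int]]:
    """Emit each real (x_v, x_u) pair with label 1 plus one 'fake' pair with label 0.

    The fake pair keeps the image and swaps in the text of a randomly sampled pair.
    Following the literal description, the sampled text is not prevented from being
    the true one, so a small fraction of 'fake' pairs may actually be real.
    """
    examples = []
    for image, text in pairs:
        examples.append((image, text, 1))
        _, random_text = rng.choice(pairs)
        examples.append((image, random_text, 0))
    return examples


def binary_cross_entropy(prob_real: float, label: int, eps: float = 1e-12) -> float:
    """BCE on the MLP's predicted probability that the pair is real."""
    p = min(max(prob_real, eps), 1.0 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))
```

Unlike a contrastive objective, each example here is scored in isolation, with no explicit similarity between image and text vectors, which is the property the retrieval discussion in Section 5.2 points to.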

For fair comparison, for all baselines that require paired image-text data, we use the same datasets as in our contrastive pretraining. For the captioning-based methods, we always use the model checkpoints that achieve the best CIDEr score (Vedantam et al., 2015) on a held-out validation set.

5. Results

5.1. Classification Tasks

Linear Classification. We present all linear classification results for the four tasks in Table 1(a). We find that compared to random initialization, ImageNet initialization provides markedly better representations, despite being pretrained on a very different domain of images; in-domain initialization methods that use paired image-text data further improve over ImageNet initialization in almost all settings. Among the in-domain initialization methods, our proposed ConVIRT pretraining achieves the best overall results in all settings. Notably, on three out of the four tasks (all tasks that have a 1% setting), with only 1% training data ConVIRT achieves classification results better than the default ImageNet initialization with 100% training data, highlighting the high quality of the learned representations from ConVIRT.

Fine-tuning. We show the fine-tuning evaluation results in Table 1(b). Similar to the linear setting, we find that: 1) ImageNet initialization is again better than random initialization, though with smaller margins; 2) all in-domain initialization methods are better than the popular ImageNet initialization in most settings; and 3) our proposed ConVIRT pretraining again achieves the best overall results in 10 out of the 11 settings, the exception being CheXpert with all training data used, where the result of ConVIRT is similar to that of Caption-Transformer. Most notably, on all datasets, with only 10% labeled training data ConVIRT achieves classification results that are better than or close to those of ImageNet initialization with 100% training data.
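The two data-efficiency claims above can be checked directly against Table 1. The snippet below transcribes the relevant cells and computes ConVIRT's margin over ImageNet initialization trained on all labels; positive values mean ConVIRT with less labeled data scores higher.

```python
# Values transcribed from Table 1; COVIDx has no 1% setting.
LINEAR_IMAGENET_ALL = {"RSNA": 86.9, "CheXpert": 81.0, "COVIDx": 88.6, "MURA": 79.0}
LINEAR_CONVIRT_1PCT = {"RSNA": 90.7, "CheXpert": 85.9, "MURA": 81.2}
FINETUNE_IMAGENET_ALL = {"RSNA": 90.8, "CheXpert": 87.6, "COVIDx": 90.3, "MURA": 87.0}
FINETUNE_CONVIRT_10PCT = {"RSNA": 91.5, "CheXpert": 88.1, "COVIDx": 90.3, "MURA": 86.5}


def gaps(low_label: dict, full_label: dict) -> dict:
    """ConVIRT-with-less-data minus ImageNet-with-all-data, per task, in score points."""
    return {task: round(score - full_label[task], 1) for task, score in low_label.items()}


linear_gaps = gaps(LINEAR_CONVIRT_1PCT, LINEAR_IMAGENET_ALL)
finetune_gaps = gaps(FINETUNE_CONVIRT_10PCT, FINETUNE_IMAGENET_ALL)
# linear_gaps   -> {'RSNA': 3.8, 'CheXpert': 4.9, 'MURA': 2.2}
# finetune_gaps -> {'RSNA': 0.7, 'CheXpert': 0.5, 'COVIDx': 0.0, 'MURA': -0.5}
```

All linear 1% margins are positive, and the fine-tuning 10% margins range from -0.5 (MURA) to +0.7 (RSNA), matching "better than or close to".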

We also notice that our conclusion on ImageNet versus random initialization differs from that of Raghu et al. (2019): while they showed comparable results from the two strategies, we find that ImageNet initialization is still superior to random initialization in most results, justifying its popularity. Upon closer examination, we conjecture that this is likely due to under-optimization of their models: while our ResNet50 with random initialization achieves an average AUC of 85.8 on the CheXpert dataset, their ResNet50 model only achieved 83.5 AUC on the same evaluation set.


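(a) Linear classification

| Method | RSNA (AUC) 1% | RSNA (AUC) 10% | RSNA (AUC) all | CheXpert (AUC) 1% | CheXpert (AUC) 10% | CheXpert (AUC) all | COVIDx (Accu.) 10% | COVIDx (Accu.) all | MURA (AUC) 1% | MURA (AUC) 10% | MURA (AUC) all |
|---|---|---|---|---|---|---|---|---|---|---|---|
| *General initialization methods* | | | | | | | | | | | |
| Random Init. | 55.0 | 67.3 | 72.3 | 58.2 | 63.7 | 66.2 | 69.2 | 73.5 | 50.9 | 56.8 | 62.0 |
| ImageNet Init. | 82.8 | 85.4 | 86.9 | 75.7 | 79.7 | 81.0 | 83.7 | 88.6 | 63.8 | 74.1 | 79.0 |
| *In-domain initialization methods* | | | | | | | | | | | |
| Caption-Transformer | 84.8 | 87.5 | 89.5 | 77.2 | 82.6 | 83.9 | 80.0 | 89.0 | 66.5 | 76.3 | 81.8 |
| Caption-LSTM | 89.8 | 90.8 | 91.3 | 85.2 | 85.3 | 86.2 | 84.5 | **91.7** | 75.2 | 81.5 | 84.1 |
| Contrastive-Binary-Loss | 88.9 | 90.5 | 90.8 | 84.5 | 85.6 | 85.8 | 80.5 | 90.8 | 76.8 | 81.7 | 85.3 |
| ConVIRT (Ours) | **90.7** | **91.7** | **92.1** | **85.9** | **86.8** | **87.3** | **85.9** | **91.7** | **81.2** | **85.1** | **87.6** |

(b) Fine-tuning

| Method | RSNA (AUC) 1% | RSNA (AUC) 10% | RSNA (AUC) all | CheXpert (AUC) 1% | CheXpert (AUC) 10% | CheXpert (AUC) all | COVIDx (Accu.) 10% | COVIDx (Accu.) all | MURA (AUC) 1% | MURA (AUC) 10% | MURA (AUC) all |
|---|---|---|---|---|---|---|---|---|---|---|---|
| *General initialization methods* | | | | | | | | | | | |
| Random Init. | 71.9 | 82.2 | 88.5 | 70.4 | 81.1 | 85.8 | 75.4 | 87.7 | 56.8 | 61.6 | 79.1 |
| ImageNet Init. | 83.1 | 87.3 | 90.8 | 80.1 | 84.8 | 87.6 | 84.4 | 90.3 | 72.1 | 81.8 | 87.0 |
| *In-domain initialization methods* | | | | | | | | | | | |
| Caption-Transformer | 86.3 | 89.2 | 92.1 | 81.5 | 86.4 | **88.2** | 88.3 | 92.3 | 75.2 | 83.2 | 87.6 |
| Caption-LSTM | 87.2 | 88.0 | 91.0 | 83.5 | 85.8 | 87.8 | 83.8 | 90.8 | 78.7 | 83.3 | 87.8 |
| Contrastive-Binary-Loss | 87.7 | 89.9 | 91.2 | 86.2 | 86.1 | 87.7 | 89.5 | 90.5 | 80.6 | 84.0 | 88.4 |
| ConVIRT (Ours) | **88.8** | **91.5** | **92.7** | **87.0** | **88.1** | 88.1 | **90.3** | **92.4** | **81.3** | **86.5** | **89.0** |

Table 1: Results for the medical image classification tasks: (a) linear classification; (b) fine-tuning setting. All results are averaged over 5 independent models. Best results for each setting are in boldface. The COVIDx 1% setting is omitted due to the scarcity of labels in COVIDx.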

5.2. Retrieval Tasks

We present the zero-shot image-image and text-image retrieval results in Table 2. For the image-image retrieval setting, we present additional results from fine-tuning our pretrained model on all CheXpert training data, and use them as “upper bounds” of the results obtained from the use of supervised labels. We find that: 1) using ImageNet weights in a zero-shot image retrieval setting is only marginally better than random guessing; 2) all in-domain pretrained CNN weights achieve much better retrieval performance than ImageNet weights; and 3) our proposed ConVIRT pretraining achieves the best retrieval results among the zero-shot methods on all metrics. While Contrastive-Binary-Loss performs notably better than other baselines in image-image retrieval, its text-image retrieval results are far below those of ConVIRT pretraining. We conjecture that the lack of an explicit similarity-based loss function in the Contrastive-Binary-Loss baseline results in misaligned representations in the image and text space, leading to poor results in text-image retrieval.
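The 12.5 reported for Random in Table 2 follows from the dataset design: with 8 categories of 200 candidates each, a uniformly random ranking places a same-category candidate in any top-k slot with probability 200/1600 = 1/8, so the expected Precision@k is 12.5% for every k. The sketch below computes that value exactly and checks it with a small simulation; the trial count and seed are arbitrary.

```python
import random
from fractions import Fraction

N_CATEGORIES = 8
CANDIDATES_PER_CATEGORY = 200


def expected_random_precision() -> Fraction:
    """Chance that any single top-k slot holds a same-category candidate under a random ranking."""
    return Fraction(CANDIDATES_PER_CATEGORY, N_CATEGORIES * CANDIDATES_PER_CATEGORY)


def simulated_random_precision(k: int, trials: int = 2000, seed: int = 0) -> float:
    """Monte Carlo estimate of Precision@k when candidates are ranked uniformly at random."""
    rng = random.Random(seed)
    labels = [c for c in range(N_CATEGORIES) for _ in range(CANDIDATES_PER_CATEGORY)]
    total = 0.0
    for _ in range(trials):
        query_label = rng.randrange(N_CATEGORIES)
        top_k = rng.sample(labels, k)  # the first k candidates of a random ranking
        total += sum(1 for label in top_k if label == query_label) / k
    return total / trials
```

Because the categories are balanced, this chance level is the same for Prec@5, Prec@10 and Prec@50, which is why the Random row is constant.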

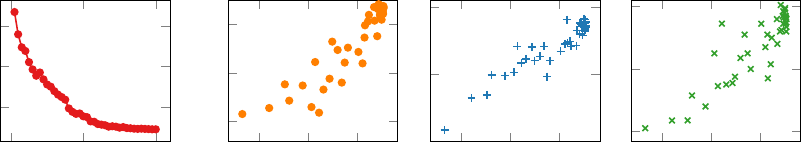

To understand how well ConVIRT pretraining helps separate images from different abnormality categories in its encoding space, in Figure 3 we present t-SNE plots (Maaten and Hinton, 2008) of candidate images in the CheXpert 8×200 dataset for five selected categories, from the ImageNet pretrained CNN encoder and the ConVIRT pretrained encoder. It is worth noting that clustering images in our setting is much more challenging than in the general object classification setting due to the high inter-class similarity of the medical images. Nevertheless, we find that ConVIRT pretraining achieves a better clustering of the images in the t-SNE plots.

| Method | Image-Image Prec@5 | Image-Image Prec@10 | Image-Image Prec@50 | Text-Image Prec@5 | Text-Image Prec@10 | Text-Image Prec@50 |
|---|---|---|---|---|---|---|
| Random | 12.5 | 12.5 | 12.5 | 12.5 | 12.5 | 12.5 |
| ImageNet | 14.8 | 14.4 | 15.0 | – | – | – |
| *In-domain initialization methods* | | | | | | |
| Caption-Transformer | 29.8 | 28.0 | 23.0 | – | – | – |
| Caption-LSTM | 34.8 | 32.9 | 28.1 | – | – | – |
| Contrastive-Binary-Loss | 38.8 | 36.6 | 29.7 | 15.5 | 14.5 | 13.7 |
| ConVIRT (Ours) | 45.0 | 42.9 | 35.7 | 60.0 | 57.5 | 48.8 |
| *Fine-tuned* | | | | | | |
| ConVIRT + CheXpert Supervised | 56.8 | 56.3 | 48.9 | – | – | – |

Table 2: Zero-shot image-image and text-image retrieval results on the CheXpert 8×200 dataset. Random shows results from a random guess; ConVIRT + CheXpert Supervised shows results from further fine-tuning the pretrained weights with supervised training data. Text-image retrieval results are not obtained for some methods due to the lack of text encoders.

Figure 3: t-SNE visualizations of encoded image representations from different pretraining methods: (a) ImageNet pretraining; (b) ConVIRT pretraining.

6. Analysis and Discussion

Comparisons to Image-only Contrastive Learning. ConVIRT outperforms the baselines above, but an important question remains as to how it compares against existing image-only contrastive learning methods. We study this by running two such popular methods, SimCLR (Chen et al., 2020a) and MoCo v2 (Chen et al., 2020b), on the same collection of images that we used in our pretraining. We present the results in Table 3 and include training details in Appendix E. We find that compared to ImageNet initialization, both contrastive methods lead to marginal to moderate improvements on the classification and retrieval tasks. However, our training strategy substantially outperforms both methods on all tasks, demonstrating its effective use of information from the paired text data. This efficient use of data is critical to the healthcare domain because medical data are often limited in size but come with paired text data and even user metadata.

| Method | RSNA (Linear, 1%) | CheXpert (Linear, 1%) | Image-Image (Prec@10) |
|---|---|---|---|
| ImageNet | 82.8 | 75.7 | 14.4 |
| SimCLR (Chen et al., 2020a) | 86.3 | 77.4 | 17.6 |
| MoCo v2 (Chen et al., 2020b) | 86.6 | 81.3 | 20.6 |
| ConVIRT | 90.7 | 85.9 | 42.9 |

Table 3: Comparisons of ConVIRT to image-only contrastive learning. For RSNA and CheXpert we show the AUC under linear classification with 1% training data.

Figure 4: Saliency maps on sampled images for 4 abnormality categories in the CheXpert dataset. For each image we present maps for ImageNet, SimCLR, MoCo v2 and our ConVIRT initializations. Ground truth regions that are indicative of the abnormalities are shown as red boxes in the original images on the right, and are seen to most closely match the regions found by ConVIRT.


[Figure 5 panels: (a) Pretraining Loss vs. training epoch (0 to 200); (b) RSNA Linear (1%, AUC), (c) Image-image (P@10) and (d) Text-image (P@10), each plotted against −L.]

Figure 5: (a) shows the pretraining validation loss at different epochs; (b)-(d) show the correlation between the pretraining loss and the performance on three end tasks. For (a) the x-axis shows the training epoch number, and for (b)-(d) the x-axis shows the negative value of the pretraining loss (i.e., −L) on a held-out validation set.

To understand the representational differences behind this performance gap, for all four initialization methods, we visualize in Figure 4 the saliency maps (Simonyan et al., 2014) corresponding to the correct class on sampled images from the CheXpert dataset. Models for all initialization methods are trained with 1% CheXpert training data under the linear classification setting (with pretrained CNN weights frozen). We find that ImageNet pretraining has led to models that focus on trivial visual features that are mostly irrelevant to the task, and that the model with ConVIRT pretrained weights focuses on much more relevant areas than those with SimCLR and MoCo v2 pretraining, suggesting more effective representation learning. For example, for atelectasis, while the ConVIRT model correctly focuses on the bottom of the lung regions, the SimCLR model has much more scattered focus and the MoCo model incorrectly focuses on the heart region.
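Saliency maps in the sense of Simonyan et al. (2014) score each pixel by the magnitude of the gradient of the class score with respect to that pixel, taking the maximum over color channels. The sketch below estimates that gradient with central finite differences so it runs without an autodiff framework; in practice a single backward pass through the CNN gives the same gradient far more cheaply. `class_score` is a hypothetical callable standing in for the trained model's score for the correct class.

```python
import copy
from typing import Callable, List

Image = List[List[List[float]]]  # height x width x channels


def saliency_map(class_score: Callable[[Image], float], image: Image, eps: float = 1e-3) -> List[List[float]]:
    """Per-pixel saliency: max over channels of |dS_c/dx|, estimated by central differences."""
    height, width, channels = len(image), len(image[0]), len(image[0][0])
    saliency = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            grads = []
            for c in range(channels):
                plus, minus = copy.deepcopy(image), copy.deepcopy(image)
                plus[i][j][c] += eps
                minus[i][j][c] -= eps
                grads.append(abs(class_score(plus) - class_score(minus)) / (2 * eps))
            saliency[i][j] = max(grads)
    return saliency


# Toy usage: a linear "model" whose class score depends on three pixel values.
def toy_score(img: Image) -> float:
    return 3.0 * img[0][0][0] - 2.0 * img[1][1][0] + 0.5 * img[0][1][1]


blank = [[[0.0, 0.0] for _ in range(2)] for _ in range(2)]
sal = saliency_map(toy_score, blank)
```

For a linear score the map simply recovers the absolute weights per pixel; for a CNN the gradient depends on the input, which is what lets the maps in Figure 4 differ across initializations.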

Correlation Between Contrastive Loss and End Task Performance. To understand the relation between a model's performance on the ConVIRT pretraining task and its performance on the downstream tasks, we ran an analysis where, every 5 epochs during pretraining, we transferred the pretrained checkpoint to the downstream tasks and evaluated its performance. The pretraining was run for a total of 200 epochs, yielding 40 points with varying validation loss and end task results. Figure 5 presents the models' validation loss on the pretraining task and their performance on the RSNA 1% data linear evaluation and the two retrieval tasks. For all three tasks, we find a clear positive correlation between the pretraining performance and the end task performance. This corroborates that by learning with the ConVIRT objective, the image encoder learns gradually improved representations for the end tasks, and suggests that further improvement on the pretraining task may have a positive impact on end task performance.
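Checkpoints every 5 epochs over 200 epochs give the 40 points in Figure 5. The sketch below enumerates those epochs (assuming the first evaluation happens at epoch 5) and measures the relationship between −L and an end-task metric with a Pearson coefficient. The choice of Pearson, rather than for example Spearman rank correlation, is an assumption: the text reports a clear positive correlation without naming a statistic.

```python
import math
from typing import Sequence

PRETRAIN_EPOCHS = 200
EVAL_EVERY = 5
checkpoint_epochs = list(range(EVAL_EVERY, PRETRAIN_EPOCHS + 1, EVAL_EVERY))  # 5, 10, ..., 200


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def loss_metric_correlation(val_losses: Sequence[float], end_task_scores: Sequence[float]) -> float:
    """Correlate -L (the x-axis of Figure 5(b)-(d)) with an end-task metric."""
    return pearson([-loss for loss in val_losses], end_task_scores)
```

Negating the loss before correlating means a positive coefficient corresponds to "lower pretraining loss, better end task", the direction plotted in Figure 5.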

Hyperparameter Analysis. We run experiments to study the impact of hyperparameters, and have the following observations. First, similar to previous work on image-only contrastive learning (Chen et al., 2020a; He et al., 2020), the pretraining results are most sensitive to the choice of the temperature value τ. As shown in Table 4, using a much lower temperature (τ = 0.01) than the default (τ = 0.1) hurts the retrieval results, and a much higher temperature (τ = 1) notably hurts the performance on all tasks. Second, unlike previous work, changing the batch size does not lead to substantial changes in the classification results. Finally, replacing the non-linear projection heads in $g_v$ and $g_u$ with linear layers moderately hurts the retrieval results, suggesting worse representations. However, this is again not notably reflected in the RSNA classification results.

| Settings | RSNA Linear (1%, AUC) | Image-Image (Prec@10) | Text-Image (Prec@10) |
|---|---|---|---|
| ConVIRT (default) | 90.7 | 42.9 | 57.5 |
| τ = 0.01 | 90.7 | 40.5 | 21.0 |
| τ = 1 | 89.6 | 25.0 | 31.0 |
| bs = 16 | 90.3 | 40.0 | 55.8 |
| bs = 128 | 90.3 | 39.3 | 50.3 |
| linear proj. | 90.6 | 40.8 | 55.8 |

Table 4: Evaluation results with different hyperparameters, for the RSNA 1% data linear evaluation, image-image retrieval and text-image retrieval tasks. bs represents batch size and linear proj. represents using linear projection layers for $g_v$ and $g_u$. Our default model uses τ = 0.1, bs = 32 and non-linear projections.
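The temperature τ enters the contrastive objective by dividing every similarity before the softmax. The sketch below assumes a bidirectional InfoNCE-style form for the objective: cosine similarity between projected image vectors v and text vectors u, an image-to-text and a text-to-image term, mixed by a weight λ (`lam`). The exact form is defined in the method section and is only assumed here; the λ = 0.75 default is likewise an assumption of this sketch, while τ = 0.1 matches the default stated in the Table 4 caption.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def _l2_normalize(v: Vector) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _diagonal_infonce(similarities: List[List[float]], tau: float) -> List[float]:
    """Row-wise -log softmax(row / tau)[i]; the positive for row i sits in column i."""
    losses = []
    for i, row in enumerate(similarities):
        logits = [s / tau for s in row]
        top = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
        losses.append(log_sum_exp - logits[i])
    return losses


def bidirectional_contrastive_loss(
    v: Sequence[Vector], u: Sequence[Vector], tau: float = 0.1, lam: float = 0.75
) -> float:
    """Batch mean of lam * (image -> text) + (1 - lam) * (text -> image) terms.

    v[i] and u[i] are the projected image and text vectors of the i-th pair; every
    other text (image) in the batch serves as a negative for image (text) i.
    """
    v_n = [_l2_normalize(x) for x in v]
    u_n = [_l2_normalize(x) for x in u]
    # sim[i][k] = cosine similarity between image i and text k
    sim = [[sum(a * b for a, b in zip(vi, uk)) for uk in u_n] for vi in v_n]
    image_to_text = _diagonal_infonce(sim, tau)
    text_to_image = _diagonal_infonce([list(col) for col in zip(*sim)], tau)
    per_pair = [lam * a + (1.0 - lam) * b for a, b in zip(image_to_text, text_to_image)]
    return sum(per_pair) / len(per_pair)


eye = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
sharp = bidirectional_contrastive_loss(eye, eye, tau=0.1)  # perfectly aligned toy batch
flat = bidirectional_contrastive_loss(eye, eye, tau=1.0)
```

Each row's softmax runs over every text in the batch, so the batch size sets the number of negatives per image. A small τ sharpens the softmax so the hardest negatives dominate the loss, while a large τ flattens it; this may help explain why both τ = 0.01 and τ = 1 underperform the default in Table 4.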

Limitations. This work mainly focuses on comparing ConVIRT against conventional ImageNet initialization, image captioning-based initialization, and image-only contrastive learning approaches including SimCLR and MoCo to demonstrate the data efficiency and effectiveness of image-text pretraining. We did not compare our method against relevant subsequent studies that extended ConVIRT, such as LoVT (Müller et al., 2021) or GLoRIA (Huang et al., 2021), mainly because such comparisons are included in these studies.

7. Conclusion

We presented ConVIRT, an unsupervised method for learning medical visual representations from paired descriptive text. Our method relies on contrasting the image representations with the paired descriptive text via a bidirectional objective between the two modalities. On 4 medical image classification tasks and 2 image retrieval tasks, ConVIRT outperformed other strong in-domain initialization methods and led to representations of notably higher quality. Compared to ImageNet pretraining, ConVIRT is able to achieve a comparable level of classification accuracy with an order of magnitude less labeled data. This is especially critical for the healthcare domain, where data sparsity is an important issue, and the cross-modality pretraining in ConVIRT can be extended to other modalities of data in this domain. We thus hope that ConVIRT continues to inspire future work that makes more efficient use of multi-modal data for medical image understanding.


References

Michael David Abràmoff, Yiyue Lou, Ali Erginay, Warren Clarida, Ryan Amelon, James C Folk, and Meindert Niemeijer. Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning. Investigative Ophthalmology & Visual Science, 57(13):5200–5206, 2016.

Emily Alsentzer, John Murphy, William Boag, Wei-Hung Weng, Di Jindi, Tristan Naumann, and Matthew McDermott. Publicly available clinical BERT embeddings. In Proceedings of the 2nd Clinical Natural Language Processing Workshop, 2019.

Shekoofeh Azizi, Basil Mustafa, Fiona Ryan, Zachary Beaver, Jan Freyberg, Jonathan Deaton, Aaron Loh, Alan Karthikesalingam, Simon Kornblith, Ting Chen, et al. Big self-supervised models advance medical image classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2021.

Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey Hinton. A simple framework for contrastive learning of visual representations. In International Conference on Machine Learning (ICML), 2020a.

Xinlei Chen, Haoqi Fan, Ross Girshick, and Kaiming He. Improved baselines with momentum contrastive learning. arXiv preprint arXiv:2003.04297, 2020b.

Marcella Cornia, Matteo Stefanini, Lorenzo Baraldi, and Rita Cucchiara. Meshed-memory Transformer for image captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

Jeffrey De Fauw, Joseph R Ledsam, Bernardino Romera-Paredes, Stanislav Nikolov, Nenad Tomasev, Sam Blackwell, Harry Askham, Xavier Glorot, Brendan O’Donoghue, Daniel Visentin, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nature Medicine, 24(9):1342–1350, 2018.

Dina Demner-Fushman, Marc D Kohli, Marc B Rosenman, Sonya E Shooshan, Laritza Rodriguez, Sameer Antani, George R Thoma, and Clement J McDonald. Preparing a collection of radiology examinations for distribution and retrieval. Journal of the American Medical Informatics Association, 23(2):304–310, 2016.

Karan Desai and Justin Johnson. VirTex: Learning visual representations from textual annotations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), 2019.

Sedigheh Eslami, Gerard de Melo, and Christoph Meinel. Does CLIP benefit visual question answering in the medical domain as much as it does in the general domain? arXiv preprint arXiv:2112.13906, 2021.

Andre Esteva, Brett Kuprel, Roberto A Novoa, Justin Ko, Susan M Swetter, Helen M Blau, and Sebastian Thrun. Dermatologist-level classification of skin cancer with deep neural networks. Nature, 542(7639):115–118, 2017.

Jean-Bastien Grill, Florian Strub, Florent Altché, Corentin Tallec, Pierre Richemond, Elena Buchatskaya, Carl Doersch, Bernardo Avila Pires, Zhaohan Guo, Mohammad Gheshlaghi Azar, Bilal Piot, Koray Kavukcuoglu, Remi Munos, and Michal Valko. Bootstrap your own latent: A new approach to self-supervised learning. In Advances in Neural Information Processing Systems, 2020.

Varun Gulshan, Lily Peng, Marc Coram, Martin C Stumpe, Derek Wu, Arunachalam Narayanaswamy, Subhashini Venugopalan, Kasumi Widner, Tom Madams, Jorge Cuadros, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA, 316(22):2402–2410, 2016.

Tanmay Gupta, Arash Vahdat, Gal Chechik, Xiaodong Yang, Jan Kautz, and Derek Hoiem. Contrastive learning for weakly supervised phrase grounding. In Proceedings of the 16th European Conference on Computer Vision (ECCV), 2020.

Yan Han, Chongyan Chen, Ahmed Tewfik, Ying Ding, and Yifan Peng. Pneumonia detection on chest x-ray using radiomic features and contrastive learning. In 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI). IEEE, 2021.

Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

Kaiming He, Haoqi Fan, Yuxin Wu, Saining Xie, and Ross Girshick. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

Lars Heiliger, Anjany Sekuboyina, Bjoern Menze, Jan Egger, and Jens Kleesiek. Beyond medical imaging: A review of multimodal deep learning in radiology. TechRxiv preprint, 2022.

Olivier J Hénaff, Aravind Srinivas, Jeffrey De Fauw, Ali Razavi, Carl Doersch, SM Eslami, and Aaron van den Oord. Data-efficient image recognition with contrastive predictive coding. In International Conference on Machine Learning (ICML), 2020.

Shih-Cheng Huang, Liyue Shen, Matthew P Lungren, and Serena Yeung. GLoRIA: A multimodal global-local representation learning framework for label-efficient medical image recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2021.

Gabriel Ilharco, Rowan Zellers, Ali Farhadi, and Hannaneh Hajishirzi. Probing contextual language models for common ground with visual representations. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), 2021.

Jeremy Irvin, Pranav Rajpurkar, Michael Ko, Yifan Yu, Silviana Ciurea-Ilcus, Chris Chute, Henrik Marklund, Behzad Haghgoo, Robyn Ball, Katie Shpanskaya, et al. CheXpert: A large chest radiograph dataset with uncertainty labels and expert comparison. In Proceedings of the AAAI Conference on Artificial Intelligence, 2019.

Chao Jia, Yinfei Yang, Ye Xia, Yi-Ting Chen, Zarana Parekh, Hieu Pham, Quoc Le, Yun-Hsuan Sung, Zhen Li, and Tom Duerig. Scaling up visual and vision-language representation learning with noisy text supervision. In Proceedings of the 38th International Conference on Machine Learning, 2021.

Baoyu Jing, Pengtao Xie, and Eric Xing. On the automatic generation of medical imaging reports. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (ACL), 2018.

Alistair EW Johnson, Tom J Pollard, Seth J Berkowitz, Nathaniel R Greenbaum, Matthew P Lungren, Chih-ying Deng, Roger G Mark, and Steven Horng. MIMIC-CXR, a de-identified publicly available database of chest radiographs with free-text reports. Scientific Data, 6, 2019.

Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In The 2015 International Conference for Learning Representations, 2015.

Ruizhi Liao, Daniel Moyer, Miriam Cha, Keegan Quigley, Seth Berkowitz, Steven Horng, Polina Golland, and William M Wells. Multimodal representation learning via maximization of local mutual information. In International Conference on Medical Image Computing and Computer-Assisted Intervention, 2021.

Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C Lawrence Zitnick. Microsoft COCO: Common objects in context. In European Conference on Computer Vision (ECCV), 2014.

Guanxiong Liu, Tzu-Ming Harry Hsu, Matthew McDermott, Willie Boag, Wei-Hung Weng, Peter Szolovits, and Marzyeh Ghassemi. Clinically accurate chest X-ray report generation. In Machine Learning for Healthcare Conference, 2019.

Jiasen Lu, Dhruv Batra, Devi Parikh, and Stefan Lee. ViLBERT: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. In Advances in Neural Information Processing Systems, 2019.

Laurens van der Maaten and Geoffrey Hinton. Visualizing data using t-SNE. Journal of Machine Learning Research, 9(Nov):2579–2605, 2008.

Christopher D. Manning, Mihai Surdeanu, John Bauer, Jenny Finkel, Steven J. Bethard, and David McClosky. The Stanford CoreNLP natural language processing toolkit. In Association for Computational Linguistics (ACL) System Demonstrations, 2014.

Yasuhide Miura, Yuhao Zhang, Curtis P. Langlotz, and Dan Jurafsky. Improving factual completeness and consistency of image-to-text radiology report generation. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), 2021.

Philip M¨uller, Georgios Kaissis, Congyu Zou, and Daniel R¨uckert. Joint learning of localized

representations from medical images and reports. arXiv preprint arXiv:2112.02889, 2021.

Aaron van den Oord, Yazhe Li, and Oriol Vinyals. Representation learning with contrastive

predictive coding. arXiv preprint arXiv:1807.03748, 2018.

Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini

Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning

transferable visual models from natural language supervision. In International Conference

on Machine Learning, 2021.

Maithra Raghu, Chiyuan Zhang, Jon Kleinberg, and Samy Bengio. Transfusion: Under-

standing transfer learning for medical imaging. In Advances in Neural Information Pro-

cessing Systems, 2019.

Pranav Rajpurkar, Jeremy Irvin, Aarti Bagul, Daisy Ding, Tony Duan, Hershel Mehta,

Brandon Yang, Kaylie Zhu, Dillon Laird, Robyn L Ball, et al. MURA: Large dataset

for abnormality detection in musculoskeletal radiographs. In 1st Conference on Medical

Imaging with Deep Learning (MIDL), 2018a.

Pranav Rajpurkar, Jeremy Irvin, Robyn L Ball, Kaylie Zhu, Brandon Yang, Hershel Mehta,

Tony Duan, Daisy Ding, Aarti Bagul, Curtis P Langlotz, et al. Deep learning for chest ra-

diograph diagnosis: A retrospective comparison of the CheXNeXt algorithm to practicing

radiologists. PLoS Medicine, 15(11):e1002686, 2018b.

Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhi-

heng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, et al. ImageNet large

scale visual recognition challenge. International Journal of Computer Vision, 115(3):

211–252, 2015.

Mert Bulent Sariyildiz, Julien Perez, and Diane Larlus. Learning visual representations

with caption annotations. In Proceedings of the 16th European Conference on Computer

Vision (ECCV), 2020.

George Shih, Carol C Wu, Safwan S Halabi, Marc D Kohli, Luciano M Prevedello, Tessa S

Cook, Arjun Sharma, Judith K Amorosa, Veronica Arteaga, Maya Galperin-Aizenberg,

et al. Augmenting the National Institutes of Health chest radiograph dataset with expert

annotations of possible pneumonia. Radiology: Artificial Intelligence, 1(1):e180041, 2019.

Karen Simonyan, Andrea Vedaldi, and Andrew Zisserman. Deep inside convolutional net-

works: Visualising image classification models and saliency maps. In ICLR Workshop,

2014.

Hari Sowrirajan, Jingbo Yang, Andrew Y Ng, and Pranav Rajpurkar. MoCo pretraining

improves representation and transferability of chest X-ray models. In Medical Imaging

with Deep Learning, pages 728–744. PMLR, 2021.

Weijie Su, Xizhou Zhu, Yue Cao, Bin Li, Lewei Lu, Furu Wei, and Jifeng Dai. VL-BERT:

Pre-training of generic visual-linguistic representations. In International Conference on

Learning Representations (ICLR), 2020.

Hao Tan and Mohit Bansal. LXMERT: Learning cross-modality encoder representations from transformers. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), 2019.

Ramakrishna Vedantam, C Lawrence Zitnick, and Devi Parikh. CIDEr: Consensus-based image description evaluation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015.

Yen Nhi Truong Vu, Richard Wang, Niranjan Balachandar, Can Liu, Andrew Y Ng, and Pranav Rajpurkar. MedAug: Contrastive learning leveraging patient metadata improves representations for chest x-ray interpretation. In Machine Learning for Healthcare Conference, 2021.

Linda Wang and Alexander Wong. COVID-Net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-ray images. arXiv preprint arXiv:2003.09871, 2020.

Xiaosong Wang, Yifan Peng, Le Lu, Zhiyong Lu, Mohammadhadi Bagheri, and Ronald M Summers. ChestX-ray8: Hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017.

Xiaosong Wang, Yifan Peng, Le Lu, Zhiyong Lu, and Ronald M Summers. TieNet: Text-image embedding network for common thorax disease classification and reporting in chest X-rays. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

Xiaosong Wang, Ziyue Xu, Leo Tam, Dong Yang, and Daguang Xu. Self-supervised image-text pre-training with mixed data in chest x-rays. arXiv preprint arXiv:2103.16022, 2021.

Thomas Wolf, Lysandre Debut, Victor Sanh, Julien Chaumond, Clement Delangue, Anthony Moi, Pierric Cistac, Tim Rault, Remi Louf, Morgan Funtowicz, Joe Davison, Sam Shleifer, Patrick von Platen, Clara Ma, Yacine Jernite, Julien Plu, Canwen Xu, Teven Le Scao, Sylvain Gugger, Mariama Drame, Quentin Lhoest, and Alexander Rush. Transformers: State-of-the-art natural language processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP): System Demonstrations, 2020.

Kelvin Xu, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhutdinov, Rich Zemel, and Yoshua Bengio. Show, attend and tell: Neural image caption generation with visual attention. In International Conference on Machine Learning (ICML), 2015.

Chengxi Zang and Fei Wang. SCEHR: Supervised contrastive learning for clinical risk prediction using electronic health records. arXiv preprint arXiv:2110.04943, 2021.

Appendix A. Model Implementation and Pretraining Details

Dataset Preprocessing. For the MIMIC-CXR chest radiograph dataset, we use its publicly available JPG version.² For both the MIMIC-CXR chest dataset and the Rhode Island Hospital bone image datasets, we resize each image so that its larger side is 256 pixels. For the textual radiology report data, we first tokenize all reports with the default English tokenizer in version 4.0.0 of the CoreNLP library (Manning et al., 2014). Next, we keep only the Findings and Impression sections and remove all other sections. We then remove every image-text pair whose text is empty or has fewer than 3 tokens. This preprocessing procedure gives us about 217k image-text pairs for pretraining our chest image encoder and 48k pairs for pretraining our bone image encoder.
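The resizing and filtering rules above are simple enough to state in code. The following is a minimal Python sketch, not the authors' pipeline: the helper names `resized_dims` and `keep_pair` are hypothetical, the report text is assumed to already contain only the Findings and Impression sections, and whitespace splitting stands in for the CoreNLP tokenizer, so token counts can differ slightly from CoreNLP's (which also counts punctuation as tokens).

```python
from typing import List, Tuple

# Hypothetical helpers (not from the paper's code) for two preprocessing rules:
# resize so the larger side is 256 px, and drop pairs with fewer than 3 tokens.


def resized_dims(width: int, height: int, target: int = 256) -> Tuple[int, int]:
    """Return (new_width, new_height) with the larger side equal to `target`,
    preserving the aspect ratio and rounding to the nearest pixel."""
    scale = target / max(width, height)
    return max(1, round(width * scale)), max(1, round(height * scale))


def keep_pair(report_text: str, min_tokens: int = 3) -> bool:
    """Keep a pair only if its Findings + Impression text has >= min_tokens tokens.
    Whitespace splitting is an assumed stand-in for the CoreNLP tokenizer."""
    return len(report_text.split()) >= min_tokens


pairs: List[Tuple[str, str]] = [
    ("img_001.jpg", "No acute cardiopulmonary process."),
    ("img_002.jpg", ""),
    ("img_003.jpg", "Clear."),
]
kept = [name for name, text in pairs if keep_pair(text)]
print(kept)                        # ['img_001.jpg']
print(resized_dims(2544, 3056))    # (213, 256)
```

The image size and file names in the usage lines are made up for illustration.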

Image and Text Encoders. For the image encoder, we use the standard ResNet50 implementation provided by the torchvision library. For the text encoder, we use the BERT-base encoder offered by the Transformers library (Wolf et al., 2020) and initialize it with the ClinicalBERT model (Alsentzer et al., 2019) pretrained on the MIMIC clinical notes. We also experimented with training a specialized BERT encoder on a large collection of radiology notes, but found that it made no substantial difference in the pretraining results. At pretraining time, we freeze the embeddings and the first 6 layers of this BERT encoder, and fine-tune only the last 6 layers for our contrastive task.
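Freezing the embeddings and the lower six layers amounts to switching off gradients for a subset of named parameters. A minimal sketch follows, assuming Hugging Face-style BERT parameter names (e.g. `encoder.layer.3.attention.self.query.weight`). The helper `is_trainable` is hypothetical, keeping the pooler and any other non-layer parameters trainable is our assumption (the text only states what is frozen), and the checkpoint name in the comment is an assumption about which ClinicalBERT release corresponds to the one used.

```python
import re

# Matches Hugging Face-style BERT layer parameter names, e.g.
# "encoder.layer.3.attention.self.query.weight" or "bert.encoder.layer.3....".
_LAYER_RE = re.compile(r"(?:^|\.)encoder\.layer\.(\d+)\.")


def is_trainable(param_name: str, num_frozen_layers: int = 6) -> bool:
    """Hypothetical helper: False for embedding parameters and for the first
    `num_frozen_layers` transformer layers; True for everything else
    (keeping the pooler and other parameters trainable is an assumption)."""
    if param_name.startswith("embeddings.") or ".embeddings." in param_name:
        return False
    match = _LAYER_RE.search(param_name)
    if match is not None and int(match.group(1)) < num_frozen_layers:
        return False
    return True


# With torch and transformers installed, one would apply it roughly as:
#   from transformers import AutoModel
#   bert = AutoModel.from_pretrained("emilyalsentzer/Bio_ClinicalBERT")  # assumed checkpoint
#   for name, param in bert.named_parameters():
#       param.requires_grad = is_trainable(name)
print(is_trainable("encoder.layer.5.output.dense.bias"),
      is_trainable("encoder.layer.6.output.dense.bias"))  # False True
```

Deciding on parameter names rather than module objects keeps the rule independent of how the encoder is wrapped (with or without a `bert.` prefix).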

Other Hyperparameters. For contrastive learning, we use projection layers with an output dimension d = 512, a temperature value τ = 0.1, and a loss weight λ = 0.75. These hyperparameter settings were selected by comparing linear-evaluation validation scores on the RSNA image classification task obtained with the corresponding pretrained ResNet50 weights. For the image transformation family T, we adopt the implementations offered by the torchvision library.³
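The hyperparameters d, τ and λ parameterize the bidirectional contrastive objective. As a hedged illustration, the sketch below assumes the objective is a λ-weighted sum of an image-to-text and a text-to-image InfoNCE term over cosine similarities, averaged over a minibatch of N pairs, with λ weighting the image-to-text direction. It is written in dependency-free Python for readability rather than speed; in practice v and u would be the d = 512 projection outputs, and the function names are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity <a, b> = a.b / (|a| |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce_rows(sims: List[List[float]], tau: float) -> List[float]:
    """For each row i, return -log softmax(sims[i] / tau)[i] (positive on the diagonal)."""
    losses = []
    for i, row in enumerate(sims):
        logits = [s / tau for s in row]
        m = max(logits)  # log-sum-exp with max subtraction for numerical stability
        log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
        losses.append(log_denom - logits[i])
    return losses


def convirt_style_loss(v: Sequence[Vector], u: Sequence[Vector],
                       tau: float = 0.1, lam: float = 0.75) -> float:
    """v[i], u[i]: projected image and text embeddings of the i-th pair in a minibatch."""
    n = len(v)
    img_to_txt = [[cosine(v[i], u[k]) for k in range(n)] for i in range(n)]
    txt_to_img = [[cosine(u[i], v[k]) for k in range(n)] for i in range(n)]
    l_vu = info_nce_rows(img_to_txt, tau)  # image i vs. all texts in the batch
    l_uv = info_nce_rows(txt_to_img, tau)  # text i vs. all images in the batch
    return sum(lam * a + (1.0 - lam) * b for a, b in zip(l_vu, l_uv)) / n


# Usage: two perfectly aligned pairs give a near-zero loss, swapped pairs a large one.
eye = [[1.0, 0.0], [0.0, 1.0]]
print(convirt_style_loss(eye, eye))                       # about 4.5e-05
print(convirt_style_loss(eye, [[0.0, 1.0], [1.0, 0.0]]))  # roughly 10
```

With τ = 0.1 a cosine gap of 1 between the positive and a negative becomes a logit gap of 10, which is why the aligned case is already close to zero.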

Specifically, for T we apply random cropping with a ratio sampled from [0.6, 1.0]; horizontal flipping with p = 0.5; an affine transformation with a rotation angle sampled from [−20, 20] degrees, maximum horizontal and vertical translation fractions of 0.1, and a scaling factor sampled from [0.95, 1.05]; color jittering with brightness and contrast adjustment ratios sampled from [0.6, 1.4]; and Gaussian blur with σ ∈ [0.1, 3.0]. All images are resized to 224×224 after the transformation t_v is applied. Because of limited computational resources, we chose these image transformation parameters through preliminary experiments rather than a systematic search.
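The augmentation family T can be summarized as a parameter sampler. This is a sketch under stated assumptions: each parameter is drawn uniformly from the quoted range, every transformation except the flip is applied to every view, and the crop "ratio" is kept as an abstract number rather than tied to a specific cropping API. In torchvision these settings would plausibly map onto `RandomResizedCrop`, `RandomHorizontalFlip`, `RandomAffine`, `ColorJitter` and `GaussianBlur`, but the exact arguments used are not stated here; `AugParams` and `sample_aug_params` are hypothetical names.

```python
import random
from dataclasses import dataclass

OUTPUT_SIZE = (224, 224)  # every view is resized to 224x224 after t_v is applied


@dataclass
class AugParams:
    crop_ratio: float   # sampled from [0.6, 1.0]
    hflip: bool         # True with probability 0.5
    angle_deg: float    # rotation angle sampled from [-20, 20] degrees
    translate_x: float  # horizontal shift as a fraction of width, |.| <= 0.1
    translate_y: float  # vertical shift as a fraction of height, |.| <= 0.1
    scale: float        # sampled from [0.95, 1.05]
    brightness: float   # sampled from [0.6, 1.4]
    contrast: float     # sampled from [0.6, 1.4]
    blur_sigma: float   # Gaussian blur sigma sampled from [0.1, 3.0]


def sample_aug_params(rng: random.Random) -> AugParams:
    """Draw one set of parameters for t_v ~ T (uniform sampling is an assumption)."""
    return AugParams(
        crop_ratio=rng.uniform(0.6, 1.0),
        hflip=rng.random() < 0.5,
        angle_deg=rng.uniform(-20.0, 20.0),
        translate_x=rng.uniform(-0.1, 0.1),
        translate_y=rng.uniform(-0.1, 0.1),
        scale=rng.uniform(0.95, 1.05),
        brightness=rng.uniform(0.6, 1.4),
        contrast=rng.uniform(0.6, 1.4),
        blur_sigma=rng.uniform(0.1, 3.0),
    )


# Usage: a seeded generator makes a given view's augmentation reproducible.
print(sample_aug_params(random.Random(42)))
```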

Pretraining Details. At pretraining time, for each dataset, we randomly sample 5k image-text pairs to form a held-out validation set. We use the Adam optimizer (Kingma and Ba, 2015) with an initial learning rate of 1e-4 and weight decay of 1e-6. We initialize the image encoder with ImageNet pretrained weights at the beginning of pretraining, and use a fixed batch size of 32. We calculate the validation loss every 5000 steps, and if the validation loss does not decrease for 5 consecutive evaluation runs, we anneal the learning rate by a factor of 0.5. We stop pretraining after 200 evaluation runs and save the model checkpoint that achieves the lowest validation loss. For efficiency, we employ mixed-precision training; for reference, the full pretraining run on the MIMIC-CXR dataset took about 3 days on a single Titan RTX GPU.
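The learning-rate annealing and checkpoint-selection rule can be sketched as a small plateau tracker. The class `PlateauAnnealer` is hypothetical, and reading "does not decrease for 5 consecutive evaluation runs" as "halve the learning rate once 5 evaluations in a row fail to beat the best loss so far, then reset the counter" is an assumption.

```python
class PlateauAnnealer:
    """Halve the learning rate when the validation loss has not improved for
    `patience` consecutive evaluations, and remember the best evaluation."""

    def __init__(self, lr: float = 1e-4, factor: float = 0.5,
                 patience: int = 5, max_evals: int = 200):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.max_evals = max_evals
        self.best_loss = float("inf")
        self.best_eval = -1   # index of the evaluation with the lowest loss
        self.bad_evals = 0    # consecutive evaluations without improvement
        self.num_evals = 0

    def step(self, val_loss: float) -> bool:
        """Record one evaluation (run every 5000 training steps in the text).
        Returns True while pretraining should continue."""
        self.num_evals += 1
        if val_loss < self.best_loss:
            self.best_loss = val_loss
            self.best_eval = self.num_evals - 1
            self.bad_evals = 0  # a real loop would save the checkpoint here
        else:
            self.bad_evals += 1
            if self.bad_evals >= self.patience:
                self.lr *= self.factor
                self.bad_evals = 0
        return self.num_evals < self.max_evals


annealer = PlateauAnnealer()
for loss in [1.0, 0.9, 0.95, 0.96, 0.97, 0.98, 0.99, 0.85]:
    annealer.step(loss)
print(annealer.lr, annealer.best_eval, annealer.best_loss)  # 5e-05 7 0.85
```

PyTorch's built-in `torch.optim.lr_scheduler.ReduceLROnPlateau` implements a similar policy, but its `patience` and `threshold` semantics differ slightly, so its settings would need to be checked against the rule described above.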

2. https://physionet.org/content/mimic-cxr-jpg/2.0.0/

3. https://github.com/pytorch/vision

Appendix B. Image Classification Experiments

We prepared and used the 4 image classification datasets following the procedures below:

1. RSNA Pneumonia Detection (Wang et al., 2017; Shih et al., 2019): we used the original version of this dataset available at its Kaggle page,⁴ which contains 25184/1500/3000 annotated images in its training/validation/test sets, respectively.

2. CheXpert image classification (Irvin et al., 2019): we downloaded the original version of this dataset from its official website.⁵ Since the original expert-labeled test set of this dataset is hidden and not included in the release, we instead followed Raghu et al. (2019), used the original expert-labeled validation set as our test set, and randomly sampled 5000 images from the original training set for validation. The resulting dataset contains 218414/5000/234 images in its training/validation/test splits.

3. COVIDx image classification (Wang and Wong, 2020): we prepared this dataset following the scripts provided by its authors.⁶ We used version 4 of this dataset, the latest version at the time of this work. We additionally randomly sampled 300 images from the training set for validation, resulting in a dataset with 13598/300/300 images in its training/validation/test splits.

4. MURA bony abnormality detection (Rajpurkar et al., 2018a): we downloaded the original version of this dataset from its website.⁷ As with CheXpert, we used the original validation set as our test set and randomly sampled 10% of the images from the training set for validation, resulting in a dataset with 33078/3730/3197 images in its training/validation/test splits. Unlike the other 3 datasets, MURA uses patient-level evaluation: the predictions for different images of the same patient need to be aggregated into a final prediction for that patient, which is then scored against the gold patient label. We therefore followed Rajpurkar et al. (2018a) and, at test time, aggregated the results for each patient by averaging the predicted probabilities over that patient's images.
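Patient-level evaluation only changes how per-image outputs are combined before scoring. A minimal sketch of the averaging step follows, assuming predictions arrive as (patient ID, probability) pairs; the helper name `aggregate_by_patient`, the patient IDs, and the 0.5 decision threshold in the usage lines are illustrative assumptions, not details from the text.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def aggregate_by_patient(predictions: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Average per-image abnormality probabilities into one score per patient.

    `predictions` yields (patient_id, probability) pairs, one per image."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for patient_id, prob in predictions:
        totals[patient_id] += prob
        counts[patient_id] += 1
    return {pid: totals[pid] / counts[pid] for pid in totals}


image_preds = [("p1", 0.9), ("p1", 0.5), ("p2", 0.2), ("p2", 0.1), ("p2", 0.3)]
patient_scores = aggregate_by_patient(image_preds)
patient_labels = {pid: int(score >= 0.5) for pid, score in patient_scores.items()}
print(patient_labels)  # {'p1': 1, 'p2': 0}
```

The averaged probabilities, rather than the thresholded labels, are what a threshold-free metric such as AUC would be computed from.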

Classification Model Training Details. For all models that require ImageNet pretrained initialization, we use the pretrained weights from torchvision, which achieve an ImageNet top-5 error rate of 7.13%. For all datasets, we first zero-pad the input image to make it square and then resize it to 224×224. For training, we use the Adam optimizer with an initial learning rate of 1e-3 for the COVIDx task and 1e-4 for the other three tasks. We additionally apply a weight decay of 1e-6 and dropout with p = 0.2 before the last classification layer in all tasks. All classification models are trained with a batch size of 64. In the fine-tuning evaluation setting, we first “warm up” the classification head by freezing the CNN weights and training only the classification head with a learning rate of 1e-3 for 200 steps, after which we unfreeze the CNN weights and fine-tune the entire network together. The validation score is computed after each epoch of training, and we anneal the learning rate

4. https://www.kaggle.com/c/rsna-pneumonia-detection-challenge

5. https://stanfordmlgroup.github.io/competitions/chexpert/

6. https://github.com/lindawangg/COVID-Net

7. https://stanfordmlgroup.github.io/competitions/mura/
