ROBBIE LOVE

The Spoken British National Corpus 2014

Design, compilation and analysis

ESRC Centre for Corpus Approaches to Social Science

Department of Linguistics and English Language

Lancaster University

A thesis submitted to Lancaster University

for the degree of Doctor of Philosophy in Linguistics

September 2017

i

Contents

Contents ............................................................................................................. i

Abstract ............................................................................................................. v

Acknowledgements ......................................................................................... vi

List of tables .................................................................................................. viii

List of figures .................................................................................................... x

1 Introduction ............................................................................................... 1

1.1 Overview .................................................................................................................................... 1

1.2 Research aims & structure ....................................................................................................... 3

2 Literature review ........................................................................................ 6

2.1 Introduction ............................................................................................................................... 6

2.2 The Spoken British National Corpus 1994 & other spoken corpora ............................... 7

2.2.1 The Spoken BNC1994 ...................................................................................................... 7

2.2.2 Other British English corpora containing spoken data ................................................ 9

2.2.3 Summary and justification for the Spoken BNC2014 ................................................ 11

2.3 Review of research based on spoken English corpora ...................................................... 12

2.3.1 Introduction ...................................................................................................................... 12

2.3.2 Corpus linguistics journals .............................................................................................. 13

2.3.3 Grammars ......................................................................................................................... 18

2.4 Summary .................................................................................................................................. 20

3 Corpus design .......................................................................................... 22

3.1 Introduction ............................................................................................................................. 22

3.2 Design & speaker recruitment .............................................................................................. 22

3.2.1 ‘Demographic’ corpus data ............................................................................................. 22

3.2.2 Design of the Spoken BNC1994DS ............................................................................. 23

3.2.3 Speaker recruitment in the Spoken BNC1994 ............................................................. 27

ii

3.2.4 Design & recruitment in other spoken corpora .......................................................... 29

3.2.5 General approach to design in the Spoken BNC2014 ............................................... 31

3.2.6 Speaker recruitment in the Spoken BNC2014 ............................................................. 33

3.3 Speaker and text metadata ..................................................................................................... 37

3.3.1 Introduction ...................................................................................................................... 37

3.3.2 Metadata in the Spoken BNC1994 ................................................................................ 38

3.3.3 Metadata collection in the Spoken BNC2014: procedure and ethics ....................... 41

3.3.4 Speaker & text metadata collection procedures in the Spoken BNC2014 .............. 46

3.3.5 Age, linguistic region & socio-economic status: discussion ...................................... 48

3.4 Collection of audio data ......................................................................................................... 70

3.4.1 Introduction ...................................................................................................................... 70

3.4.2 Audio recording in the Spoken BNC1994 ................................................................... 70

3.4.3 Audio recording in the Spoken BNC2014 ................................................................... 70

3.5 Chapter summary .................................................................................................................... 76

4 Transcription ........................................................................................... 77

4.1 Introduction ............................................................................................................................. 77

4.2 Transcription: human vs. machine ....................................................................................... 77

4.3 Approach to human transcription ........................................................................................ 83

4.4 The Spoken BNC1994 transcription scheme ..................................................................... 87

4.5 The Spoken BNC2014 transcription scheme: main features ........................................... 88

4.6 The Spoken BNC2014 transcription process ..................................................................... 98

4.7 Chapter summary .................................................................................................................. 100

5 Speaker identification ............................................................................. 102

5.1 Introduction ........................................................................................................................... 102

5.2 Speaker identification ........................................................................................................... 102

5.3 Pilot study .............................................................................................................................. 106

5.3.1 Pilot study (A): Certainty (pilot study recordings) .................................................... 106

iii

5.3.2 Pilot study (B): Certainty (Spoken BNC2014 recording) ......................................... 107

5.3.3 Pilot study (C): Inter-rater agreement (Spoken BNC2014 recording) ................... 107

5.4 Research Questions .............................................................................................................. 108

5.5 Methodological approach .................................................................................................... 109

5.5.1 Introduction .................................................................................................................... 109

5.5.2 Main study (A): a Spoken BNC2014 recording ......................................................... 110

5.5.3 Main study (B): the gold standard recording.............................................................. 111

5.6 Results .................................................................................................................................... 113

5.6.1 Main study (A1): Certainty in a Spoken BNC2014 recording ................................. 113

5.6.2 Main study (A2): Inter-rater agreement in a Spoken BNC2014 recording............ 114

5.6.3 Main study (B1): Certainty in the gold standard recording ...................................... 115

5.6.4 Main study (B2): Inter-rater agreement in the gold standard recording ................ 116

5.6.5 Main study (B3): Accuracy in the gold standard recording ...................................... 118

5.7 Discussion: what does this mean for the Spoken BNC2014? ........................................ 119

5.7.1 Individual transcriber variation .................................................................................... 120

5.7.2 Automated speaker identification ................................................................................ 120

5.7.3 The use of phonetic expertise ...................................................................................... 122

5.8 Affected texts and solutions implemented ........................................................................ 123

5.9 Chapter summary .................................................................................................................. 125

6 Corpus processing and dissemination ................................................... 128

6.1 Introduction ........................................................................................................................... 128

6.2 XML conversion ................................................................................................................... 128

6.3 Annotation ............................................................................................................................. 133

6.4 Corpus dissemination ........................................................................................................... 136

6.5 Chapter summary .................................................................................................................. 141

7 Analysing the Spoken BNC2014 ............................................................. 142

7.1 Introduction ........................................................................................................................... 142

iv

7.2 Swearing in linguistics .......................................................................................................... 143

7.2.1 Swearing as an object of study ..................................................................................... 143

7.2.2 Defining swearing .......................................................................................................... 144

7.2.3 McEnery’s approach to bad language ......................................................................... 146

7.3 Method ................................................................................................................................... 148

7.3.1 Methodological procedure ............................................................................................ 148

7.3.2 Data .................................................................................................................................. 151

7.4 Results .................................................................................................................................... 154

7.4.1 Frequency comparison .................................................................................................. 154

7.4.2 Strength ........................................................................................................................... 155

7.4.3 Change and stability in frequency ................................................................................ 161

7.4.4 High-frequency BLWs: sociolinguistic distribution .................................................. 165

7.4.5 Sociolinguistic distribution ........................................................................................... 166

7.4.6 Case study: linguistic annotation of FUCK ............................................................... 173

7.5 Chapter summary .................................................................................................................. 178

8 Conclusion .............................................................................................. 181

8.1 Overview of the thesis ......................................................................................................... 181

8.2 Successes, limitations & recommended future work ....................................................... 185

8.3 Summary ................................................................................................................................ 188

References ..................................................................................................... 189

List of Appendices ........................................................................................ 207

Appendices ................................................................................................... 208

v

Abstract

The ESRC-funded Centre for Corpus Approaches to Social Science at Lancaster

University (CASS) and the English Language Teaching group at Cambridge University Press

(CUP) have compiled a new, publicly-accessible corpus of spoken British English from the

2010s, known as the Spoken British National Corpus 2014 (Spoken BNC2014). The 11.5

million-word corpus, gathered solely in informal contexts, is the first freely-accessible corpus of

its kind since the spoken component of the original British National Corpus (the Spoken

BNC1994), which, despite its age, is still used as a proxy for present-day English in research

today.

This thesis presents a detailed account of each stage of the Spoken BNC2014’s

construction, including its conception, design, transcription, processing and dissemination. It

also demonstrates the research potential of the corpus, by presenting a diachronic analysis of

‘bad language’ in spoken British English, comparing the 1990s to the 2010s. The thesis shows

how the research team struck a delicate balance between backwards compatibility with the

Spoken BNC1994 and optimal practice in the context of compiling a new corpus. Although

comparable with its predecessor, the Spoken BNC2014 is shown to represent innovation in

approaches to the compilation of spoken corpora.

This thesis makes several useful contributions to the linguistic research community. The

Spoken BNC2014 itself should be of use to many researchers, educators and students in the

corpus linguistics and English language communities and beyond. In addition, the thesis

represents an example of good practice with regards to academic collaboration with a

commercial stakeholder. Thirdly, although not a ‘user guide’, the methodological discussions and

analysis presented in this thesis are intended to help the Spoken BNC2014 to be as useful to as

many people, and for as many purposes, as possible.

vi

Acknowledgements

This work would not have been possible without the help, support and generosity of

many people around me. First and foremost, I have been very lucky to have had as my

supervisor Tony McEnery. He pushed me to break through the many mental barriers I inflicted

upon myself, and I will take with me many of the wisdoms he has imparted on me. I must also

single out Andrew Hardie, who in many ways was my unofficial second supervisor. I am grateful

for all the support and guidance he has given me throughout my studies. Together, it was Tony

and Andrew who recruited me to work on this project, and I am extremely grateful for this and

all other opportunities they have afforded me.

I am also very grateful to the rest of the Spoken BNC2014 research team. Claire Dembry

at Cambridge University Press has been a brilliant colleague and friend, providing inspiration and

support throughout the project. Her team at CUP, including Olivia Goodman, Imogen Dickens,

Sarah Grieves and Laura Grimes, did much of the front-line work on the project and I am truly

grateful for their cooperation and expertise. Vaclav Brezina at Lancaster University has also

helped me greatly over the last four years as a collaborator on this and several other projects.

I have many reasons to thank both the ESRC-funded Centre for Corpus Approaches to

Social Science (CASS) and the Department of Linguistics and English Language (LAEL) at

Lancaster University. Many at CASS have had a hand in my professional and personal

development during the course of this work, including: Jennifer Hughes, Amelia Joulain-Jay,

Amanda Potts, Mark McGlashan, Ruth Avon, Sheryl Prentice and Paul Baker. I am grateful to

many of the academic staff at LAEL, including: Jonathan Culpeper, who as my confirmation

panellist provided very useful feedback; Sam Kirkham, who provided insights from phonetics so

readily; and the department’s administrative staff, who provided excellent support. My thanks

also extend to Matty Robinson and Gavin Brookes, who proofread much of this thesis.

As I approached the final months of writing up this thesis, I moved to Cambridge to

start working at Cambridge Assessment English. I am grateful to colleagues there who supported

me while I balanced my new role with my remaining PhD work.

One of my favourite hobbies is performing at the theatre. I am grateful to the members

of several theatre groups – Lancaster University Theatre Group, Lancaster Amateur Dramatic

and Operatic Society, About Theatre, the Morecambe Warblers and, most recent, Cambridge

Operatic Society – who welcomed me and allowed me to blow off steam in some glitzy musical.

vii

I am also indebted to friends who have shown an interest in my work, argued with me

about language, and most importantly motivated me to keep going – thank you most of all to

Matthew Bosley, Gavin Brookes, Mathew Gillings and Niall Curry.

Finally, I must thank my parents – Janet and Geoff – for providing love and support

during difficult periods of this work, and always being there to listen. I am also grateful to the

rest of my family for their enduring enthusiasm, interest and pride in my work. This thesis is

dedicated to my family, with love.

Robbie Love

Cambridge, UK

September 2017

viii

List of tables

Table 1. Proportion of selected articles relative to the total number of articles published by each journal between 1994 and 2016. .................................. 14

Table 2. Proportions of words in Spoken BNC1994 assigned across each of the three selection criteria (adapted from Burnard 2000: 13). .................... 25

Table 3. Approach to corpus design taken by the compilers of a variety of spoken corpora. .................................................................................................... 30

Table 4. Number of words categorised as ‘unknown’ or ‘info missing’ for the three main demographic categories in the Spoken BNC1994DS and the

Spoken BNC2014. .................................................................................................................................................................................................................................. 43

Table 5. National Readership Survey Social Grade classifications (NRS 2014). ........................................................................................................................... 63

Table 6. Social Class based on Occupation (SC) (Stuchbury 2013b). ............................................................................................................................................. 64

Table 7. Socio-economic group (SEG) (Stuchbury 2013a). ............................................................................................................................................................. 65

Table 8. The nine major analytic classes of the NS-SEC (ONS 2010c). ........................................................................................................................................ 66

Table 9. Mapping between the NS-SEC and Social Grade assumed for Spoken BNC2014 speaker metadata. ...................................................................... 69

Table 10. List of permissible filled pauses in the Spoken BNC2014 transcription scheme. ....................................................................................................... 95

Table 11. Speaker metadata for the gold standard recording. ........................................................................................................................................................ 112

Table 12. Distribution of code types in the Spoken BNC2014 transcripts. ................................................................................................................................. 114

Table 13. Total distribution of code types for the eight Spoken BNC2014 transcripts. ............................................................................................................ 115

Table 14. Distribution of code types in the gold standard transcripts. ......................................................................................................................................... 116

Table 15. Total distribution of code types for the eight gold standard test transcripts. ............................................................................................................. 117

Table 16. Categories of speaker ID code for which agreement between the gold standard and test transcripts could occur. ............................................ 118

Table 17. Inter-rater agreement (i.e. accuracy) of speaker identification between the test transcripts and the gold standard transcript. .......................... 119

Table 18. Accuracy of the phonetician’s transcript compared to the gold standard test transcript extracts. .......................................................................... 123

Table 19. Frequency of corpus texts per number of speakers per recording in the Spoken BNC2014. ................................................................................. 124

ix

Table 20. LCA annotation scheme (McEnery 2005: 27); modifications are italicised. ............................................................................................................... 147

Table 21. Categories of insult in the LCA annotation scheme (McEnery 2005: 27). ................................................................................................................. 147

Table 22. Token counts for the groups within demographic categories gender, age and socio-economic status in the Spoken BNC1994DS and Spoken

BNC2014S. ............................................................................................................................................................................................................................................ 152

Table 23. A scale of offence (McEnery 2005: 30). ........................................................................................................................................................................... 156

Table 24. Scale of offence for bad language (Ofcom 2016: 3). ...................................................................................................................................................... 157

Table 25. BLWs in the Ofcom (2016) report, which are given unambiguous ratings in the scale of offence. ....................................................................... 157

Table 26. BLWs which have decreased in use significantly (p<0.0001) between the 1990s and 2010s, ranked by effect size. ........................................... 163

Table 27. BLWs which have increased in use significantly (p<0.0001) between the 1990s and 2010s, ranked by effect size. ............................................ 164

Table 28. BLWs which have not changed in frequency significantly (p>0.05) between the 1990s and 2010s, and which have a minimum raw frequency

of 100 in both corpora, ranked alphabetically. ................................................................................................................................................................................. 165

Table 29. Annotation of FUCK in the Spoken BNC1994DS and the Spoken BNC2014S, using the bad language categorization scheme. ................... 175

Table 30. Information about the recordings used in the speaker identification investigations. ................................................................................................ 258

x

List of figures

Figure 1. Comparison of gender distribution between the Spoken BNC1994 speakers (Burnard 2000: 13) and the UK (England, Wales and Scotland)

population of “present residents: in households” in 1991 (NOMIS wizard query)...................................................................................................................... 26

Figure 2. Comparison of age distribution between the Spoken BNC1994DS speakers (Burnard 2000: 13) and the UK (England and Wales only)

population in 1991 (NOMIS wizard query). ....................................................................................................................................................................................... 27

Figure 3. The Spoken BNC1994DS’s geographic regions (reproduced from Crowdy 1993: 260). ............................................................................................ 28

Figure 4. Example of an online news article reporting on early findings from the Spoken BNC2014. .................................................................................... 34

Figure 5. The relationship between researcher, contributor and speaker when compiling a spoken corpus (double-headed arrows indicate contact

between the linked parties; dotted lines indicate that n is a theoretically unknown number that is only known in practice). ............................................... 36

Figure 6. Example of conversation log given to contributors in the Spoken BNC1994DS (reproduced from Crowdy 1993: 260). ................................... 40

Figure 7. The Speaker Information Sheet/consent form used in the Spoken BNC2014. ........................................................................................................... 42

Figure 8. Recording Information Sheet used in the Spoken BNC2014. ........................................................................................................................................ 45

Figure 9. Dialect categories used in the Spoken BNC1994DS. ....................................................................................................................................................... 56

Figure 10. Birthplace and dialect categories used in the Spoken BNC2014. ................................................................................................................................. 61

Figure 11. Guidelines for Data Collection document which was provided to Spoken BNC2014 contributors. ..................................................................... 75

Figure 12. Concordance plot of ‘[quot=’ tags in the pilot corpus (displayed in AntConc, Anthony 2014). ............................................................................. 98

Figure 13. Proportion of indeterminable speaker identification in the pilot study corpus according to the number of speakers per recording. ............. 107

Figure 14. Transcript excerpt, pre- and post-XML conversion. .................................................................................................................................................... 130

Figure 15. Vocalisation checking functions in the XML conversion PHP script. ...................................................................................................................... 131

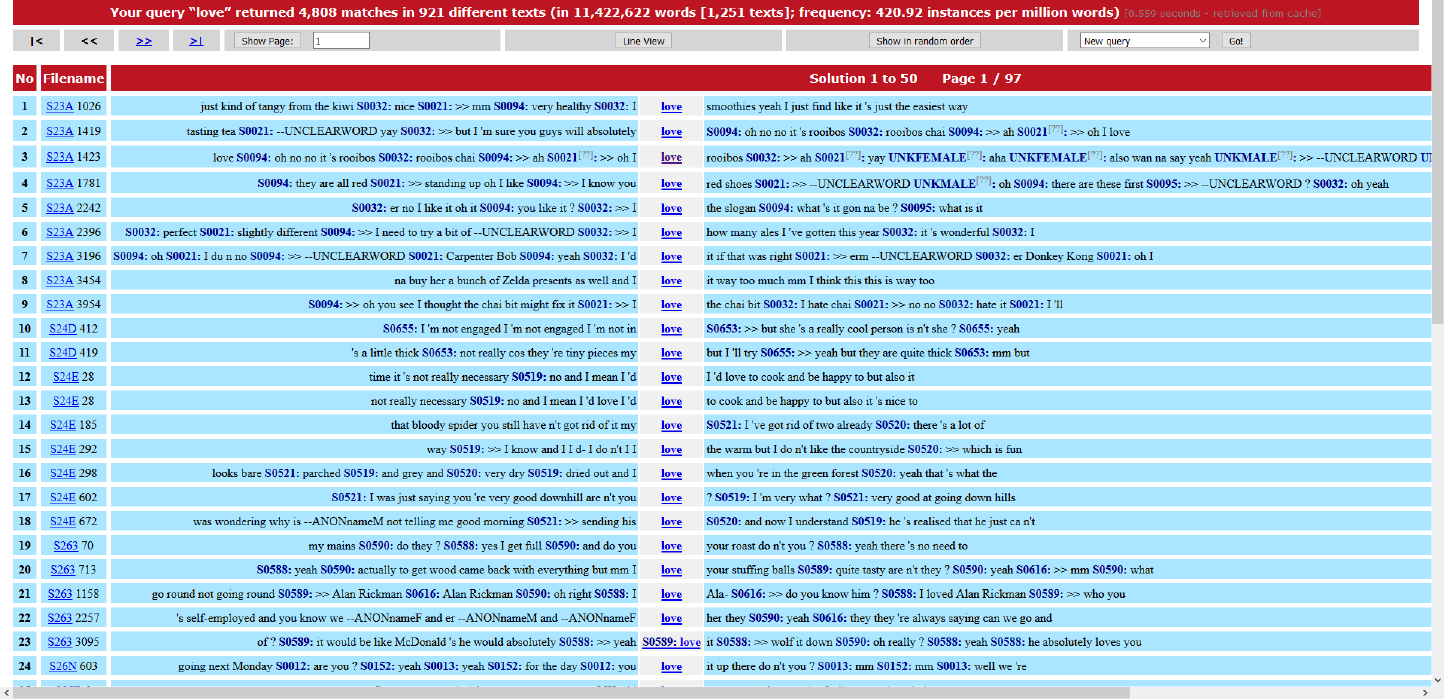

Figure 16. Standard query menu for the Spoken BNC2014 in CQPweb (Hardie 2012). .......................................................................................................... 137

Figure 17. Concordance lines for the simple query ‘love’ in the Spoken BNC2014. ................................................................................................................. 138

xi

Figure 18. Relative frequency comparison of BLWs categorised according to the Ofcom (2016) scale of offence. ............................................................ 158

Figure 19. Gender and socio-economic status. ................................................................................................................................................................................ 160

Figure 20. Gender and age. .................................................................................................................................................................................................................. 161

Figure 21. Age and socio-economic status. ....................................................................................................................................................................................... 161

Figure 22. Distribution of relative frequencies for the 12 BLWs by gender in the Spoken BNC1994DS and Spoken BNC2014S. .................................. 167

Figure 23. Distribution of relative frequencies for the 12 BLWs by age in the Spoken BNC1994DS and Spoken BNC2014S. ........................................ 169

Figure 24. Distribution of relative frequencies for the 12 BLWs by socio-economic status in the Spoken BNC1994DS and Spoken BNC2014S. ....... 171

Figure 25. Distribution of the 12 BLWs in the Spoken BNC2014S according to NS-SEC. ..................................................................................................... 172

Figure 26. Separating the turns from their corresponding speaker ID codes (invented data). ................................................................................................. 251

Figure 27. Viewing each transcript of the same recording alongside each other (invented data). ............................................................................................ 251

Figure 28. The speaker ID codes from each transcript after their corresponding turns have been removed (invented data). ............................................ 252

Figure 29. Unaligned extract from original transcript and test transcript #1. ............................................................................................................................. 253

Figure 30. Aligned extract from original transcript and test transcript #1. ................................................................................................................................. 253

Figure 31. Unaligned extract from original transcript and test transcript #8. ............................................................................................................................. 254

Figure 32. Aligned extract from original transcript and test transcript #8. ................................................................................................................................. 254

Figure 33. Unaligned extract from original transcript and test transcript #1. ............................................................................................................................. 254

Figure 34. Aligned extract from original transcript and test transcript #1. ................................................................................................................................. 255

Figure 35. Aligned extract from original transcript and test transcript #6. ................................................................................................................................. 256

Figure 36. Unaligned extract from original transcript and test transcript #6. ............................................................................................................................. 256

Figure 37. Aligned extract from original transcript and test transcript #6. ................................................................................................................................. 257

1

1 Introduction

1.1 Overview

The ESRC-funded Centre for Corpus Approaches to Social Science (CASS)

1

at Lancaster

University and the English Language Teaching group at Cambridge University Press (CUP) have

compiled a new, publicly-accessible corpus of present-day spoken British English, gathered in

informal contexts, known as the Spoken British National Corpus 2014 (Spoken BNC2014). This

is the first publicly-accessible corpus of its kind since the spoken component of the original

British National Corpus, which was completed in 1994, and which, despite its age, is still used as

a proxy for present-day English in research today (e.g. Hadikin 2014; Rühlemann & Gries 2015).

The new corpus contains data gathered in the years 2012 to 2016. As of September 2017 it is

available publicly via Lancaster University’s CQPweb server (Hardie 2012), with the underlying

XML files downloadable from late 2018. It will subsequently form the spoken component of the

larger British National Corpus 2014, the written component of which is also under development.

The Spoken BNC2014 contains 11,422,617 million words of transcribed content, featuring 668

speakers in 1,251 recordings.

The BNC2014’s predecessor, the British National Corpus (henceforth BNC1994), is one

of the most widely known and used corpora. No orthographically transcribed spoken corpus

compiled since the release of 10-million-word spoken component of the BNC (henceforth

Spoken BNC1994) has matched it in either its size or availability. Unsurprisingly, the corpus

linguistics community has, for some time, used the Spoken BNC1994 as a proxy for ‘present-

day’ spoken British English. That this ‘go-to’ dataset is over twenty years old at the time of

writing is a problem for current and future research that needed to be addressed with increasing

urgency.

The collaboration between CASS and CUP to build the Spoken BNC2014 came about

after some years of both centres working individually on the idea of addressing this situation by

compiling a new corpus of spoken British English which could, in some way, match up to the

Spoken BNC1994.

Claire Dembry at CUP had collected two million words of new spoken data

1

The research presented in this thesis was supported by the ESRC Centre for Corpus Approaches to Social Science,

ESRC grant reference ES/K002155/1.

2

for the Cambridge English Corpus

2

in 2012, trialling the public participation method which was

used, along with the data itself, in the Spoken BNC2014 (see Section 3.2.5, p. 31). Meanwhile,

Tony McEnery and Andrew Hardie at Lancaster had been planning to compile a new version of

the British National Corpus and, by 2013, had recruited (a) me, to start investigating

methodological issues in compiling spoken corpora, and (b) Vaclav Brezina, to bring insights to

the project based on his use of the Spoken BNC1994 to explore sociolinguistic research

questions. Early in 2014, both CASS and CUP agreed, upon learning of each other’s work, to

pool resources and work together to build the ‘Lancaster/Cambridge Corpus of Speech’ (LCCS)

which, within a few months and with the blessing of Martin Wynne at the University of Oxford,

was renamed the Spoken British National Corpus 2014 (Spoken BNC2014). The Spoken

BNC2014 will become the spoken subcorpus of the planned British National Corpus 2014 – the

written component is being compiled by Abi Hawtin with the support of CASS and CUP, and is

due for release in 2018.

The aim of this thesis is to present an account of the design, compilation and analysis of

the Spoken BNC2014, making clear the most important decisions the research team made as we

collected, transcribed and processed the data, as well as to demonstrate the research potential of

the corpus. The underlying theme of this thesis is the maximisation of the efficiency of spoken

corpus creation in view of practical constraints, with a focus on principles of design as well as

data and metadata collection, transcription and processing. As is not unusual in corpus

construction, compromises had to be made throughout the compilation of this corpus; these are

laid out transparently. Furthermore, this thesis describes the innovative aspects of the Spoken

BNC2014 project – notably including the use of PPSR (public participation in scientific research,

Shirk et al. 2012), the introduction of new speaker metadata categorisation schemes, and

consideration of the difficulty of speaker identification at the transcription stage – among others.

While the thesis does not function as a Spoken BNC2014 ‘user guide’,

3

it is a thorough account

of the careful decisions that were made at each stage of development, and should be read by

users of the corpus.

4

The compilation of the Spoken BNC2014 was, as stated, a collaborative research project

undertaken by CASS and CUP. It was a group effort, and, in addition to my own work, this

thesis accounts for decisions made and work completed in collaboration with a team of

researchers of which I was a member. Other members of the main research team – those who,

2

http://www.cambridge.org/us/cambridgeenglish/better-learning/deeper-insights/linguistics-

pedagogy/cambridge-english-corpus (last accessed September 2017).

3

See Love et al. (2017b) for the BNC2014 user manual and reference guide.

4

Several of the major themes of the thesis are captured in the Spoken BNC2014 citation paper (Love et al. 2017a),

which serves as a summary of the project.

3

aside from myself, made decisions which shaped the course of the compilation process – were

Vaclav Brezina, Andrew Hardie and Tony McEnery (from Lancaster), and Claire Dembry and

Laura Grimes (from Cambridge). In this thesis, I use singular and plural pronouns systematically:

first person singular pronouns are used when discussing work which was conducted solely by

me, while first person plural pronouns and third person reference to “the Spoken BNC2014

research team” are used when reporting on decisions I made with the research team.

1.2 Research aims & structure

The aims of the Spoken BNC2014 project are:

(1) to compile a corpus of informal British English conversation from the 2010s which is

comparable to the Spoken BNC1994’s demographic component;

(2) to compile the corpus in a manner which reflects, as much as possible, the state of the art

with regards to methodological approach; and, in achieving steps (2) and (3);

(3) to provide a fresh data source for a new series of wide-ranging studies in linguistics and

the social sciences.

The structure of this thesis is perhaps different to most, as its focus is expressly methodological

– it comprises a series of methodological explorations, followed by one chapter which contains

linguistic analysis. Because of this, the standard approach to a thesis, where one reviews all

relevant literature at the beginning and subsequently outlines a methodological approach that

accounts for the entire project, does not fit my purpose. Accordingly, I decided to adopt a

thematic approach, addressing each stage of the compilation of the corpus in the chronological

order in which they occurred. Following a general and over-arching Literature Review (Chapter

2), which reviews the use of the Spoken BNC1994 and other relevant corpora for linguistic

research, the thesis is divided into the following chapters:

• Chapter 3: Corpus design

This chapter covers general principles of spoken corpus design including recruitment, metadata

and audio data. A major theme of this chapter is the extent to which the Spoken BNC1994 and

other relevant corpora have been compiled using a principled as opposed to opportunistic

approach, and our decision to embrace opportunism (supplemented by targeted interventions) in

the compilation of the Spoken BNC2014.

4

• Chapter 4: Transcription

This chapter discusses the development of a bespoke transcription scheme for the Spoken

BNC2014. It justifies the rejection of automated transcription before describing how the Spoken

BNC2014 transcription scheme elaborates and improves upon that of its predecessor. It also

demonstrates the interactivity between stages of corpus compilation; the transcription scheme

was designed to be mapped automatically and unambiguously into XML at a later stage in the

construction of the corpus.

• Chapter 5: Speaker identification

This chapter reflects upon the transcription stage of the project, investigating the accuracy with

which transcribers were able to assign speaker ID codes to the utterances transcribed in the

Spoken BNC2014 – i.e. ‘speaker identification’. Its aim is to draw attention to the difficulty of

this task for recordings which contain several speakers, and to propose ways in which users can

avoid having potentially inaccurately assigned speaker ID codes affect their research.

• Chapter 6: Corpus processing and dissemination

This chapter discusses the final stages of the compilation of the Spoken BNC2014, describing

the conversion of transcripts into XML; the annotation of the corpus texts for part-of-speech,

lemma and semantic category; and the public dissemination of the corpus.

• Chapter 7: Analysing the Spoken BNC2014

This chapter aims to demonstrate the research potential of the Spoken BNC2014 by comparing

(a sample of) it to the Spoken BNC1994 in a study of bad language. This study aims to reveal

indications that the frequency, strength and social distribution of bad language may have

changed or remained stable between the 1990s and 2010s in spoken British English. Adopting

the approach to bad language proposed by McEnery (2005), I analyse a large set of bad language

words (BLWs) and demonstrate the comparability of the Spoken BNC2014 with its predecessor.

Finally, Chapter 8 (the conclusion) summarises the thesis and discusses the major successes and

limitations of my work on the project, before suggesting future work which could extend the

research capability of the corpus.

Before discussing how the Spoken BNC2014 was built, I will contextualise the use and

popularity of the original British National Corpus, and argue how no corpus since its spoken

5

component has matched the Spoken BNC1994 in terms of several key strengths which appear to

have made it as widely used as it has been. This is the aim of the next chapter.

6

2 Literature review

2.1 Introduction

This Literature Review aims to contextualise the situation which has arisen whereby the

collection of a second Spoken British National Corpus is necessary. It introduces the Spoken

British National Corpus 1994 and discusses its uses in the field of linguistics. It also presents the

case for compiling a second edition now. What it does not do is discuss existing corpora in terms

of design, data collection, transcription or any other feature of corpus construction which has

informed the methods for the compilation of the Spoken BNC2014 – relevant literature on these

topics will be introduced, where appropriate, in each of the methodological chapters which

follow the Literature Review.

Corpus linguistics is “a relatively new approach in linguistics that has to do with the

empirical study of ‘real life’ language use with the help of computers and electronic corpora”

(Lüdeling & Kytö 2008: v). A well-known problem afflicting corpus linguistics as a field is its

tendency to prioritise written forms of language over spoken forms, in consequence of the

drastically greater difficulty, high cost and slower speed of collecting spoken text:

A rough guess suggests that the cost of collecting and transcribing in electronic form one

million words of naturally occurring speech is at least 10 times higher than the cost of

adding another million words of newspaper text. (Burnard 2002: 6)

Contemporary online access to newspaper material means that this disparity is likely to be even

greater today than in 2002. The resulting bias in corpus linguistics towards a “very much written-

biased view” (Lüdeling & Kytö 2008: vi) of language is problematic if one takes the view that

speech is the primary medium of communication (Čermák 2009: 113), containing linguistic

variables that are important for the accurate description of language, and yet inaccessible through

the analysis of corpora composed solely of written texts (Adolphs & Carter 2013: 1). Projects

devoted to the compilation of spoken corpora are thus relatively few and far between (Adolphs

& Carter 2013: 1).

In the next section I introduce one of the few widely accessible spoken corpora of British

English, the spoken section of the British National Corpus (henceforth the Spoken BNC1994).

7

This is, to this day, heavily relied upon in spoken English corpus research. I show that this is due

to the scarcity and lack of accessibility of spoken English corpora that have been developed

since; the Spoken BNC1994 is still relied upon today as the best available corpus of its kind,

despite its age. This, as I will show in Section 2.2, is a problem for current and future research,

and, along with the other problems outlined, it is presented as the main justification for

producing a new spoken corpus – the Spoken BNC2014. I then consider the types of research

for which the Spoken BNC2014 will likely be used by summarising the most prominent areas of

spoken corpus research that have arisen over the last two decades (Section 2.3). I also show that

there are limitations in such previous research that provide evidence of the problems outlined

above, and that the publicly-accessible Spoken BNC2014 aims to considerably improve the

ability of researchers to study spoken British English. I conclude by outlining the main research

aims of the thesis (Section 2.4).

2.2 The Spoken British National Corpus 1994 & other spoken corpora

2.2.1 The Spoken BNC1994

The compilation of the Spoken BNC2014 is informed largely by the BNC1994’s spoken

component (see Crowdy 1993, 1994, 1995), which is “one of the biggest available corpora of

spoken British English” (Nesselhauf & Römer 2007: 297). The goal of the BNC1994’s creators

was “to make it possible to say something about language in general” (Nesselhauf & Römer

2007: 5). Thus its spoken component was designed to function as a representative sample of

spoken British English (Burnard 2007). It was created between 1991 and 1994, and was designed

in two parts: the demographically-sampled part (c. 40%) and the context-governed part (c. 60%)

(Aston & Burnard 1998).

The demographically-sampled part

5

– henceforth the Spoken BNC1994DS – contains

informal, “everyday spontaneous interactions” (Leech et al. 2001: 2). Its contributors (the

volunteers who made the recordings of their interactions with other speakers) were “selected by

age group, sex, social class and geographic region” (Aston & Burnard 1998: 31). 124 adult

contributors made recordings using portable tape recorders (Aston & Burnard 1998: 32), and in

most cases only the contributor was aware of the recording taking place. Contributors wore the

recorders at all times and were instructed to record all of their interactions within a period of

between two and seven days. The Spoken BNC1994DS also incorporates the Bergen Corpus of

London Teenage Language (COLT), a half-million-word sample of spontaneous conversations

among teenagers between the ages of 13-17, collected in a variety of boroughs and school

5

Also known as the ‘conversational part’ (Leech et al. 2001: 2).

8

districts in London in 1993 (Stenström et al. 2002). In the XML edition of the BNC1994 hosted

by Lancaster University’s CQPweb server (Hardie 2012), the Spoken BNC1994DS contains five

million words of transcribed conversation produced by 1,408 speakers and distributed across 153

texts.

The context-governed part

6

– henceforth the Spoken BNC1994CG – contains formal

encounters from institutional settings, which were “categorised by topic and type of interaction”

(Aston & Burnard 1998: 31). Unlike the Spoken BNC1994DS, the Spoken BNC1994CG’s text

types were “selected according to a priori linguistically motivated categories” (Burnard 2000: 14):

namely educational and informative, business, public or institutional and leisure (Burnard 2000: 15).

Because of the variety of text types and settings involved, the data collection procedure varied;

some conversations were recorded using the same procedure as the DS, while recordings of

some conversations (e.g. broadcast media) already existed. The Spoken BNC1994CG contains

seven million words produced by 3,986 speakers and distributed across 755 texts.

Despite certain weaknesses in design and metadata, which I discuss in Section 3.2.2 (p.

23), the Spoken BNC1994 has proven a highly productive resource for linguistic research over

the last two decades. It has been influential in the areas of grammar (e.g. Rühlemann 2006,

Gabrielatos 2011, Smith 2014), sociolinguistics (e.g. McEnery 2006, Saily 2006, Xiao & Tao

2007), conversation analysis (e.g. Rühlemann & Gries 2015), pragmatics (e.g. Wang 2005,

Cappelle et al. 2015, Hatice 2015), and language teaching (e.g. Alderson 2007, Flowerdew 2009),

among others, which are discussed in further detail in Section 2.3.2. Part of the reason for the

widespread use of the BNC1994 is that it is an open-access corpus; researchers from around the

world can access the corpus at zero cost, either by downloading the full text from the Oxford

Text Archive,

7

or using the online interfaces provided by various institutions including Brigham

Young University (BNC-BYU,

8

Davies 2004) and Lancaster University (BNCweb,

9

Hoffmann et

al. 2008). Yet it is undoubtedly the unique access that the Spoken BNC1994 has provided to

large-scale orthographic transcriptions of spontaneous speech that has been the key to its

success. Such resources are needed by linguists, but are expensive and time consuming to

produce and hence are rarely accessible as openly and easily as is the BNC1994.

6

Also known as the ‘task-oriented part’ (Leech et al. 2001: 2).

7

Accessible at: http://ota.ox.ac.uk/desc/2554 (last accessed September 2017).

8

Accessible at: http://corpus.byu.edu/bnc/ (last accessed September 2017).

9

Accessible at: http://bncweb.lancs.ac.uk/bncwebSignup (last accessed September 2017).

9

2.2.2 Other British English corpora containing spoken data

Other corpora of spoken British English exist which are similarly conversational and

non-specialized in terms of context. Although they have the potential to be just as influential as

the Spoken BNC1994DS, they are much harder to access for several reasons. Some have simply

not been made available to the public for commercial reasons. The Cambridge and Nottingham

Corpus of Discourse in English (CANCODE), for example, forms part of the Cambridge

English Corpus, which is a commercial resource belonging to Cambridge University Press and is

not accessible to the wider research community (Carter 1998: 55). Other corpora are available

only after payment of a license fee, which makes them generally less accessible. For instance,

Collins publishers’ WordBanks Online (Collins 2017) offers paid access to a 57-million-word

subcorpus of the Bank of English

10

(containing data from British English and American English

sources, 61 million words of which is spoken); the charges range, at the time of writing, from a

minimum of £695 up to £3,000 per year of access. Likewise, the British component of the

International Corpus of English (ICE-GB), containing one million words of written and spoken

data from the 1990s (Nelson et al. 2002: 3), costs over £400 for a single, non-student license.

11

Some other corpora are generally available, but sample a more narrowly defined regional

variety of English than ‘British English’. For instance, the Scottish Corpus of Texts and Speech

(SCOTS) (Douglas 2003), while free to use, contains only Scottish English and no other regional

varieties of English from the British Isles. Its spoken section, mostly collected after the year

2000, is over one million words in length and contains a mixture of what could be considered

both conversational and task-oriented data. It serves as an example of a corpus project that aimed to

produce “a publicly available resource, mounted on and searchable via the Internet” (Douglas

2003: 24); the SCOTS website

12

allows users immediate and free access to the corpus. It appears

to be the first project since the Spoken BNC1994 to encourage use of the data not only by

linguists but by researchers from other disciplines too:

It is envisaged that SCOTS will be a useful resource, not only for language researchers,

but also for those working in education, government, the creative arts, media, and

tourism, who have a more general interest in Scottish culture and identity. (Douglas

2003: 24)

10

https://www.collinsdictionary.com/wordbanks (last accessed September 2017).

11

http://www.ucl.ac.uk/english-usage/projects/ice-gb/iceorder2.htm (last accessed September 2017).

12

http://www.scottishcorpus.ac.uk/ (last accessed September 2017).

10

This suggests that some work has taken place since the compilation of the BNC1994 that has, at

least in part, been able to bridge the gap of open-access, spoken, British English corpus data

between the early 1990s and the present-day.

More recently, the British Broadcasting Corporation (BBC) undertook a language

compilation project that rivalled the Spoken BNC1994 both in size and range of speakers – the

BBC Voices project (Robinson 2012). BBC Voices is “the most significant popular survey of

regional English ever undertaken around the UK” (BBC Voices 2007), and was recorded in 2004

and 2005 by fifty BBC radio journalists in locations all over the UK. All together over 1,200

speakers produced a total of 283 recordings. The only public access to the data (hosted by the

British Library’s National Sound Archive)

13

is to the recordings themselves. Users can freely

listen to the BBC Voices recordings online, but no transcripts exist. Since this project seems

similar to the Spoken BNC1994, it could be said that the most convenient way of producing a

successor would simply be to transcribe the BBC Voices recordings and release them as a

corpus. However, there are several differences between the Spoken BNC1994 and the BBC

Voices project which make this solution inadequate. Firstly, the BBC Voices project is a dialect

survey and not a corpus project (Robinson 2012: 23); the aim of its compilers was to search for

varieties of British English that were influenced by the widest range of geographic backgrounds

as possible, including other countries (BBC Voices 2007). Furthermore, the aim of BBC Voices

was not only to record samples of the many regional dialects of spoken English, but also to

capture discourses about language itself. The radio journalists achieved this by gathering the

recordings via informal interviews with groups of friends or colleagues, and asking specific

questions (BBC Voices 2007). This, then, is not naturally occurring language in the same sense as

the Spoken BNC1994DS, and so these conversations are not comparable. Finally, even if a

corpus of BBC Voices transcripts did exist, it would already be a decade old.

In this section I have introduced several spoken corpora of British English which have

been compiled since the release of the Spoken BNC1994, and discussed restrictions with regards

to the accessibility, appropriateness or availability of the data. These restrictions appear to have

translated into a much lower level of research output using these datasets. As a crude proxy for

the academic impact of these corpora, I searched in Lancaster University’s online library system

for publications which mention them. At the time of writing, a search for CANCODE retrieves

54 publications; WordBanks Online only 45; ICE-GB 300; SCOTS 34; and BBC Voices 101. By

contrast searching for the BNC1994 identifies 3,000 publications. While an admittedly rough rule

of thumb, this quick search shows that even though conversational, non-specialized spoken

13

http://sounds.bl.uk/Accents-and-dialects/BBC-Voices (last accessed September 2017).

11

corpora that may be just as useful as the Spoken BNC1994DS have been compiled since 1994,

their limited availability, and/or the expense of accessing them, has meant that the Spoken

BNC1994 remains the most widely used spoken corpus of British English to date.

2.2.3 Summary and justification for the Spoken BNC2014

It is clearly problematic that research into spoken British English is still using a corpus

from the early 1990s to explore ‘present-day’ English. The reason why no spoken corpus since

the Spoken BNC1994 has equalled its utility for research seems to be that no other corpus has

matched all four of its key strengths:

i. orthographically transcribed data

ii. large size

iii. general coverage of spoken British English

iv. (low or no cost) public access

Each of the other projects mentioned above fails to fulfil one or more of these criteria. For

example, CANCODE is large and general in coverage of varieties of spoken British English, but

has no public access; while the SCOTS corpus is publicly-accessible, but contains only Scottish

English. The BBC Voices project, while general in coverage, is not transcribed. The Spoken

BNC2014 is the first corpus since the original that matches all four of the key criteria.

The point has been made that the age of the Spoken BNC1994 is a problem. The

problem of the Spoken BNC1994’s continued use would be lessened if it were not still treated as

a proxy for present-day English – i.e. if its use were mainly historical – but this is not the case.

For researchers interested in spoken British English who do not have access to privately held

spoken corpora this is unavoidable; the Spoken BNC1994 is still clearly the best publicly-

accessible resource for spoken British English for the reasons outlined. Yet, as time has passed,

the corpus has been used for purposes for which it is becoming increasingly less suitable. For

example, a recent study by Hadikin (2014), which investigates the behaviour of articles in spoken

Korean English, uses the Spoken BNC1994 as a reference corpus of present-day English.

Appropriately, Hadikin (2014: 7) gives the following warning:

With notably older recordings [than the Korean corpora he compiled] […] one has to be

cautious about any language structures that may have changed, or may be changing, in

the period since then.

12

In this respect, Hadikin’s (2014) work typifies a range of recent research which, in the absence of

a suitable alternative, uses the Spoken BNC1994 as a sample of present-day English. The dated

nature of the Spoken BNC1994 is demonstrated by the presence in the corpus of references to

public figures, technology, and television shows that were contemporary in the early 1990s:

(1) Oh alright then, so if John Major gets elected then I’ll still [unclear]

14

(BNC1994 KCF)

(2) Why not just put a video on?

15

(BNC1994 KBC)

(3) Did you see The Generation Game?

16

(BNC1994 KCT)

It is clear, then, that there is a need for a new corpus of conversational British English to allow

researchers to continue the kinds of research that the Spoken BNC1994 has fostered over the

past two decades. This new corpus will also make it possible to turn the ageing of the Spoken

BNC1994 into an advantage – if it can be compared to a comparable contemporary corpus, it

can become a useful resource for exploring recent change in spoken English. The Spoken

BNC2014 project enables scholars to realise these research opportunities as well as, importantly,

allowing gratis public access to the resulting corpus.

2.3 Review of research based on spoken English corpora

2.3.1 Introduction

In this section I discuss the types of linguistic research that will likely benefit from the

compilation of the Spoken BNC2014 by reviewing the most common trends in relevant

published research between 1994 – when the Spoken BNC1994 was first released – and 2016. In

section 2.3.2, I review research published in five of the most prominent journals in the field of

corpus linguistics – research that mainly required open-access spoken corpora, or at least spoken

corpora with affordable licences, in order to be completed. In section 2.3.3, I assess the role of

spoken corpora in the development of English grammars, a process in which the question of

availability to the public is irrelevant in some cases as the publisher allows the authors access to

spoken resources they possess. I conclude that there are limitations in the research, caused by the

14

John Major was Prime Minister of the United Kingdom between 1990 and 1997.

15

The VHS tape cassette, or ‘video’, was a popular medium for home video consumption in the 1980s and 1990s

before the introduction of the DVD in the late 1990s.

16

The Generation Game was a popular British television gameshow which was broadcast between 1971 and 2002.

13

problems outlined in the previous section, which could be addressed by the compilation of the

Spoken BNC2014.

2.3.2 Corpus linguistics journals

The aim of this section is to critically discuss a wide-ranging selection of published

research based on spoken corpora (of conversational British English and other language

varieties). The purpose of this is to discuss the extent to which:

(a) spoken corpora have been found to be crucial to the advancement of knowledge in a

range of areas of research;

(b) there are avenues of research that could be ‘updated’ by new spoken data; and,

therefore,

(c) there is reason to compile a new corpus of modern-day British English conversation.

In the case of the Spoken BNC1994, Leech (1993: 10) predicted that the corpus would be

particularly useful for “linguistic research, reference publishing, natural language processing by

computer, and language education”. This section aims to assess how this corpus, and other

corpora containing spoken data, has actually been put to use in published research. To do this I

searched the archives of five of the most popular journals which publish research in corpus

linguistics

17

(the dates in parentheses refer to the year in which the journals were established):

• International Computer Archive of Modern and Medieval English (ICAME) Journal (1979-)

• Digital Scholarship in the Humanities (DSH) Journal (formerly Literary and Linguistic

Computing) (1986-)

• International Journal of Corpus Linguistics (IJCL) (1996-)

• Corpus Linguistics and Linguistic Theory (CLLT) Journal (2005-)

• Corpora Journal (2006-)

For the ICAME and DSH journals, I considered only the volumes that were published from the

year 1994 and onwards; for the other three journals I searched all volumes. I chose 1994 as the

starting point because it is year that the BNC1994 was completed and began to be made publicly

17

By selecting only the output of five journals from the years 1994-2016, I am ignoring the many other outlets,

including monographs, edited collections and conference proceedings, that contain original spoken corpus research.

The purpose of this choice is simply to illustrate the ways in which existing spoken corpora have been used, and to

attempt to predict the types of research for which the Spoken BNC2014 may be used.

14

available for research (Burnard 2002: 10). Since the Spoken BNC1994 was “the first corpus of its

size to be made widely available” (Burnard 2002: 4), corpus research into spoken language prior

to the BNC1994’s publication used smaller bodies of data that were harder to access from

outside their host institution. Such research, therefore, is harder to verify and compare to more

recent spoken corpus studies, and so 1994 was the most appropriate place to start my search.

I retrieved papers that draw upon spoken corpora containing conversational data,

omitting those that drew upon data which would be considered exclusively task-oriented (in

Section 3.1, p. 22, I explain the rationale behind our choice to gather only conversational data for

the Spoken BNC2014). However, articles that draw upon corpora containing both types (e.g. the

Spoken BNC1994) were included. Likewise, I included research that used a mixture of spoken

and written corpora; the findings from the spoken data were considered relevant to my aim (I

found that many articles use corpora of written and spoken data together as a whole). The

following review is based upon the research of a total of 140 papers, published by the five

journals between the years 1994-2016. As shown in Table 1, this represents 14.23% of all articles

(excluding editorials and book reviews) published by these journals in this period, and the

highest relative frequency of articles selected for this review was generated by Corpus Linguistics

and Linguistic Theory (20.93%).

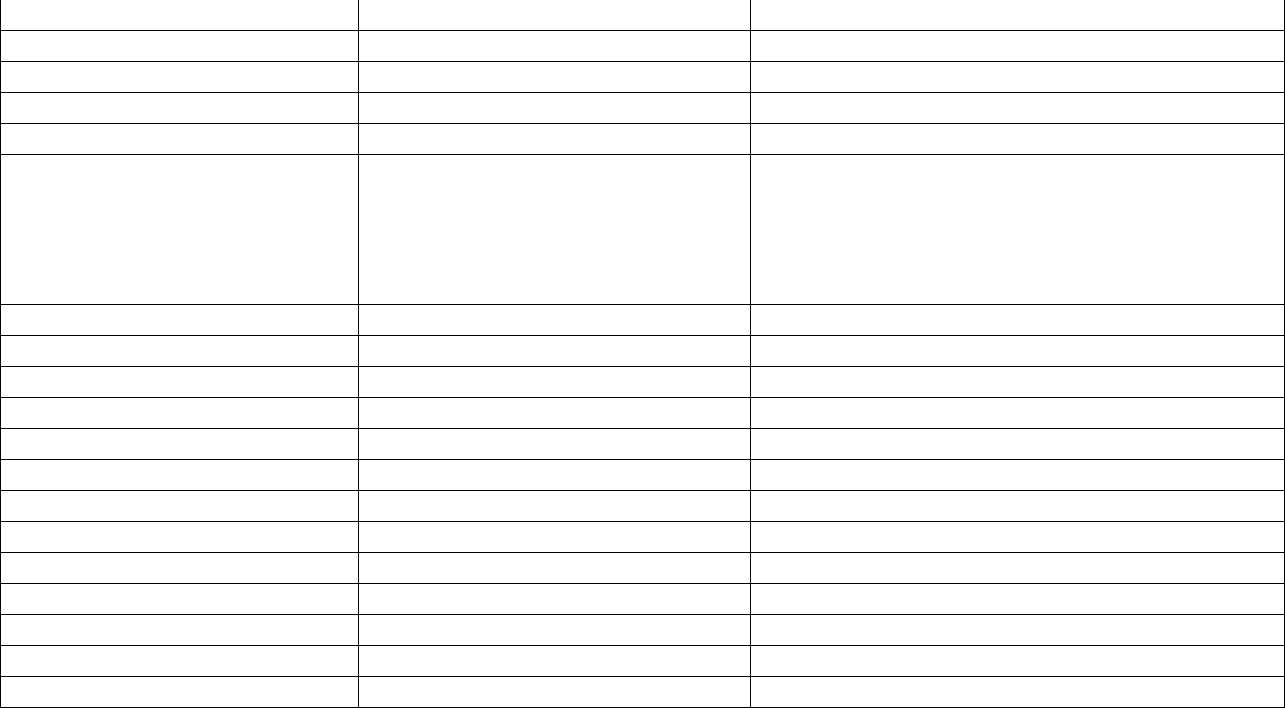

Table 1. Proportion of selected articles relative to the total number of articles published by each

journal between 1994 and 2016.

Journal

Freq. selected

articles

Freq. articles

(1994-2016)

% of all articles

(1994-2016)

ICAME

23

125

18.40

DSH

7

707

0.99

IJCL

69

372

18.55

CLLT

27

129

20.93

Corpora

14

114

12.28

Total

140

1447

14.23

Firstly, it is evident that spoken data has been relied upon in a considerable proportion of

(corpus) linguistic research; based on the research articles retrieved in my search, much appears

to have been said about the nature of spoken language. Secondly, it appears that research is

dominated by the English language; 117 out of the 140 studies use at least one corpus containing

some variety of English. Of these, 77% use British English data. It is not clear whether this

reflects in part the early domination of British English in the field of English Corpus Linguistics

(ECL) (McEnery & Hardie 2012: 72), or whether these journals happen to favour publishing

15

research which includes British English data, or both. Despite this, there has been considerable

research on spoken corpora of other languages, including:

• Belgian Dutch (Grondelaers & Speelman 2007)

• Brazilian Portuguese (Berber Sardinha et al. 2014)

• Columbian Spanish (Brown et al. 2014)

• Danish (Henrichsen & Allwood 2005, Gregersen & Barner-Rasmussen 2011)

• Dutch (Mortier & Degand 2009, Defranq & de Sutter 2010, van Bergen & de Swart

2010, Tummers et al. 2014, Rys & de Cuypere 2014, Hanique et al. 2015)

• Finnish (Karlsson 2010, Helasvuo & Kyröläinen 2016)

• French (Mortier & Degand 2009, Defranq & de Sutter 2010)

• German (Karlsson 2010, Schmidt 2016)

• Korean (Kang 2001, Oh 2005, Kim 2009, Hadikin 2014)

• Mandarin Chinese (Tseng 2005, Xiao & McEnery 2006, Wong 2006)

• Māori (King et al. 2011)

• Nepali (Hardie 2008)

• Norwegian (Drange et al. 2014)

• Russian (Janda et al. 2010)

• Slovene (Verdonik 2015)

• Spanish (Sanchez & Cantos-Gomez 1997, Butler 1998, Trillo & García 2001, de la Cruz

2003, Biber et al. 2006, Santamaría-García 2011, Drange et al. 2014)

• Swedish (Henrichsen & Allwood 2005, Karlsson 2010)

Overall, the studies can be categorised into several general areas of linguistic research,

including, most prominently (numbers in brackets indicate frequency):

• cognitive linguistics (6),

• discourse analysis/conversation analysis (15),

• grammar (59),

• language teaching/language acquisition (9),

• lexical semantics (17),

• sociolinguistics (17).

16

Other less frequently occurring linguistic sub-disciplines include pragmatics (e.g. Ronan 2015),

morphology (e.g. Moon 2011) and – emerging only recently (since 2014) – phonetics (e.g.

Fromont & Watson 2016), as well as methodological papers about corpus/tool construction (e.g.

Andersen 2016, Jacquin 2016, Schmidt 2016). Together, these areas seem to generally match with

the predictions of Leech (1993). However, it is apparent that the findings of some groups of

papers work together coherently towards some shared goal, whereas others appear to select one

aspect of language and report on its use, filling gaps in knowledge as they are identified. Starting

with those which do appear to have achieved coherence, I will describe this difference with

examples.

The first area which does appear to produce sets of research that link clearly between one

another is the application of corpus data in cognitive/psycho-linguistic research. Interestingly

this is an area for which Leech (1993) did not predict spoken corpora such as the Spoken

BNC1994 would be of use. Articles reporting in this area of research did not become prominent

in the journals considered here until the second half of the 2000s. Typically, these studies present

comparisons of traditionally-elicited data with frequency information from large representative

corpora. For example, Mollin (2009) found little comparability between word association

responses from psycholinguistic elicitation tests and collocations of the same node words in the

BNC1994. She concluded that despite the value of elicitation data, it can say “little about

language production apart from the fact that it is a different type of task” (Mollin 2009: 196).

Likewise, McGee (2009) carried out a contrastive analysis of adjective-noun collocations using

the BNC1994 and the intuitions of English language lecturers, generating similar results. It seems

that the shared goal of investigating the relationship between elicitation and corpus data is the

driving motivation for both pieces of research, irrespective of domain or topic.

A similar claim can be made of language teaching. It seems that the overarching effort is

to assess the extent to which corpus data can be used in the teaching of language. Because of this

clearly defined aim, it is not difficult to see how individual studies relate to one another. Grant

(2005) investigated the relationship between a set of widely attested so-called ‘core idioms’ and

their frequency in the BNC1994, finding that none of the idioms occurred within the top 5,000

most frequent words in the BNC1994. She concluded, however, that corpus examples of idioms

would be useful in pedagogy to better equip learners for encountering idiomatic multi-word units

and the common contexts in which they occur (Grant 2005: 448). Likewise Shortall (2007)

compared the representation of the present perfect in ELT textbooks with its occurrence in the

spoken component of the Bank of English. He found that textbooks did not adequately

represent the actual use of the present perfect (based on corpus frequency), but that “pedagogic

17

considerations may sometimes override” this reality for the purpose of avoiding over-

complication in teaching (Shortall 2007: 179). Though these studies do select individual features,

both investigations appear to contribute convincingly towards a shared goal.

Other streams of research do not appear to have such explicitly stated shared

applications; however, the broader value of the research is implicitly understood by the research

community. For example, it seems that the study of spoken grammar is very popular (42% of the

articles in my review). These articles tend to produce findings about a variety of seemingly

unrelated features, filling gaps in knowledge about grammar. There is less of an explicitly stated

shared purpose than the previous areas described. For example, research papers based on the

BNC1994 have analysed features such as adjective order (Wulff 2003), -wise viewpoint adverbs

(Cowie 2006), pre-verbal gerund pronouns (Lyne 2006), the get-passive (Rühlemann 2007),

embedded clauses (Karlsson 2007), future progressives (Nesselhauf & Römer 2007), progressive

passives (Smith & Rayson 2007), quotative I goes (Rühlemann 2008), linking adverbials (Liu

2008), catenative constructions (Gesuato & Facchinetti 2011), dative alternation (Theijssen et al.

2013), satellite placement (Rys & de Cuypere 2014) and pronouns (Timmis 2015, Tamaredo &

Fanego 2016).

Likewise, research papers that address sociolinguistic variation seem to report on a wide

range of features. For example, using the Spoken BNC1994’s own domain classifications,

Nokkonen (2010) analysed the sociolinguistic variation of need to. Säily (2011) considered

variation in terms of morphological productivity, while the filled pauses uh and um were

investigated in both the BNC1994 and the London-Lund Corpus (Tottie 2011). Evidently there

is little relation between studies such as these, at least in terms of their results.

The motivation behind choosing a particular feature appears to be that of gap-filling;

finding some feature of language that has yet to be investigated in a certain way and laying claim

to the ‘gap’ at the beginning of the article. Take, for example, the opening paragraph of

Nesselhauf and Römer (2007: 297, emphasis added):

While the lexical-grammatical patterns of the future time expressions will, shall and going to

have received a considerable amount of attention, particularly in recent years […] the

patterns of the progressive with future time reference (as in He’s arriving tomorrow) have

hardly been empirically investigated to date. […] In this paper, we would like to

contribute to filling this gap by providing a comprehensive corpus-driven analysis of

lexical-grammatical patterns of the progressive with future time reference in spoken

British English.

18

Here the justification for the study is simply that the future progressive has been the subject of

little research thus far. Although there is no explicit discussion of how the investigation of the

future progressive would contribute valuably to some body of knowledge about grammar, it is

clear in this and the other studies considered that a broader understanding of language is being

contributed to by this work.

2.3.3 Grammars

A limitation of my study of journal articles is that I only considered research articles from

five journals, published since 1994. It could be that my observation of largely isolated pieces of

grammatical research, for example, is more a characteristic of journal publication rather than the

state of affairs for research on spoken grammar. In this respect, it is important to consider how

larger, potentially more comprehensive bodies of grammatical research have used spoken corpus

data as evidence. In this section I take three recently published English language grammars and

discuss their use of spoken corpora.

• The Cambridge Grammar of the English Language (Huddleston & Pullum 2002)

This is a “synchronic, descriptive grammar of general-purpose, present-day, international

Standard English” (Huddleston & Pullum 2002: 2). It drew upon the intuitions of its

contributors, consultations with other native speakers of English, dictionary data, academic

research on grammar and, most relevantly, corpus data (Huddleston & Pullum 2002: 11).

However, according to Huddleston and Pullum (2002: 13), it used:

• The Brown corpus (American English),

• The LOB corpus (British English),

• The Australian Corpus of English (ACE), and

• The Wall Street Journal Corpus.