The International Handbook of

School Effectiveness Research


Charles Teddlie and David Reynolds

London and New York

First published 2000 by Falmer Press

11 New Fetter Lane, London EC4P 4EE

Simultaneously published in the USA and Canada

by Falmer Press

19 Union Square West, New York, NY 10003

This edition published in the Taylor & Francis e-Library, 2003.

Falmer Press is an imprint of the Taylor & Francis Group

© 2000 C.Teddlie and D.Reynolds

Cover design by Caroline Archer

All rights reserved. No part of this book may be reprinted or

reproduced or utilized in any form or by any electronic,

mechanical, or other means, now known or hereafter

invented, including photocopying and recording, or in any

information storage or retrieval system, without permission in

writing from the publishers.

British Library Cataloguing in Publication Data

A catalogue record for this book is available from the British Library

Library of Congress Cataloging in Publication Data

A catalogue record for this book has been requested

ISBN 0-203-45440-5 Master e-book ISBN

ISBN 0-203-76264-9 (Adobe eReader Format)

ISBN 0-750-70607-4 (pbk)


Contents

List of Figures vii

List of Tables viii

Preface x

Acknowledgments xii

SECTION 1

The Historical and Intellectual Foundations of School Effectiveness

Research 1

1 An Introduction to School Effectiveness Research 3

DAVID REYNOLDS AND CHARLES TEDDLIE, WITH BERT CREEMERS, JAAP

SCHEERENS AND TONY TOWNSEND

2 Current Topics and Approaches in School Effectiveness Research:

The Contemporary Field 26

CHARLES TEDDLIE AND DAVID REYNOLDS, WITH SHARON POL

SECTION 2

The Knowledge Base of School Effectiveness Research 53

3 The Methodology and Scientific Properties of School Effectiveness

Research 55

CHARLES TEDDLIE, DAVID REYNOLDS AND PAM SAMMONS

4 The Processes of School Effectiveness 134

DAVID REYNOLDS AND CHARLES TEDDLIE

5 Context Issues Within School Effectiveness Research 160

CHARLES TEDDLIE, SAM STRINGFIELD AND DAVID REYNOLDS


SECTION 3

The Cutting Edge Issues of School Effectiveness Research 187

6 Some Methodological Issues in School Effectiveness Research 189

EUGENE KENNEDY AND GARRETT MANDEVILLE

7 Linking School Effectiveness and School Improvement 206

DAVID REYNOLDS AND CHARLES TEDDLIE, WITH DAVID HOPKINS AND

SAM STRINGFIELD

8 School Effectiveness: The International Dimension 232

DAVID REYNOLDS

9 School Effectiveness and Education Indicators 257

CAROL FITZ-GIBBON AND SUSAN KOCHAN

10 Theory Development in School Effectiveness Research 283

BERT CREEMERS, JAAP SCHEERENS AND DAVID REYNOLDS

SECTION 4

The Future of School Effectiveness Research 299

11 School Effectiveness Research and the Social and Behavioural Sciences 301

CHARLES TEDDLIE AND DAVID REYNOLDS

12 The Future Agenda for School Effectiveness Research 322

DAVID REYNOLDS AND CHARLES TEDDLIE

Notes on contributors 344

References 345

Index 397


List of Figures

1.1 Stages in the evolution of SER in the USA 5

7.1 The relationship between school and classroom conditions 221

12.1 Sociometric analysis of an effective school 328

12.2 GCSE average points score versus per cent of pupils entitled to FSM

(1992) 334

12.3 GCSE average points score versus per cent of pupils entitled to FSM

(1993) 335

12.4 High School achievement: Spring 1993 336

12.5 High School achievement: Spring 1994 337


List of Tables

1.1 Dutch school effectiveness studies: Total number of positive and

negative correlations between selected factors and educational

achievement 20

2.1 Respondents’ ratings of importance of each topic from first round

survey 30

2.2 Descriptive summary of respondents’ rankings of topics from second

round survey 33

2.3 Topic loadings from factor analysis of responses of second round

participants: three factor solution 34

2.4 Topic loadings from factor analysis of responses of second round

participants: one factor solution 35

2.5 Average rank scores given by scientists and humanists to topics 36

2.6 Comparison of rankings for all respondents, humanistic and scientific 37

2.7 Average rank scores given by humanists, pragmatists and scientists to

topics 38

2.8 Key topic areas in school effectiveness research 50

3.1 Scientific properties of school effects cross listed with methodological

issues in educational research 56

3.2 Percentage of variance in students’ total GCSE performance and

subject performance due to school and year 125

3.3 Correlations between estimates of secondary schools’ effects on

different outcomes at GCSE over three years 125

3.4 Variance components cross-classified model for total GCSE

examination performance score as response 132

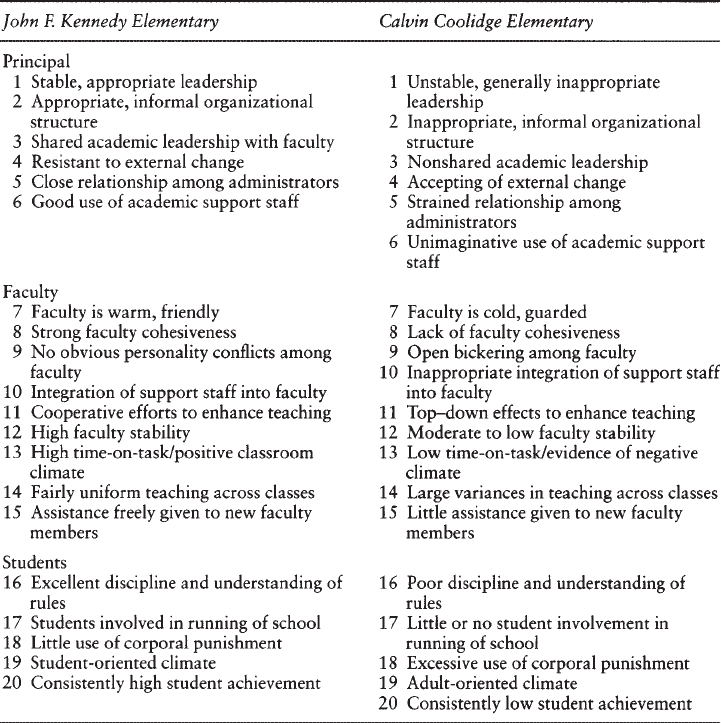

4.1 Contrasts between Kennedy and Coolidge elementary schools 138

4.2 Effective schools’ characteristics identified in two recent reviews 142

4.3 The processes of effective schools 144

5.1 Comparison of methodologies used in seven school effects studies

with context variables 161

5.2 Contextual differences in elementary schools due to urbanicity 176

7.1 Characteristics of two school improvement paradigms 214

7.2 A framework for school improvement: some propositions 221

8.1 An overview of recent Dutch school effectiveness studies 235

8.2 Variable names, descriptions, means and standard deviations for

Nigeria and Swaziland 236


8.3 Factors associated with effectiveness in different countries 238

8.4 Factors affecting achievement in developing societies 240

8.5 Percentage correct items for various countries (IAEP Maths) 243

8.6 Overall average percentage correct of all participants (IAEP Science) 244

8.7 The IEA and IAEP international studies of educational achievement 245

8.8 Variance explained within countries 249

8.9 Average Likert ratings of case study dimensions by country type 254

8.10 Average rank orders of case study dimensions by country type 254

8.11 Variance components computation of ISERP country level data 255

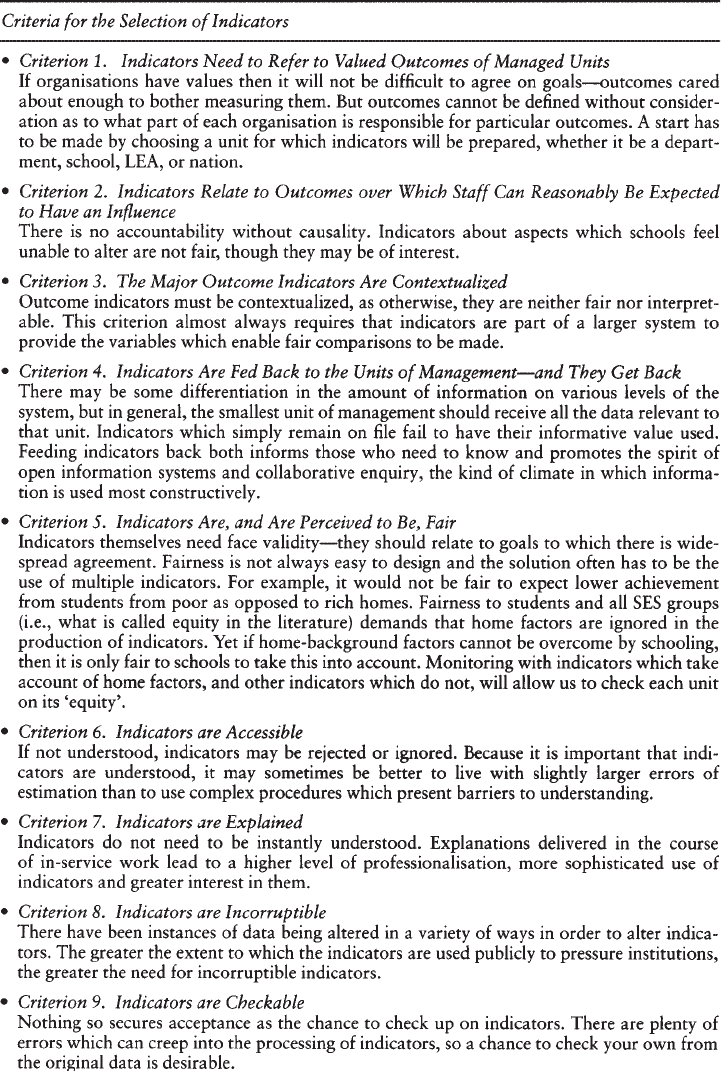

9.1 Indicator selection criteria 260

9.2 A classification of types of indicator 262

9.3 The four OECD networks for indicators 270

12.1 Comparison of variance in scores on teacher behaviour for effective

versus ineffective schools 331


Preface

School effectiveness research, and school effectiveness researchers, are now central to

the educational discourse of many societies, a situation which represents a marked

change to that of or 20 years ago. Then, there was an overwhelming sense of pessimism

as to the capacity of the educational system to improve the life chances and

achievements of children, since their home background was regarded as limiting their

potential, in a quite deterministic fashion. Now, the belief that effective schools can

have positive effects and the practices associated with effective schooling are in existence

within most countries’ educational communities of practitioners, researchers and

policymakers.

In spite of the centrality of the discipline, it has long been clear to us that there had

been no attempt to compile the ‘normal science’ of the discipline and record its

knowledge base in a form that enables easy access. Perhaps because of a lingering

ethno-centrism in the research community, there was also a tendency for the school

effectiveness community in different countries to miss the achievements and the

perspectives of those in societies other than their own.

The result of this was that people wanting to access the effectiveness knowledge

base found this difficult and probably ended up at a lower level of understanding

than was good for them, or for the discipline. The result was also that national

perspectives on the knowledge base predominated, rather than the international ones

that predominate in most intellectual traditions.

It was to eliminate these ‘downsides’, and to gain the ‘upside’ of clear disciplinary

foundations, that we decided to write this Handbook. It has taken us six years to

complete and has involved the addition of many friends and colleagues to the writing

task in areas where we have needed specialist help. We are particularly grateful also

to the following colleagues for their helpful comments on our final draft:

Bert Creemers

Peter Daly

Peter Hill

David Hopkins

Peter Mortimore

Pam Sammons

Jaap Scheerens

Louise Stoll

Sam Stringfield


Sally Thomas

Tony Townsend

Peter Tymms

We are also very grateful to Avril Silk who has typed successive versions of the book

with speed and accuracy.

The discipline is now moving so rapidly, however, that we suspect that this first

edition of the Handbook will need to be followed by further editions to keep pace

with the volume of material. If there is new material that colleagues in the field have

produced, and/or if there is any existing material that we have missed in this edition

of the Handbook, please let us know at the address/numbers below.

The sooner that the Handbook becomes the useful property of the discipline as a

whole, the happier we will both be.

Charles Teddlie

David Reynolds

Professor Charles Teddlie

Department of ELRC

College of Education

111 Peabody Hall

Louisiana State University

Baton Rouge, LA 70803 4721

USA

Tel: (225) 388 6840

Fax: (225) 388 6918


Professor David Reynolds

Department of Education

University of Newcastle upon Tyne

St Thomas Street

Newcastle upon Tyne

UK

Tel: +44 (191) 2226598

Fax: +44 (191) 2225092



Acknowledgments

The authors would like to thank the following for their kind permission to reprint

their work:

Table 1.1 Creemers, B.P.M. and Osinga, N. (eds) (1995) ICSEI Country Reports,

Leeuwarden, Netherlands: ICSEI Secretariat.

Tables 3.2 and 3.3 Thomas, S., Sammons, P. and Mortimore, P. (1995a) ‘Determining

what adds value to student achievement’, Educational Leadership International, 58,

6, pp.19–22.

Table 3.4 Goldstein, H. and Sammons, P. (1995) The Influence of Secondary and

Junior Schools on Sixteen Year Examination Performance: A Cross-classified Multilevel

Analysis, London: ISEIC, Institute of Education.

Tables 4.1 and 5.2 Teddlie, C. and Stringfield, S. (1993) Schools Do Make a Difference: Lessons

Learned from a 10-year Study of School Effects, New York: Teachers College Press.

Tables 4.2 and 4.3 Levine, D.U. and Lezotte, L.W. (1990) Unusually Effective Schools:

A Review and Analysis of Research and Practice, Madison, WI: National Center for

Effective Schools Research and Development; Sammons, P., Hillman, J. and Mortimore,

P. (1995b) Key Characteristics of Effective Schools: A Review of School Effectiveness

Research, London: Office for Standards in Education and Institute of Education.

Figure 7.1 and Table 7.2 Hopkins, D. and Ainscow, M. (1993) ‘Making sense of

school improvement: An interim account of the IQEA Project’, Paper presented to the

ESRC Seminar Series on School Effectiveness and School Improvement, Sheffield.

Tables 8.1, 8.3 and 8.8 Scheerens, J., Vermeulen, C.J. and Pelgrum, W.J. (1989c)

‘Generalisability of school and instructional effectiveness indicators across nations’, in

Creemers, B.P.M. and Scheerens, J. (eds) Developments in School Effectiveness Research,

special issue of International Journal of Educational Research, 13, 7, pp.789–99.

Table 8.2 Lockheed, M.E. and Komenan, A. (1989) ‘Teaching quality and student

achievement in Africa: The case of Nigeria and Swaziland’, Teaching and Teacher

Education, 5, 2, pp.93–113.


Table 8.4 Fuller, B. (1987) ‘School effects in the Third World’, Review of Educational

Research, 57, 3, pp.255–92.

Tables 8.5 and 8.6 Foxman, D. (1992) Learning Mathematics and Science (The Second

International Assessment of Educational Progress in England), Slough: National

Foundation for Educational Research.

Table 9.1 Fitz-Gibbon, C.T. (1996) Monitoring Education: Indicators, Quality and

Effectiveness, London, New York: Cassell.

Section 1

The Historical and

Intellectual Foundations of

School Effectiveness Research


1 An Introduction to School

Effectiveness Research

David Reynolds and Charles Teddlie,

with Bert Creemers, Jaap Scheerens

and Tony Townsend

Introduction

School effectiveness research (SER) has emerged from virtual total obscurity to a now

central position in the educational discourse that is taking place within many countries.

From the position 30 years ago that ‘schools make no difference’, which was assumed to

be the conclusion of the Coleman et al. (1966) and Jencks et al. (1971) studies, there

is now a widespread assumption internationally that schools affect children’s

development, that there are observable regularities in the schools that ‘add value’ and

that the task of educational policies is to improve all schools in general, and the more

ineffective schools in particular, by transmission of this knowledge to educational

practitioners.

Overall, there have been three major strands of SER:

• School Effects Research—studies of the scientific properties of school effects

evolving from input-output studies to current research utilizing multilevel models;

• Effective Schools Research—research concerned with the processes of effective

schooling, evolving from case studies of outlier schools through to contemporary

studies merging qualitative and quantitative methods in the simultaneous study of

classrooms and schools;

• School Improvement Research—examining the processes whereby schools can be

changed, utilizing increasingly sophisticated models that have gone beyond simple

applications of school effectiveness knowledge to ‘multiple lever’ models.

In this chapter, we aim to outline the historical development of these three areas of

the field over the past 30 years, looking in detail at the developments in the United

States where the field originated and then moving on to look at the United Kingdom,

the Netherlands and Australia, where growth in SER began later but has been

particularly rapid. We then attempt to conduct an analysis of how the various phases

of development of the field cross nationally are linked with the changing perceptions

of the educational system that have been clearly visible within advanced industrial

societies over the various historic ‘epochs’ that have been in evidence over these last

30 years. We conclude by attempting to conduct an ‘intellectual audit’ of the existing

school effectiveness knowledge base and its various strengths and weaknesses

within various countries, as a prelude to outlining in Chapter 2 how we have structured

our task of reviewing all the world’s literature by topic areas across countries.

SER in the United States

Our review of the United States is based upon numerous summaries of the literature,

many of which concentrated on research done during the first 20 years of school

effectiveness research (1966–85). A partial list of reviews of the voluminous literature

on school effects in the USA during this period would include the following: Anderson,

1982; Austin, 1989; Averch et al., 1971; Bidwell and Kasarda, 1980; Borger et al.,

1985; Bossert, 1988; Bridge et al., 1979; Clark, D., et al., 1984; Cohen, M., 1982;

Cuban, 1983; Dougherty, 1981; Geske and Teddlie, 1990; Glasman and Biniaminov,

1981; Good and Brophy, 1986; Good and Weinstein, 1986; Hanushek, 1986; Levine

and Lezotte, 1990; Madaus et al., 1980; Purkey and Smith, 1983; Ralph and Fennessey,

1983; Rosenholtz, 1985; Rowan et al., 1983; Sirotnik, 1985; Stringfield and Herman,

1995, 1996; Sweeney, 1982; Teddlie and Stringfield, 1993.

Figure 1.1 presents a visual representation of four overlapping stages that SER has

been through in the USA:

• Stage 1, from the mid-1960s and up until the early 1970s, involved the initial

input-output paradigm, which focused upon the potential impact of school human

and physical resources upon outcomes;

• Stage 2, from the early to the late 1970s, saw the beginning of what were commonly

called the ‘effective schools’ studies, which added a wide range of school processes

for study and additionally looked at a much wider range of school outcomes than

the input-output studies in Stage 1;

• Stage 3, from the late 1970s through the mid-1980s, saw the focus of SER shift

towards the attempted incorporation of the effective schools ‘correlates’ into schools

through the generation of various school improvement programmes;

• Stage 4, from the late 1980s to the present day, has involved the introduction of

context factors and of more sophisticated methodologies, which have had an

enhancing effect upon the quality of all three strands of SER (school effects research,

effective schools research, school improvement research).

We now proceed to analyse these four stages in detail.

Stage 1: The Original Input-Output Paradigm

Stage 1 was the period in which economically driven input-output studies

predominated. These studies (designated as Type 1A studies in Figure 1.1) focused on

inputs such as school resource variables (e.g. per pupil expenditure) and student

background characteristics (variants of student socio-economic status or SES) to predict

school outputs. In these studies, school outcomes were limited to student achievement

on standardized tests. The results of these studies in the USA (Coleman et al., 1966;

Jencks et al., 1972) indicated that differences in children’s achievement were more

strongly associated with societally determined family SES than with potentially

malleable school-based resource variables.

Figure 1.1 Stages in the evolution of SER in the USA


The Coleman et al. (1966) study utilized regression analyses that mixed levels of

data analysis (school, individual pupil) and concluded that ‘schools bring little influence

to bear on a child’s achievement that is independent of his background and general

social context’ (p.325). Many of the Coleman school factors were related to school

resources (e.g. per pupil expenditure, school facilities, number of books in the library),

which were not very strongly related to student achievement. Nevertheless, 5–9 per

cent of the total variance in individual student achievement was uniquely accounted

for by school factors (Coleman et al., 1966). (See Chapter 3 for a more extensive

description of the results from the Coleman Report.) Daly noted:

The Coleman et al. (1966) survey estimate of a figure of 9 per cent of variance in

an achievement measure attributable to American schools has been something of

a bench mark, despite the critical reaction.

(Daly, 1995a, p.306)

While there were efforts to refute the Coleman results (e.g. Mayeske et al., 1972;

McIntosh, 1968; Mosteller and Moynihan, 1972) and methodological flaws were

found in the report, the major findings are now widely accepted by the educational

research community. For example, the Mayeske et al. (1972) re-analysis indicated

that 37 per cent of the variance was between schools, but they found that much of

that variance was common to both student background variables and school

variables. The issue of multicollinearity between school and family background

variables has plagued much of the research on school effects, and is a topic we

return to frequently later.

In addition to the Coleman and Jencks studies, there were several other studies

conducted during this time within a sociological framework known as the ‘status-

attainment literature’ (e.g. Hauser, 1971; Hauser et al., 1976). The Hauser studies,

conducted in high schools in the USA, concluded that variance between schools was

within the 15–30 per cent range and was due to mean SES differences, not to

characteristics associated with effective schooling. Hauser, R., et al. (1976) estimated

that schools accounted for only 1–2 per cent of the total variance in student achievement

after the impact of the aggregate SES characteristics of student bodies was controlled

for statistically.
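The ‘percentage of variance between schools’ figures quoted above (37 per cent in the Mayeske et al. re-analysis, 15–30 per cent in the Hauser studies) are shares of the total variance in achievement that lie between schools rather than within them. As a minimal sketch, assuming a balanced design (the same number of pupils in every school) and no covariates, the one-way ANOVA estimator of the intraclass correlation, ICC(1), computes that share. This is a standard textbook estimator, not the procedure used in the studies cited; the function name and the toy scores are hypothetical. It also does not reproduce the Hauser adjustment for aggregate SES, which requires adding school-mean SES to a model.

```python
from statistics import mean


def icc1_balanced(groups):
    """Hypothetical helper: ANOVA estimate of ICC(1) for a balanced design.

    groups: list of lists, one inner list of pupil scores per school,
    with every school contributing the same number of pupils n.
    Returns the estimated share of total variance lying between schools.
    The estimate can be negative when between-school differences are
    smaller than chance alone would produce.
    """
    k = len(groups)        # number of schools
    n = len(groups[0])     # pupils per school (assumed equal)
    if k < 2 or n < 2 or any(len(g) != n for g in groups):
        raise ValueError("need >= 2 schools, each with the same n >= 2 scores")

    grand_mean = mean(x for g in groups for x in g)
    school_means = [mean(g) for g in groups]

    # Between-school and within-school sums of squares
    ss_between = n * sum((m - grand_mean) ** 2 for m in school_means)
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, school_means) for x in g)

    # Mean squares with their degrees of freedom (k - 1 and N - k)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (k * n - k)

    return (ms_between - ms_within) / (ms_between + (n - 1) * ms_within)


if __name__ == "__main__":
    # Hypothetical toy data: two schools, two pupils each
    scores = [[1, 3], [5, 7]]
    print(round(icc1_balanced(scores), 3))
```

With the toy scores most of the spread comes from the gap between the two school means, so the estimate is high (14/18); real achievement data of the kind discussed here typically yield much smaller between-school shares.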

As noted by many reviewers (e.g. Averch et al., 1971; Brookover et al., 1979;

Miller, 1983), these early economic and sociological studies of school effects did not

include adequate measures of school social psychological climate and other classroom/

school process variables, and their exclusion contributed to the underestimation of

the magnitude of school effects. Averch et al. (1971) reviewed many of these early

studies and concluded that student SES background variables were the only factors

consistently linked with student achievement. This conclusion of the Averch review

was qualified, however, by the limitations of the extant literature that they noted,

including: the fact that very few studies had actually measured processes (behavioural

and attitudinal) within schools, that the operational definitions of those processes

were very crude, and that standardized achievement was the only outcome measure

(Miller, 1983).


Stage 2: The Introduction of Process Variables and Additional Outcome

Variables into SER

The next stage of development in SER in the USA involved studies that were conducted

to dispute the results of Coleman and Jencks. Some researchers studied schools that

were doing exceptional jobs of educating students from very poor SES backgrounds

and sought to describe the processes ongoing in those schools. These studies are

designated as Type 2A links in Figure 1.1. In these Type 2A studies, traditional input

variables are subsumed under process variables (e.g. available library resources become

part of the processes associated with the library). These studies also expanded the

definition of the outputs of schools to include other products, such as those measured

by attitudinal and behavioural indicators.

The earlier studies in this period were focused on urban, low-SES, elementary schools

because researchers believed that success stories in these environments would dispel

the belief that schools made little or no difference. In a classic study from this period,

Weber (1971) conducted extensive case studies of four low-SES inner-city schools

characterized by high achievement at the third grade level. His research emphasized

the importance of the actual processes ongoing at schools (e.g. strong leadership, high

expectations, good atmosphere, and a careful evaluation of pupil progress), while the

earlier studies by Coleman and Jencks had focused on only static, historical school

resource characteristics.

Several methodological advances occurred in the USA literature during the decade

of the 1970s, such as the inclusion of more sensitive measures of classroom input, the

development of social psychological scales to measure school processes, and the

utilization of more sensitive outcome measures. These advances were later incorporated

in the more sophisticated SER of both the USA and of other countries in the 1990s.

The inclusion of more sensitive measures of classroom input in SER involved the

association of student-level data with the specific teachers who taught the students.

This methodological advance was important for two reasons: it emphasized input

from the classroom (teacher) level, as well as the school level; and it associated student-

level output variables with student-level input variables, rather than school-level input

variables.

The Bidwell and Kasarda (1980) review of school effects concluded that some

positive evidence was accumulating in studies that measured ‘school attributes at a

level of aggregation close to the places where the work of schooling occurs’ (p.403).

Their review highlighted the work of Murnane (1975) and Summers and Wolfe (1977),

both of which had painstakingly put together datasets in which the inputs of specific

teachers were associated with the particular students that they had taught. (The Bidwell

and Kasarda review also concluded that studies including information on curricular

track (e.g. Alexander, K., and McDill, 1976; Alexander, K., et al., 1978; Heyns, 1974)

were providing valuable information on the potential impact of schooling on

achievement.)

The research of Summers and Wolfe (1977), Murnane (1975), and others (e.g.

Armor et al., 1976; Winkler, 1975) demonstrated that certain characteristics of

classroom teachers were significantly related to the achievement of their students.

The Summers and Wolfe (1977) study utilized student-level school inputs, including

characteristics of the specific teachers who taught each student. The researchers were

able to explain around 25 per cent of the student level variance in gain scores using a

mixture of teacher and school level inputs. Summers and Wolfe did not report the

cumulative effect for school factors as opposed to SES factors, but the quality of

college the teachers attended was a significant predictor of students’ learning rate (as

it was in Winkler, 1975).

Murnane’s (1975) research indicated that information on classroom and school

assignments increased the amount of predicted variance in student achievement by 15

per cent in regression models in which student background and prior achievement

had been entered first. Principals’ evaluations of teachers were also a significant predictor

in this study (as it was in Armor et al., 1976) (see Chapter 3 for more details regarding

both the Summers and Wolfe and the Murnane studies).

Later reviews by Hanushek (1981, 1986) indicated that teacher variables that are

tied to school expenditures (e.g. teacher-student ratio, teacher education, teacher

experience, teacher salary) demonstrated no consistent effect on student achievement.

Thus, the Coleman Report and re-analyses (using school financial and physical

resources as inputs) and the Hanushek reviews (assessing teacher variables associated

with expenditures) produced no positive relationships with student achievement.

On the other hand, qualities associated with human resources (e.g. student sense

of control of their environment, principals’ evaluations of teachers, quality of teachers’

education, teachers’ high expectations for students) demonstrated significantly positive

relationships to achievement in studies conducted during this period (e.g. Murnane,

1975; Link and Ratledge, 1979; Summers and Wolfe, 1977; Winkler, 1975). Other

studies conducted in the USA during this period indicated the importance of peer

groups on student achievement above and beyond the students’ own SES background

(e.g. Brookover et al., 1979; Hanushek, 1972; Henderson et al., 1978; Summers and

Wolfe, 1977; Winkler, 1975).

These results led Murnane (1981) to conclude that:

The primary resources that are consistently related to student achievement are

teachers and other students. Other resources affect student achievement primarily

through their impact on the attitudes and behaviours of teachers and students.

(Murnane, 1981, p.33)

The measures of teacher behaviours and attitudes utilized in school effects studies

have evolved considerably from the archived data that Summers and Wolfe (1977)

and Murnane (1975) used. Current measures of teacher inputs include direct

observations of effective classroom teaching behaviours which were identified through

the teacher effectiveness literature (e.g. Brophy and Good, 1986; Gage and Needels,

1989; Rosenshine, 1983).

Another methodological advance from the 1970s concerned the development of

social psychological scales that could better measure the educational processes ongoing

at the school and class levels. These scales are more direct measures of student, teacher,

and principal attitudes toward schooling than the archived data used in the Murnane

(1975) and Summers and Wolfe (1977) studies.

As noted above, a major criticism of the early school effects literature was that

school/classroom processes were not adequately measured, and that this contributed

to school level variance being attributed to family background variables rather than


educational processes. Brookover et al. (1978, 1979) addressed this criticism by using

surveys designed to measure student, teacher, and principal perceptions of school

climate in their study of 68 elementary schools in Michigan. Their work built upon

previous attempts to measure school climate by researchers such as McDill, Rigsby,

and their colleagues, who had earlier demonstrated significant relationships between

climate and achievement (e.g. McDill et al., 1967, 1969; McDill and Rigsby, 1973).

These school climate measures included items from four general sources (Brookover

et al., 1979; Brookover and Schneider, 1975; Miller, 1983):

1 student sense of academic futility, which had evolved from the Coleman et al.

(1966) variable measuring student sense of control and the internal/external locus

of control concept of Rotter (1966);

2 academic self concept, which had evolved in a series of studies conducted by

Brookover and his colleagues from the more general concept of self esteem

(Coopersmith, 1967; Rosenberg, 1965);

3 teacher expectations, which had evolved from the concept of the self fulfilling

prophecy in the classroom (Cooper and Good, 1982; Rosenthal and Jacobson,

1968), which had in turn evolved from Rosenthal’s work on experimenter bias

effects (Rosenthal, 1968, 1976; Rosenthal and Fode, 1963);

4 academic or school climate, which had roots going back to the work of McDill

and Rigsby (1973) on concepts such as academic emulation and academically

oriented status systems and the extensive work on organizational climate (e.g.

Halpin and Croft, 1963; Dreeben, 1973; Hoy et al., 1991).

Brookover and his colleagues developed 14 social psychological climate scales based

on several years of work with these different sources. The Brookover et al. (1978,

1979) study investigated the relationship among these school level climate variables,

school level measures of student SES, school racial composition, and mean school

achievement. Their study also clearly exemplified the thorny problems of

multicollinearity among school climate and family background variables:

1 when SES and per cent white were entered into the regression model first, school

climate variables accounted for only 4.1 per cent of the school level variance in

achievement (the increment from 78.5 per cent to 82.6 per cent of the between

schools variance);

2 when school climate variables were entered into the regression model first, SES

and per cent white accounted for only 10.2 per cent of the school level variance in

achievement (the increment from 72.5 per cent to 82.7 per cent of the between

schools variance);

3 in the model with school climate variables entered first, student sense of academic

futility (the adaptation of the Coleman Report variable measuring student sense

of control) explained about half of the variance in school level reading and

mathematics achievement.
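Why the two orders of entry give such different increments can be seen with simple arithmetic on the reported R² values. As a minimal sketch, the two-block commonality partition below (a standard decomposition; the source does not say Brookover et al. reported their results in this form) splits the explained between-school variance into a part unique to climate, a part unique to SES and racial composition, and a part the two blocks share. The function name is hypothetical. The two full models are reported as 82.6 and 82.7 per cent; the sketch uses 82.6, so the SES block's unique share comes out as 10.1 rather than the reported 10.2.

```python
def two_block_commonality(r2_a, r2_b, r2_ab):
    """Hypothetical helper: split a two-block R^2 into unique and shared parts.

    r2_a:  R^2 (per cent) with block A entered alone
    r2_b:  R^2 (per cent) with block B entered alone
    r2_ab: R^2 (per cent) with both blocks entered
    """
    unique_a = r2_ab - r2_b        # increment for A when entered after B
    unique_b = r2_ab - r2_a        # increment for B when entered after A
    common = r2_a + r2_b - r2_ab   # variance the two blocks explain jointly
    return unique_a, unique_b, common


# Brookover et al. school-level figures as reported in the text
# (percentages of between-school variance in achievement).
climate_alone = 72.5   # school climate scales entered first
ses_alone = 78.5       # SES plus per cent white entered first
both = 82.6            # full model (reported as 82.6 and 82.7)

u_climate, u_ses, shared = two_block_commonality(climate_alone, ses_alone, both)
print(f"unique to climate:  {u_climate:.1f}")
print(f"unique to SES/race: {u_ses:.1f}")
print(f"shared:             {shared:.1f}")
```

On these figures roughly 68 of the 82.6 percentage points explained cannot be assigned to either block alone, which is exactly the multicollinearity problem: whichever block is entered first receives credit for the shared portion.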

Another methodological advance from the 1970s concerned the utilization of more

sensitive outcome measures (i.e. outcomes more directly linked to the courses or

curriculum taught at the schools under study). Two studies conducted by a group of

US, English, and Irish researchers (Brimer et al., 1978; Madaus et al., 1979)

demonstrated that the choice of test can have a dramatic effect on results concerning

the extent to which school characteristics affect student achievement. These two studies

utilized data from England (Brimer et al., 1978) and Ireland (Madaus et al., 1979),

where public examinations are geared to separate curricula. As the authors noted,

such examinations do not exist at a national level in the USA, as they do throughout

Europe, and there is also no uniform national curriculum in the USA.

In the Madaus study, between class variance in student level performance on

curriculum specific tests (e.g. history, geography) was estimated at 40 per cent (averaged

across a variety of tests). Madaus and his colleagues further reported that classroom

factors explained a considerably larger proportion of the unique variance (variance

attributable solely to classroom factors) on the curriculum specific tests (an average

of 17 per cent) than on standardized measures (an average of 5 per cent).

Madaus et al. (1979) and others (e.g. Carver, 1975) believed that the characteristics

of standardized tests make them less sensitive than curriculum specific tests to the

detection of differences due to the quality of schools. These standardized tests ‘cover

material that the school teaches more incidentally’ (Coleman et al., 1966, p.294).

Madaus and his colleagues believed that ‘Conclusions about the direct instructional

effects of schools should not have to rely on evidence relating to skills taught

incidentally’ (Madaus et al., 1979, p.209).

Stage 3: The Equity Emphasis and the Emergence of School

Improvement Studies

The foremost proponent of the equity ideal during Stage 3 of SER in the USA (the

late 1970s through to the mid-1980s) was Ron Edmonds, who took the results of

his own research (Edmonds, 1978, 1979a, 1979b) and that of others (e.g. Lezotte

and Bancroft, 1985; Weber, 1971) to make a powerful case for the creation of

‘effective schools for the urban poor’. Edmonds and his colleagues were no longer

interested in just describing effective schools: they also wished to create effective

schools, especially for the urban poor.

The five factor model generated through the effective schools research included

the following factors: strong instructional leadership from the principal, a pervasive

and broadly understood instructional focus, a safe and orderly school learning

environment or ‘climate’, high expectations for the achievement of all students, and

the use of student achievement test data for evaluating programme and school success.

It was at this point that the first school improvement studies (designated as Type

3A designs in Figure 1.1) began to emerge. These studies in many respects eclipsed the

study of school effectiveness in the USA for several years. These early studies (e.g.

Clark, T. and McCarthy, 1983; McCormack-Larkin, 1985; McCormack-Larkin and

Kritek, 1982; Taylor, B., 1990) were based for the most part on models that utilized

the effective schools ‘correlates’ generated from the studies described above. (This

early work and the increasingly sophisticated school improvement studies that followed are

summarized in Chapter 7.)

This equity orientation, with its emphasis on school improvement and its sampling

biases, led to predictable responses from the educational research community in the

early to mid-1980s. The hailstorm of criticism (e.g. Cuban, 1983, 1984; Firestone and

Herriot, 1982a; Good and Brophy, 1986; Purkey and Smith, 1983; Rowan, 1984;

Rowan et al., 1983; Ralph and Fennessey, 1983) aimed at the reform orientation of

those pursuing the equity ideal in SER had the effect of paving the way for the more

sophisticated studies of SER which used more defensible sampling and analysis

strategies.

School context factors were, in general, ignored during the ‘effective schools’

research era in the USA, partially due to the equity orientation of the researchers and

school improvers like Edmonds. This orientation generated samples of schools that

came only from low SES areas, not from across SES contexts, a bias that attracted

much of the considerable volume of criticism that swirled around SER in the mid to

late 1980s. As Wimpelberg et al. note:

Context was elevated as a critical issue because the conclusions about the

nature, behaviour, and internal characteristics of the effective (urban

elementary) schools either did not fit the intuitive understanding that people

had about other schools or were not replicated in the findings of research on

secondary and higher SES schools.

(Wimpelberg et al., 1989, p.85)

Stage 4: The Introduction of Context Factors and Other Methodological

Advances: Towards Normal Science

A new, more methodologically sophisticated era of SER began with the first context

studies (Hallinger and Murphy, 1986; Teddlie et al., 1985, 1990) which explored the

factors that were producing greater effectiveness in middle-class schools, suburban schools

and secondary schools. These studies explicitly explored the differences in school effects

that occur across different school contexts, instead of focusing upon one particular

context. There was also a values shift from the equity ideal to the efficiency ideal that

accompanied these new context studies. Wimpelberg et al. noted that:

the value base of efficiency is a simple but important by-product of the research

designs used in…(this) phase of the effective school research tradition.

(Wimpelberg et al., 1989, p.88)

This new value base was inclusive, since it involved the study of schools serving all

types of students in all types of contexts and emphasized school improvement across

all of those contexts. When researchers were interested solely in determining what

produces effective schools for the urban poor, their value orientation was that of

equity: how can we produce better schools for the disadvantaged? When researchers

began studying schools in a variety of contexts, their value orientation shifted to

efficiency: how can we produce better schools for any and all students?

With regard to Figure 1.1, the introduction of context variables occurred in Stage

4 of the evolution of SER in the USA. The inclusion of context had an effect on the

types of research designs being utilized in all three of the major strands of SER:

1 Within the input-output strand of SER, the introduction of context variables led

to another linkage (1C) in the causal loop (1C-1A-1B-output) depicted in Figure

1.1. Studies in this tradition may treat context variables as covariates and add

them to the expanding mathematical equations that characterize their analyses

(Creemers and Scheerens, 1994; Scheerens, 1992).

2 Within the process-product strand of SER, the introduction of context variables

led to the 2B links depicted in Figure 1.1 and generated Type 2B-2A studies.

These studies are often described in the literature as contextually sensitive studies

of school effectiveness processes. This type of research design typically utilizes

the case study approach to examine the processes ongoing in schools that vary

both by effectiveness status and at least one context variable (e.g. Teddlie and

Stringfield, 1993).

3 Within the school improvement strand of SER, the introduction of context variables

led to the more sophisticated types of studies described as Type 2B-2A-3A in Figure

1.1. These studies allow for multiple approaches to school change, depending on

the particular context of the school (e.g. Chrispeels, 1992; Stoll and Fink, 1992).

Other methodological advances have occurred in the past decade in the USA, leading

to more sophisticated research across all three strands of SER. The foremost

methodological advance in SER in the USA (and internationally) during this period

was the development of multilevel mathematical models to more accurately assess

the effects of all the units of analysis associated with schooling. Scholars from the

USA (e.g. Alexander, K., et al., 1981; Burstein, 1980a, 1980b; Burstein and Knapp,

1975; Cronbach et al., 1976; Hannan et al., 1976; Knapp, 1977; Lau, 1979) were

among the first to identify the level of aggregation as an issue for educational

research.

One of the first multilevel modelling computer programs was also developed in

the USA (Bryk et al., 1986a, 1986b) at about the same time as similar programs

were under development in the UK (e.g. Longford, 1986). Researchers from the

USA continued throughout this time period to contribute to the further refinement

of multilevel modelling (e.g. Bryk and Raudenbush, 1988, 1992; Lee, V., and Bryk,

1989; Raudenbush, 1986, 1989; Raudenbush and Bryk, 1986, 1987b, 1988;

Raudenbush and Willms, 1991; Willms and Raudenbush, 1989). Results from this

research are highlighted in Chapter 3 in the section on the estimation of the magnitude

of school effects and this entire area of research is reviewed in Chapter 6 of this

handbook.
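For readers unfamiliar with the approach, the simplest multilevel model used in school effects work is the two-level random-intercept model sketched below. It is written in conventional textbook notation rather than that of any particular study cited here. Pupil i attends school j. The term x_ij stands for a pupil-level covariate such as prior attainment or SES, u_0j is the school effect, and e_ij is the pupil-level residual.

```latex
\begin{aligned}
y_{ij} &= \beta_{0j} + \beta_{1} x_{ij} + e_{ij}, & e_{ij} &\sim N(0, \sigma_e^2),\\
\beta_{0j} &= \gamma_{00} + u_{0j}, & u_{0j} &\sim N(0, \sigma_u^2),\\
\rho &= \frac{\sigma_u^2}{\sigma_u^2 + \sigma_e^2}.
\end{aligned}
```

The quantity rho is the proportion of the remaining (covariate-adjusted) variance in outcomes that lies between schools. This is the kind of figure commonly used to express the magnitude of school effects.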

Other advances have occurred during the past decade in the USA in the

methodological areas addressed earlier under the description of Stage 2. For instance,

several studies conducted over the past decade have related behavioural indices of

teaching effectiveness (e.g. classroom management, teacher instructional style, student

time on task) to school effectiveness (e.g. Crone and Teddlie, 1995; Stringfield et al.,

1985; Teddlie et al., 1989a; Teddlie and Stringfield, 1993; Virgilio et al., 1991). These

studies have demonstrated that more effective teaching occurs at effective as opposed

to ineffective schools, using statistical techniques such as multivariate analysis of

variance.

Researchers have also continued to develop survey items, often including social

psychological indices, in an attempt to better measure the educational processes ongoing

at the school and classroom levels. For example, Rosenholtz (1988, 1989) developed

scales assessing seven different dimensions of the social organization of schools

specifically orientated for use by teachers. Pallas (1988) developed three scales from

the Administrator Teacher Survey of schools in the High School and Beyond study

(principal leadership, teacher control, and staff cooperation), and these scales have

been used in recent studies of organizational effectiveness (e.g. Rowan, Raudenbush,

and Kang, 1991).

In a similar vein, Teddlie and his colleagues (e.g. Teddlie et al., 1984; Teddlie and

Stringfield, 1993) conducted a large scale study similar to that of Brookover et al.

(1979) in 76 elementary schools during the second phase of the Louisiana School

Effectiveness Study (LSES-II). These researchers utilized the Brookover school climate

scales, analysed the data at the school level, and found results similar to those reported

by Brookover and his colleagues. The researchers utilized second order factor analysis

in an effort to deal with the problems of multicollinearity among the school climate

and family background variables.

The lack of sufficiently sensitive output measures in SER is still very much a problem

today. Fitz-Gibbon (1991b) and Willms (1985a) have commented on the inadequate

measures of school achievement used in many contemporary school effects studies.

For instance, the often used High School and Beyond database contains a short test

(only 20 items) that is supposed to measure growth in science.

The Current State of SER in the USA

Since the mid-1980s there has been much less activity in school effectiveness research

in the US. In their meta-analysis of multilevel studies of school effects that had been

conducted in the late 1980s and 1990s, Bosker and Witziers (1996) noted how few

studies they were able to identify from the USA compared to those from the UK and

the Netherlands.

There are a number of reasons for this decline in the production of school

effectiveness research in the USA:

1 the scathing criticisms of effective schools research, which led many educational

researchers to steer away from the more general field of school effectiveness research

and fewer students to choose the area for dissertation studies after the mid-1980s

(e.g. Cuban, 1993);

2 several of the researchers who had been interested in studying school effects moved

towards the more applied areas of effective schools research and school

improvement research (e.g. Brookover et al., 1984);

3 other researchers interested in the field moved away from it in the direction of

new topics such as school restructuring and school indicator systems;

4 in the mid-1980s, there was an analytical muddle, with researchers aware of the

units of analysis issue, yet not having commercially available multilevel

programs, and this situation discouraged some researchers;

5 tests of the economic input-output models failed to find significant relationships

between financially driven inputs and student achievement (e.g. Geske and Teddlie,

1990; Hanushek, 1981, 1986), and research in that area subsided;

6 federal funding for educational research plummeted during the Reagan/Bush

administrations (1980–92) (Good, 1989), and departments of education became more

involved in monitoring accountability, rather than in basic research;

7 communication amongst the SER research community broke down, with the more

‘scientifically’ oriented researchers becoming increasingly involved with the

statistical issues associated with multilevel modelling, rather than with the

educational ramifications of their research.

SER in the United Kingdom

School effectiveness research in the United Kingdom also had a somewhat difficult

intellectual infancy. The hegemony of traditional British educational research, which

was orientated to psychological perspectives and an emphasis upon individuals and

families as determinants of educational outcomes, created a professional educational

research climate somewhat unfriendly to school effectiveness research, as shown by

the initially very hostile British reactions to the classic Rutter et al. (1979) study

Fifteen Thousand Hours (e.g. Goldstein, 1980). Other factors that hindered early

development were:

1 The problems of gaining access to schools for research purposes, in a situation

where the educational system was customarily used to considerable autonomy

from direct state control, and in a situation where individual schools had

considerable autonomy from their local educational authorities (or districts), and

where individual teachers had considerable autonomy within schools.

2 The absence of reliable and valid measures of school institutional climate (a marked

contrast to the situation in the United States for example).

3 The arrested development of British sociology of education’s understanding of the

school as a determinant of adolescent careers, where pioneering work by Hargreaves

(1967) and Lacey (1970) was de-emphasized by the arrival of Marxist perspectives

that stressed the need to work on the relationship between school and society (e.g.

Bowles and Gintis, 1976).

Early work in this field came mostly from a medical and medico-social environment,

with Power (1967) and Power et al. (1972) showing differences in delinquency rates

between schools and Gath (1977) showing differences in child guidance referral rates.

Early work by Reynolds and associates (1976a, 1976b, 1982) into the characteristics

of the learning environments of apparently differentially effective secondary schools,

using group based cross sectional data on intakes and outcomes, was followed by

work by Rutter et al. (1979) on differences between schools measured on the outcomes

of academic achievement, delinquency, attendance and levels of behavioural problems,

utilizing this time a cohort design that involved the matching of individual pupil data

at intake to school and at age 16.

Subsequent work in the 1980s included:

1 ‘Value-added’ comparisons of educational authorities on their academic outcomes

(Department of Education and Science, 1983, 1984; Gray and Jesson, 1987; Gray

et al., 1984; Woodhouse, G., and Goldstein, 1988; Willms, 1987).

2 Comparisons of ‘selective’ school systems with comprehensive or ‘all ability’ systems

(Gray et al., 1983; Reynolds et al., 1987; Steedman, 1980, 1983).

3 Work into the scientific properties of school effects, such as size (Gray, 1981,

1982; Gray et al., 1986), the differential effectiveness of different academic

sub-units or departments (Fitz-Gibbon, 1985; Fitz-Gibbon et al., 1989; Willms and

Cuttance, 1985c), contextual or ‘balance’ effects (Willms, 1985b, 1986, 1987)

and the differential effectiveness of schools upon pupils of different characteristics

(Aitkin and Longford, 1986; Nuttall et al., 1989).

4 Small scale studies that usually focused upon one outcome and attempted to relate

this to various within-school processes. This was particularly interesting in the

cases of disruptive behaviour (Galloway, 1983) and disciplinary problems

(Maxwell, 1987; McLean, 1987; McManus, 1987).
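The basic logic of a "value-added" comparison can be shown with a deliberately simplified sketch. Pupils' outcomes are regressed on their intake scores, and each school's residuals are then averaged. The Python below makes several assumptions: a single intake measure, ordinary least squares at the pupil level, and invented data for two hypothetical schools, A and B. The multilevel models used in the later British studies additionally shrink school estimates towards the mean, which this sketch does not do.

```python
from collections import defaultdict
from statistics import mean


def value_added(pupils):
    """Mean intake-adjusted residual per school (single-level OLS sketch).

    pupils -- list of (school, intake_score, outcome_score) tuples.
    """
    xs = [x for _, x, _ in pupils]
    ys = [y for _, _, y in pupils]
    x_bar, y_bar = mean(xs), mean(ys)
    # Pooled least-squares slope and intercept of outcome on intake.
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar

    # Group each pupil's residual (actual minus predicted outcome) by school.
    residuals = defaultdict(list)
    for school, x, y in pupils:
        residuals[school].append(y - (intercept + slope * x))
    return {school: mean(r) for school, r in residuals.items()}


# Hypothetical data: school B has the higher raw mean (11 against 9)
# but also the more advantaged intake.
pupils = [("A", 0, 4), ("A", 10, 14), ("B", 10, 6), ("B", 20, 16)]
print(value_added(pupils))
# → {'A': 2.0, 'B': -2.0}
```

After allowing for intake, school A appears to add more than school B, which reverses the raw ranking. In practice such estimates also need confidence intervals before schools are compared.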

Towards the end of the 1980s, two landmark studies appeared, concerning school

effectiveness in primary schools (Mortimore et al., 1988) and in secondary schools

(Smith and Tomlinson, 1989). The Mortimore study was notable for the wide range

of outcomes on which schools were assessed (including mathematics, reading, writing,

attendance, behaviour and attitudes to school), for the collection of a wide range of

data upon school processes and, for the first time in British school effectiveness research,

for a focus upon classroom processes.

The Smith and Tomlinson (1989) study is notable for the large differences shown

in academic effectiveness between schools: for certain groups of pupils, the

variation in examination results between similar individuals in different schools

amounted to up to a quarter of the total variation in examination results. The study

is also notable for the substantial variation that it reported on results in different

school subjects, reflecting the influence of different school departments—out of 18

schools, the school that was positioned ‘first’ on mathematics attainment, for example,

was ‘fifteenth’ in English achievement (after allowance had been made for intake

quality). A third study by Tizard et al. (1988) also extended the research methodology

into the infant school sector.
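Smith and Tomlinson found that a school could rank first in one subject and fifteenth in another. This kind of inconsistency is often summarized with a rank correlation between schools' adjusted results in two subjects, or in two years. The Python sketch below computes Spearman's rho with the standard formula for untied ranks. The value-added scores for five schools are invented for illustration, and the helper names are hypothetical.

```python
def ranks(scores):
    """Rank schools from 1 (highest score) downwards; assumes no tied scores."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    result = [0] * len(scores)
    for rank, i in enumerate(order, start=1):
        result[i] = rank
    return result


def spearman_rho(scores_a, scores_b):
    """Spearman rank correlation, 1 - 6*sum(d^2) / (n(n^2 - 1)), for untied data."""
    ra, rb = ranks(scores_a), ranks(scores_b)
    n = len(ra)
    d2 = sum((a - b) ** 2 for a, b in zip(ra, rb))
    return 1 - 6 * d2 / (n * (n ** 2 - 1))


# Hypothetical value-added scores for the same five schools in two subjects.
maths = [3.1, 1.4, 0.2, -0.9, -2.5]
english = [-0.8, 2.0, 1.1, -1.7, 0.3]
print(round(spearman_rho(maths, english), 2))
# → 0.2
```

A value near 1 would indicate that schools keep much the same order across subjects. A value near 0, as here, indicates that effectiveness in one subject says little about effectiveness in the other.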

Ongoing work in the United Kingdom remains partially situated within the same

intellectual traditions and at the same intellectual cutting edges as in the 1980s, notably

in the areas of:

1 Stability over time of school effects (Goldstein et al., 1993; Gray et al., 1995;

Thomas et al., 1997).

2 Consistency of school effects on different outcomes—for example, in terms of

different subjects or different outcome domains such as cognitive/affective

(Goldstein et al., 1993; Sammons et al., 1993b; Thomas et al., 1994).

3 Differential effects of schools for different groups of students, for example, of

different ethnic or socio-economic backgrounds or with different levels of prior

attainment (Goldstein et al., 1993; Jesson and Gray, 1991; Sammons et al., 1993b).

4 The relative continuity of the effect of school sectors over time (Goldstein, 1995a;

Sammons et al., 1995a).

5 The existence or size of school effects (Daly, 1991; Gray et al., 1990; Thomas,

Sammons and Mortimore, 1997), where there are strong suggestions that primary

school effects may be larger than those of secondary schools (Sammons

et al., 1993b, 1995a).

6 Departmental differences in educational effectiveness (Fitz-Gibbon, 1991b, 1992),

researched from the ALIS (A Level Information System) method of performance

monitoring, which involves rapid feedback of pupil level data to schools. Fitz-

Gibbon and her colleagues have added systems to include public examinations

at age 16, a scheme known as YELLIS (Year 11 Information System), in operation

since 1993, a baseline test for secondary schools (MidYIS, Middle Years

Information System), and PIPS (Performance Indicators in Primary School)

developed by Tymms. These systems now involve the largest datasets in school

effectiveness research in the UK, with a third of UK A level results, one in four

secondary schools in YELLIS and over four thousand primary schools receiving

feedback each year.

Additional recent foci of interest have included:

1 Work at the school effectiveness/special educational needs interface, studying how

schools vary in their definitions, labelling practices and teacher-pupil interactions

with such children (Brown, S. et al., 1996).

2 Work on the potential ‘context specificity’ of effective schools’ characteristics

internationally, as in the International School Effectiveness Research Project

(ISERP), a nine nation study that involved schools in the United Kingdom, the

United States, the Netherlands, Canada, Taiwan, Hong Kong, Norway, Australia

and the Republic of Ireland. The study involved samples of originally 7-year-old

children as they passed through their primary schools within areas of different

socioeconomic status, and was aimed at generating an understanding of those

factors associated with effectiveness at school and class levels, with variation in

these factors being utilized to generate more sensitive theoretical explanations

concerning the relationship between educational processes and pupil outcomes

(Reynolds et al., 1994a, 1995; Creemers et al., 1996). Useful reviews of cross

national studies have also been done (Reynolds and Farrell, 1996) and indeed the

entire issue of international country effects has recently been a particularly live one

in the United Kingdom (see Alexander, R., 1996b for an interesting critique of

the area).

3 The ‘site’ of ineffective schools, the exploration of their characteristics and the

policy implications that flow from this (Barber, 1995; Reynolds, 1996; Stoll and

Myers, 1997).

4 The possibility of routinely assessing the ‘value-added’ of schools using already

available data (Fitz-Gibbon and Tymms, 1996), rather than by utilization of

specially collected data.

5 The characteristics of ‘improving’ schools and those factors that are associated

with successful change over time, especially important at the policy level since

existing school effectiveness research gives only the characteristics of schools that

have become effective (this work was undertaken by Gray et al. (1999)).

6 The description of the characteristics of effective departments (Harris, A. et al.,

1995; Sammons et al., 1997).

7 The application of school effectiveness techniques to sectors of education where

the absence of intake and outcome measures has made research difficult, as in the

interesting foray into the early years of schooling of Tymms et al. (1997), in which

the effects of being in the reception year prior to compulsory schooling were

dramatic (an effect amounting to two standard deviations) and where the school

effects on pupils in this year were also very large (approximately 40 per cent of

variation accounted for). The Department for Education and Employment funded

project into pre-school provision at the Institute of Education, London, should

also be noted as promising.

Further reviews of the British literature are available in Gray and Wilcox (1995),

Mortimore (1991a, 1991b, 1998), Reynolds et al. (1994c), Reynolds and Cuttance

(1992), Reynolds, Creemers and Peters (1989), Rutter (1983a, 1983b), Sammons et

al. (1995b), and Sammons (1999).

It is also important to note the considerable volume of debate and criticism about

school effectiveness now in existence in the United Kingdom (White and Barber, 1997;

Slee et al., 1998; Elliott, 1996; Hamilton, 1996; Pring, 1995; Brown, S., et al., 1995)

concerning its reputedly conservative values stance, its supposed managerialism, its

appropriation by government and its creation of a societal view that educational

failure is solely the result of poor schooling, rather than the inevitable result of the

effects of social and economic structures upon schools and upon children. Given the

centrality of school effectiveness research in the policy context of the United Kingdom,

more of such material can be expected.

SER in the Netherlands

Although educational research has been a well-established speciality area in the

Netherlands since the late 1970s, SER did not begin until the mid-1980s. Research

on teacher effectiveness, school organization and the educational careers of

students from the very varied selective educational systems of the Netherlands had,

however, all been a focus of earlier interest (historical reviews are available in

Creemers and Knuver, 1989; Scheerens, 1992).

Several Dutch studies address the issue of consistency of effectiveness across

organizational sub-units and across time (stability). Luyten (1994c) found inconsistency

across grades: the difference between subjects within schools appeared to be larger

than the general differences between schools. Moreover, the school effects for each

subject also varied. Doolaard (1995) investigated stability over time by replicating

the school effectiveness study carried out by Brandsma and Knuver (1989).

In the context of the studies of the International Association for the Evaluation of Educational Achievement (IEA), attention

was given to the development and the testing of an instrument for the measurement

of opportunity to learn, which involves the overlap between the curriculum and the

test (De Haan, 1992; Pelgrum, 1989). In the Netherlands, teachers included in the

Second International Mathematics Study and the Second International Science Study

tended to underrate the amount of subject matter presented to the students.

Furthermore, for particular sub-sets of the items, teachers in the Netherlands made

serious mistakes in judging whether the corresponding subject matter had been taught

before the testing date (Pelgrum, 1989).

Bosker (1990) found evidence for differential effects of school characteristics on

the secondary school careers of low and high SES pupils. High and low SES pupils

similarly profit from the type of school that appeared to be most effective: the cohesive,

goal-oriented and transparently organized school. The variance explained by school

types, however, was only 1 per cent. With respect to the departmental level, some

support for the theory of differential effects was found. Low SES pupils did better in

departments characterized by consistency and openness, whereas high SES pupils

were better off in other department types (explained variance 1 per cent).

A couple of studies have covered specific factors associated with effectiveness,

including a study on grouping procedures at the classroom level (Reezigt, 1993).

Reezigt found that the effects of grouping procedures on student achievement are

either the same as those of whole class teaching or, in some cases, even negative.

Differential effects (i.e. interaction effects of grouping procedures with

student characteristics on achievement or attitudes), are hardly ever found in studies

from the Netherlands. The effects of grouping procedures must to some extent be

attributed to the classroom context and instructional characteristics. Ros (1994) found

no effects of cooperation between students on student achievement. She analysed the

effects of teaching behaviour during peer group work, using multilevel analysis.

Monitoring, observing and controlling students and having frequent short contacts

with all groups promote not only on-task behaviour but also effective student

interaction.

Freibel (1994) investigated the relationship between curriculum planning and student

examination results. His survey study provided little empirical support for the

importance of proactive and structured planning at the school and departmental levels.

In a case study including more and less effective schools, he found an indication that

educational planning at the classroom level, in direct relation to instruction, may

have a slightly positive effect on student results. Van de Grift developed instruments

for measuring instructional climate and educational leadership. With respect to

educational leadership he found that there is a difference between his earlier results

(van de Grift, 1990) and later findings. Principals in the Netherlands nowadays show

more characteristics of educational leadership and these characteristics are related to

student outcomes (Lam and van de Grift, 1995), whereas in his 1990 study such

effects were not shown.

Several studies have been concerned with the relationship between variables and

factors of effectiveness at the school and classroom levels, and even at the contextual

level in relation to student outcomes. Brandsma (1993) found empirical evidence for

the importance of some factors like evaluation of pupils and the classroom climate,

but for the most part the study could not confirm factors and variables mentioned in school

effectiveness studies in the UK and the USA. Hofman (1993) found empirical evidence

for the importance of school boards with respect to effectiveness, since they have a

contextual impact on the effectiveness of the schools they govern. It seems important

that school boards look for ways of communication with the different authority levels

in the school organization, so that the board’s policy-making becomes a mutual act of

the school board and the relevant participants in the school. Witziers (1992) found

that an active role of school management in policy and planning concerning the

instructional system is beneficial to student outcomes. His study also showed the

effects of differences associated with teacher cooperation between departments.

In the mid-1990s attention was given to studies that are strongly related to more or

less explicit models of educational effectiveness. In the studies carried out at the

University of Twente, the emphasis was placed on the organizational level, focusing,

for instance, on the evaluation policy of the school, in relationship to what happens

at the instructional level. In Groningen, ideas about instructional effectiveness provide

the main perspectives (Creemers, 1994). Here the emphasis is more on the classroom

level and grouping procedures and the media for instruction, like the teacher and

instructional materials and the classroom-school interface. These studies include more

recently developed theories, ideas, opinions, and ideologies with respect to instruction

and school organization, like the constructivist and restructuring approaches.

A cutting edge study related to both the instructional and school levels, carried out

by the Universities of Twente and Groningen was an experimental study aimed at

comparing school-level and classroom-level determinants of mathematics achievement

in secondary education. It was one of the rare examples of an effectiveness study in

which treatments are actively controlled by researchers. The experimental treatments

consisted of training courses that teachers received and feedback to teachers and

pupils. Four conditions were compared: a condition where teachers received special

training in the structuring of learning tasks and providing feedback on achievement

to students; a similar condition to which consultation sessions with school leaders

were added; a condition where principals received feedback about student achievement;

and a no-treatment condition. Stated in very general terms, the results seemed to

support the predominance of the instructional level and of teacher behaviours

(Brandsma et al., 1995).

Table 1.1 presents an overview of extant Dutch school effectiveness studies in primary and secondary education. The left-hand column lists the main organizational and instructional effectiveness-enhancing conditions known from the international literature; the other columns show the total number of significant positive and negative correlations between these conditions and educational attainment. The basic information for each of these studies was presented in Scheerens and Creemers (1995).

Table 1.1 covers 29 effectiveness studies in primary education and 13 in secondary education, indicating that primary education is the main sector for effectiveness research in the Netherlands. In about 25 per cent of the studies, the research sample consisted of primary or secondary schools with a majority of lower-SES pupils and pupils from minority groups.

The row near the bottom of the table showing the percentage of variance that lies between schools indicates the importance of the ‘school’ factor in Dutch education. The average percentage is 9 in primary schools and 13.5 in secondary schools. Note that these figures are averaged not only over studies but also over output measures. Generally, schools have a greater effect on mathematics than on language/reading, as suggested in Fitz-Gibbon (1992).
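Because the reported averages pool both studies and output measures, the mix of outcomes in each study affects the headline figure. The short Python sketch below illustrates this with invented percentages (not the data behind Table 1.1) and hypothetical study labels, contrasting a pooled average with averages taken separately for each output measure.

```python
from statistics import mean

# Invented percentages of variance lying between schools, keyed by
# (study, output measure). Illustrative only: NOT the Table 1.1 data.
between_school_pct = {
    ("study_A", "mathematics"): 12.0,
    ("study_A", "language"): 7.0,
    ("study_B", "mathematics"): 11.0,
    ("study_B", "language"): 6.0,
    ("study_C", "language"): 8.0,
}

# One figure pooled over every study AND every output measure.
pooled = mean(between_school_pct.values())

# Separate averages per output measure, pooling only over studies.
by_output = {
    subject: mean(pct for (_, s), pct in between_school_pct.items() if s == subject)
    for subject in ("mathematics", "language")
}

print(f"pooled: {pooled:.1f}")
for subject, pct in by_output.items():
    print(f"{subject}: {pct:.1f}")
```

With these assumed values the pooled figure (8.8) lies between the mathematics (11.5) and language (7.0) averages, and adding or dropping a language-only study such as the hypothetical study_C shifts it.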

In most cases, the between-school variances were computed after adjustment for pupil-level covariables, so they indicate the ‘net influence’ of schooling after controlling for differences in pupil intake. Because most primary schools had just one class per grade level at the time these studies were conducted, the school and classroom levels in primary schools usually coincide. A final note on the between-school variances in Table 1.1: only some studies computed intra-class correlations, which will generally be higher than the between-school variances, since an intra-class correlation is the ratio of the between-school variance to the sum of the between-school and between-pupil variances.
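The intra-class correlation described above can be computed directly from the two variance components. The Python sketch below does so with hypothetical variance values. The comparison with a ‘share of variance’ figure rests on an assumption, namely one possible reading of the text: that the table's percentages express the adjusted between-school variance as a share of the total unadjusted variance. Under that reading the intra-class correlation, whose denominator is the smaller adjusted total, comes out higher.

```python
def intraclass_correlation(var_school: float, var_pupil: float) -> float:
    """Between-school variance divided by (between-school + between-pupil) variance."""
    total = var_school + var_pupil
    if total <= 0:
        raise ValueError("variance components must sum to a positive value")
    return var_school / total


# Hypothetical figures for illustration only; they are not taken from Table 1.1.
RAW_TOTAL_VARIANCE = 100.0   # total variance of unadjusted pupil scores (assumed)
ADJ_SCHOOL_VARIANCE = 6.0    # between-school variance after intake adjustment (assumed)
ADJ_PUPIL_VARIANCE = 54.0    # between-pupil variance after intake adjustment (assumed)

# Assumed reading: adjusted between-school variance as a share of the raw total.
share_of_raw = ADJ_SCHOOL_VARIANCE / RAW_TOTAL_VARIANCE
# The ICC divides by the (smaller) adjusted total, so it is larger.
icc = intraclass_correlation(ADJ_SCHOOL_VARIANCE, ADJ_PUPIL_VARIANCE)

print(f"between-school share of raw variance: {share_of_raw:.3f}")
print(f"intra-class correlation:              {icc:.3f}")
```

With these assumed values the script prints 0.060 and 0.100. The same between-school variance looks larger once it is expressed relative to the residual total rather than the raw total.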

When considering the factors that ‘work’ in Dutch education, the conclusion from Table 1.1 must be that the effective schools model is not confirmed by the data. The two supposedly effectiveness-enhancing conditions most commonly found to have a significant positive association with the effectiveness criteria in primary schools (structured teaching and evaluation practices) appear in no more than five of the 29 studies. Moreover, in both primary and secondary education, ‘other’ factors predominate among those associated with effectiveness. It is also striking that where an effect of instructional leadership or of differentiation is found, it is often negative. The set of ‘other’ characteristics is very heterogeneous. The most frequently found is ‘denomination’, often (but not always) with an advantage for ‘church schools’ with a Roman Catholic or Protestant orientation over public schools. The large number of independent variables in most studies, and the fact that only a few of these are statistically significant (sometimes dangerously close to the 5 per cent that would be expected by chance alone), add to the uneasiness that the figures in Table 1.1 convey about the fruitfulness of research driven by the conventional American school effectiveness model. Some consolation can be drawn from the fact that the most positive results come from the most sophisticated study in this area (Knuver and Brandsma, 1993). In recent years, perhaps the other major contribution of Dutch SER has been the development of an ‘educational effectiveness’ paradigm, which integrates the findings of teacher/instructional effectiveness research and of school effectiveness research into a coherent, middle-range set of theoretical postulates (for further examples see Scheerens and Bosker, 1997).
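The worry about significance rates close to chance can be made concrete. If a study tests many variables at the 5 per cent level and none has any real effect, about 5 per cent of them will still come out ‘significant’. The Python sketch below computes that expectation and the binomial probability of seeing at least a given number of significant results by chance alone. The study size (40 variables) and count (3 significant) are hypothetical, and treating the tests as independent is a simplification, since school-level variables are usually correlated.

```python
from math import comb


def expected_chance_hits(n_tests: int, alpha: float = 0.05) -> float:
    """Expected number of 'significant' results when no variable has a real effect."""
    return n_tests * alpha


def prob_at_least(m: int, n_tests: int, alpha: float = 0.05) -> float:
    """Probability that at least m of n_tests reach significance by chance alone.

    Binomial upper tail: sum over j = m..n_tests of
    C(n_tests, j) * alpha**j * (1 - alpha)**(n_tests - j).
    Assumes the tests are independent (a simplification).
    """
    return sum(
        comb(n_tests, j) * alpha**j * (1 - alpha) ** (n_tests - j)
        for j in range(m, n_tests + 1)
    )


# Hypothetical study: 40 variables tested, 3 significant at the 5 per cent level.
n_variables, n_significant = 40, 3
print(f"expected by chance: {expected_chance_hits(n_variables):.1f}")
print(f"P(>= {n_significant} significant by chance): "
      f"{prob_at_least(n_significant, n_variables):.3f}")
```

With these assumed numbers, about two significant results are expected from chance alone, and three or more would appear by chance roughly a third of the time (a probability of about 0.32). A handful of significant coefficients among many tested variables therefore gives little reassurance.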

Table 1.1 Dutch school effectiveness studies: total number of positive and negative correlations between selected factors and educational achievement

Note: Not all variables mentioned in the columns were measured in each and every study.

Source: Creemers and Osinga, 1995.

SER in Australia

Australians were initially reluctant to become involved in SER, largely because of concerns about standardized testing procedures. The emphasis on student performance on standardized achievement tests as the key measure of an ‘effective’ school, as proposed by Edmonds (1979a, 1979b), met with varying degrees of scepticism. A number of Australian researchers, such as Angus (1986a, 1986b), Ashenden (1987) and Banks (1988), clearly expressed their concern that concentrating on effectiveness as Edmonds had originally defined it meant a diminution of concern about other equally relevant educational issues such as equality, participation and social justice. This makes clear that, although there was no commonly agreed understanding of what an effective school was, the critics were sure of what it was not.

There was little early Australian research on school effectiveness. Mellor and Chapman (1984), Caldwell and Misko (1983), Hyde and Werner (1984), Silver and Moyle (1985) and Caldwell and Spinks (1986) all identified characteristics of school effectiveness, but these studies were arguably more concerned with school improvement than with school effectiveness research. Nevertheless, they were forerunners of many later studies that used school effectiveness as a basis for developing new perceptions of schooling.

On the international front, Caldwell and Spinks (1988, 1992) introduced the notion of self-managing schools, which has served as a model for many of the structural changes being made by educational systems in a number of countries, including Australia, New Zealand and the UK. Chapman’s work for the OECD and UNESCO has been internationally recognized for its contribution to the debate on principals and leadership (1985, 1991a), decentralization (1992) and resource allocation (1991b).

Much of the research on school effectiveness has been driven by governments’ need to justify changes intended to relate education more closely to the needs of the Australian economy. Consequently, a number of large-scale research projects over the past few years have been commissioned or supported by national and state governments.

In an attempt to bring about improvement at the school level, the Australian Education Council (AEC) initiated the Good Schools Strategy. Its first stage was the Effective Schools Project, a research activity commissioned in 1991 and conducted by the Australian Council for Educational Research (ACER). More than 2300 Australian schools (almost 30 per cent) responded to an open-ended questionnaire asking school communities which aspects of schooling they thought were important in determining effectiveness. The report of this research (McGaw et al., 1992) found that the things most often said to make schools effective were staff (65 per cent of responses), ethos (58 per cent), curriculum (52 per cent) and resources (48 per cent). In summarizing their findings, the researchers identified the following implications for educational policy makers:

• Accountability is a local issue, a matter of direct negotiation between schools and their communities. There is remarkably little interest in large-scale testing programmes, national profiles and other mechanisms designed to externalise the accountability of schools.

• Problems of discipline and behaviour management do not appear as major barriers to school effectiveness and school improvement. They exist, of course, but only in a minority of responses, and even there rarely as a major concern.

• School effectiveness is about a great deal more than maximising academic achievement. Learning, and the love of learning; personal development and self-esteem; life skills, problem solving and learning how to learn; the development of independent thinkers and well-rounded, confident individuals, all rank as highly or more highly in the outcomes of effective schooling as success in a narrow range of academic disciplines.

• The role of central administrators is to set broad policy guidelines and to support schools in their efforts to improve, particularly through the provision of professional support services (McGaw et al., 1992, p.174).

In addition to this large-scale research, other national studies were designed to improve the impact of schooling on individual students. In 1992 the AEC commissioned a national study on educational provision for students with disabilities as part of its attempt to achieve the national goals related to equity. The study aimed to evaluate the effectiveness of the current programme and to establish a range of best practices for the 56,000 people identified as having disabilities.

The Primary School Planning Project (PSPP) was designed to document current practice in school-based planning in primary schools and to gain insights into practice in specific primary schools (Logan et al., 1994). The study used qualitative techniques to build a national picture, drawing on 2200 schools and involving principals, associated administrators, teachers and parents who had taken part in school planning activities.

A number of studies related to improving the effectiveness of schools have been carried out in the last few years. These have considered a variety of issues, including the concept of school effectiveness (Banks, 1992; Hill, 1995; Townsend, 1994, 1997), the concept of school quality (Aspin et al., 1994; Chapman and Aspin, 1997), classroom effectiveness, including the factors affecting student reading achievement (Rowe, 1991; Rowe and Rowe, 1992; Crévola and Hill, 1997), the characteristics of schools in which students perform well in both